Operating Systems Notes — Fundamentals to Kernel Programming

Comprehensive guide to OS concepts, memory, processes, scheduling and kernel internals

A Comprehensive Journey Through Operating System Concepts, Architecture, and Implementation

1. Introduction to Operating Systems

What is an Operating System?

An Operating System (OS) is sophisticated system software that manages computer hardware resources and provides an environment for application programs to execute efficiently. It serves as the critical intermediary layer between the bare metal hardware and user applications, abstracting complex hardware details while providing essential services.

Core Responsibilities:

- Resource Management: Orchestrates CPU time, memory allocation, storage access, and I/O device operations

- Hardware Abstraction: Shields applications from hardware complexity through standardized interfaces

- Process Isolation: Ensures programs run independently without interfering with each other

- Security Enforcement: Implements access controls and protection mechanisms

- User Interface: Provides means for users to interact with the system

Why Study Operating Systems?

Understanding operating systems is fundamental for several critical reasons:

Foundation of All Computing

- Nearly every application executes atop an operating system

- OS behavior directly impacts application performance, security, and reliability

- Modern cloud infrastructure, mobile devices, and embedded systems all rely on OS principles

Performance Optimization

- Deep OS knowledge enables writing high-performance code

- Understanding scheduling, caching, and memory management leads to better algorithmic choices

- Ability to diagnose and resolve performance bottlenecks

Security and Reliability

- OS provides the trust boundary for system security

- Knowledge of OS internals helps identify and prevent vulnerabilities

- Understanding process isolation and memory protection prevents common exploits

Career Advancement

- Essential knowledge for system programming, embedded development, and infrastructure roles

- Required for roles in kernel development, device drivers, and low-level software

- Valuable for cloud architecture, distributed systems, and high-performance computing

Historical Evolution

First Generation (1945-1955): Vacuum Tubes and Plugboards

- Characteristics: No operating system concept; direct hardware programming

- Programming Method: Machine language with plugboards for connections

- Key Limitation: One program at a time; no multiprogramming

- Example Systems: ENIAC, UNIVAC I

Second Generation (1955-1965): Transistors and Batch Systems

- Key Innovation: Introduction of batch processing

- Batch System Concept: Group similar jobs together; process sequentially

- Advantages: Reduced setup time; improved resource utilization

- Programming: Assembly language and early compilers (FORTRAN, COBOL)

- Notable Systems: IBM 7094, Atlas Computer

Third Generation (1965-1980): Integrated Circuits and Multiprogramming

- Breakthrough: Integrated circuits enabled smaller, faster, and cheaper computers

- Multiprogramming: Multiple jobs in memory simultaneously; CPU switches between them

- Time-Sharing: Interactive computing with multiple users

- Key Concepts: Spooling, virtual memory, file systems

- Landmark Systems: IBM System/360, UNIX (1969), MULTICS

Fourth Generation (1980-Present): Personal Computers

- Revolution: Microprocessors enabled personal computers

- GUI Introduction: Graphical User Interfaces (Windows, Mac OS, X Window System)

- Network Operating Systems: Client-server architectures

- Notable Systems: MS-DOS, Windows, Mac OS, Linux (1991)

Fifth Generation (1990-Present): Mobile and Distributed Computing

- Mobile OS: iOS (2007), Android (2008)

- Cloud Computing: Virtualization, containerization

- Distributed Systems: Microservices architecture

- IoT: Embedded and real-time operating systems

- Modern Trends: Rust-based OS development, RISC-V architecture

2. Computer Hardware Fundamentals

Understanding operating systems requires solid knowledge of underlying hardware components.

The Transistor: Building Block of Computing

A transistor is a semiconductor device functioning as an electronic switch with two states: conducting (ON) or non-conducting (OFF). These binary states represent the fundamental 0s and 1s of digital computing.

Key Facts:

- Modern CPUs contain billions of transistors (Apple M2: ~20 billion; AMD EPYC: ~40+ billion)

- Transistor density follows Moore’s Law: doubling approximately every two years

- Advanced manufacturing processes now reach 3nm and smaller

Transistor Evolution:

1947: First transistor (Bell Labs) - Point-contact type

1959: Planar silicon transistor - Foundation for ICs

1971: Intel 4004 - 2,300 transistors

2023: AMD EPYC Genoa - 57 billion transistorsIntegrated Circuits (ICs)

An Integrated Circuit is a complete electronic circuit fabricated on a single semiconductor wafer (typically silicon). It contains millions or billions of interconnected transistors, resistors, and capacitors forming complex circuitry.

Types:

- SSI (Small-Scale Integration): < 100 components

- MSI (Medium-Scale Integration): 100-1000 components

- LSI (Large-Scale Integration): 1000-100,000 components

- VLSI (Very Large-Scale Integration): > 100,000 components

- ULSI (Ultra Large-Scale Integration): > 1 million components

Central Processing Unit (CPU)

The CPU is the primary computational engine, often called the “brain” of the computer. It fetches instructions from memory, decodes them, executes operations, and stores results.

CPU Architecture Components

1. Arithmetic Logic Unit (ALU)

Functions:

├── Arithmetic Operations

│ ├── Addition, Subtraction

│ ├── Multiplication, Division

│ └── Modulo operations

└── Logical Operations

├── AND, OR, XOR, NOT

├── Bit shifting (Left/Right)

└── Comparison operationsImplementation Concept:

// Simplified ALU operation representation

uint32_t alu_execute(uint8_t operation, uint32_t operand_a, uint32_t operand_b) {

switch(operation) {

case ALU_ADD: return operand_a + operand_b;

case ALU_SUB: return operand_a - operand_b;

case ALU_AND: return operand_a & operand_b;

case ALU_OR: return operand_a | operand_b;

case ALU_XOR: return operand_a ^ operand_b;

case ALU_SHL: return operand_a << operand_b;

case ALU_SHR: return operand_a >> operand_b;

default: return 0; // Invalid operation

}

}2. Control Unit (CU)

The Control Unit orchestrates CPU operations by:

- Fetching instructions from memory

- Decoding instruction opcodes

- Generating control signals for other components

- Managing instruction sequencing

Instruction Cycle (Fetch-Decode-Execute):

1. FETCH: Retrieve instruction from memory[PC]

2. DECODE: Interpret opcode and extract operands

3. EXECUTE: Perform operation via ALU or other units

4. STORE: Write results back to registers/memory

5. INCREMENT: Update Program Counter (PC)3. Registers

Registers are ultra-fast storage locations within the CPU for immediate data access.

Essential Register Types:

| Register | Purpose | Description |

|---|---|---|

| Program Counter (PC) | Next Instruction Address | Points to memory address of next instruction to execute |

| Instruction Register (IR) | Current Instruction | Holds instruction currently being executed |

| Accumulator (ACC) | ALU Results | Stores intermediate arithmetic/logic results |

| Memory Address Register (MAR) | Memory Address | Contains address for memory read/write operations |

| Memory Data Register (MDR) | Memory Data | Buffer for data read from or written to memory |

| Stack Pointer (SP) | Stack Top | Points to top of the runtime stack |

| Status Register (FLAGS) | Processor State | Contains condition codes (Zero, Carry, Negative, Overflow) |

| General Purpose Registers | Data Storage | R0-R15 (ARM), RAX-R15 (x86-64) for various operations |

Register Example (x86-64 Architecture):

; Example: Adding two numbers using registers

mov rax, 10 ; Load 10 into RAX register

mov rbx, 20 ; Load 20 into RBX register

add rax, rbx ; Add RBX to RAX (result: RAX = 30)

mov [result], rax ; Store result in memory4. Cache Memory Hierarchy

Modern CPUs employ multi-level caching to bridge the speed gap between processor and main memory.

Cache Levels:

CPU Registers (< 1 KB)

↓ Access: 0 cycles

L1 Cache (32-64 KB per core)

↓ Access: 1-4 cycles

L2 Cache (256-512 KB per core)

↓ Access: 10-20 cycles

L3 Cache (8-64 MB shared)

↓ Access: 40-70 cycles

Main Memory (4-256 GB)

↓ Access: 100-300 cycles

SSD Storage (128 GB - 4 TB)

↓ Access: ~50,000 cycles

HDD Storage (500 GB - 20 TB)

↓ Access: ~10,000,000 cyclesCache Properties:

- Cache Line: Typically 64 bytes; unit of transfer between cache levels

- Cache Hit: Data found in cache (fast access)

- Cache Miss: Data not in cache, must fetch from lower level (slow)

- Cache Coherence: Maintaining consistency across multiple caches in multicore systems

Cache Coherence Protocols:

- MESI Protocol (Modified, Exclusive, Shared, Invalid)

- MOESI Protocol (adds Owned state)

- Ensures all CPUs see consistent memory values

Graphics Processing Unit (GPU)

GPUs are specialized processors optimized for parallel computation, originally designed for graphics rendering but now widely used for general-purpose computing.

Key Differences from CPUs:

| Aspect | CPU | GPU |

|---|---|---|

| Core Count | 4-64 high-performance cores | 1000s of simpler cores |

| Design Philosophy | Optimized for latency | Optimized for throughput |

| Task Suitability | Complex, sequential tasks | Highly parallel, repetitive tasks |

| Memory | Large cache hierarchy | High-bandwidth memory |

| Control Flow | Complex branching | SIMT (Single Instruction, Multiple Threads) |

GPU Applications:

- Graphics rendering and ray tracing

- Machine learning and deep neural networks

- Scientific simulations (computational fluid dynamics, molecular modeling)

- Cryptocurrency mining

- Video encoding/decoding

Memory Systems

Memory Hierarchy Goals:

- Speed: Fast access for frequently used data

- Capacity: Large storage for all program data

- Cost: Balance between performance and affordability

Primary Memory (Volatile)

Random Access Memory (RAM)

- DRAM (Dynamic RAM): Main memory; needs periodic refresh; slower but denser

- DDR4: 2133-3200 MT/s, 16-64 GB typical

- DDR5: 4800-8400 MT/s, 16-128 GB typical

- SRAM (Static RAM): Cache memory; no refresh needed; faster but more expensive

- Used in L1/L2/L3 caches

Memory Access Patterns:

// Demonstrating cache-friendly vs cache-unfriendly access

// Cache-friendly: Sequential access (spatial locality)

void sum_array_row_major(int arr[N][N]) {

for (int i = 0; i < N; i++) {

for (int j = 0; j < N; j++) {

sum += arr[i][j]; // Sequential memory access

}

}

}

// Cache-unfriendly: Column-major in row-major storage

void sum_array_column_major(int arr[N][N]) {

for (int j = 0; j < N; j++) {

for (int i = 0; i < N; i++) {

sum += arr[i][j]; // Jumps across cache lines

}

}

}Secondary Storage (Non-Volatile)

Solid State Drives (SSDs)

- Technology: NAND flash memory

- Speed: 500-7000 MB/s (SATA to NVMe)

- Latency: 50-100 microseconds

- Wear Leveling: Distributes writes to prevent cell degradation

Hard Disk Drives (HDDs)

- Technology: Magnetic storage on rotating platters

- Speed: 100-200 MB/s

- Latency: 5-15 milliseconds (seek time + rotational delay)

- Capacity: Up to 20 TB per drive

Storage Technologies Comparison:

| Technology | Speed | Latency | Cost per GB | Durability | Use Case |

|---|---|---|---|---|---|

| RAM | 20+ GB/s | 50-100 ns | High | Volatile | Active data |

| NVMe SSD | 3-7 GB/s | 50-100 μs | Medium | Limited writes | OS, applications |

| SATA SSD | 500 MB/s | 50-100 μs | Medium-Low | Limited writes | General storage |

| HDD | 100-200 MB/s | 5-15 ms | Low | High | Archival, bulk storage |

System Bus Architecture

The System Bus is a collection of parallel electrical conductors connecting CPU, memory, and I/O devices.

Bus Types:

1. Data Bus

- Carries actual data between components

- Width determines data transfer rate (32-bit, 64-bit)

- Bidirectional

2. Address Bus

- Carries memory addresses

- Width determines addressable memory space

- 32-bit: 4 GB (2³² bytes)

- 64-bit: 16 Exabytes (2⁶⁴ bytes)

- Unidirectional (CPU to memory/devices)

3. Control Bus

- Carries control signals (read, write, interrupt, clock)

- Coordinates bus operations

- Bidirectional

Bus Operation Example:

CPU wants to read memory location 0x1000:

1. CPU places 0x1000 on Address Bus

2. CPU asserts READ control signal on Control Bus

3. Memory responds by placing data at 0x1000 onto Data Bus

4. CPU reads data from Data Bus

5. CPU de-asserts READ signal3. Operating System Architecture

OS Design Principles

Separation of Mechanism and Policy

- Mechanism: How to do something (implementation)

- Policy: What should be done (decision-making)

- Benefit: Flexibility; policies can change without altering mechanisms

Example:

Mechanism: Context switching between processes

Policy: Which process to run next (scheduling algorithm)Optimization for Common Case

- Design decisions should favor frequently occurring scenarios

- Identify workload characteristics and usage patterns

- Balance generality with specialized optimization

Kernel Architectures

1. Monolithic Kernel

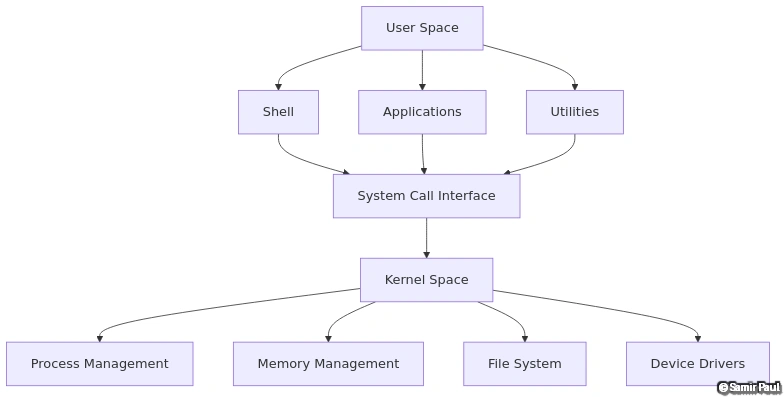

Concept: All OS services run in kernel space with full hardware access.

Structure:

User Space

│

├─ Application 1

├─ Application 2

└─ Application N

────────────── System Call Interface ──────────────

Kernel Space (Single Address Space)

│

├─ Process Management

├─ Memory Management

├─ File System

├─ Device Drivers

├─ Network Stack

└─ Scheduling

────────────── Hardware ──────────────Advantages of Monolithic Kernel:

- Performance: Direct function calls within kernel; no context switching overhead

- Simplicity: Straightforward design and implementation

- Efficiency: Minimal message passing overhead

Disadvantages of Monolithic Kernel:

- Reliability: Bug in any component can crash entire system

- Maintainability: Large, complex codebase difficult to maintain

- Security: Large attack surface; any vulnerability affects whole kernel

Examples: Linux, traditional UNIX, Windows 9x

Linux Kernel Structure:

// Simplified representation of kernel subsystems

struct linux_kernel {

struct process_manager {

struct task_struct *process_list;

struct scheduler *sched;

} pm;

struct memory_manager {

struct page_table *pgd;

struct vm_area *vma_list;

} mm;

struct file_system {

struct super_block *sb;

struct inode_cache *icache;

} fs;

struct network_stack {

struct socket *socket_list;

struct protocol_handler *protocols;

} net;

};2. Microkernel Architecture

Concept: Minimal kernel with essential services only; other services run in user space.

Structure:

User Space

│

├─ File Server

├─ Device Drivers

├─ Network Server

├─ Applications

────────────── IPC (Message Passing) ──────────────

Kernel Space (Minimal)

│

├─ IPC Mechanism

├─ Basic Scheduling

├─ Low-level Memory Management

└─ Hardware Abstraction

────────────── Hardware ──────────────Essential Kernel Services:

- Inter-Process Communication (IPC)

- Basic thread scheduling

- Low-level address space management

- Interrupt handling

Advantages of Microkernel:

- Fault Isolation: Service crash doesn’t affect kernel

- Modularity: Easy to add/remove services

- Security: Smaller trusted computing base (TCB)

- Portability: Hardware-specific code isolated

Disadvantages of Microkernel:

- Performance: IPC overhead for service communication

- Complexity: More complex system structure

- Development Effort: Requires careful IPC design

Examples: MINIX 3, L4 microkernel family, QNX, seL4

Microkernel IPC Example:

// Simplified IPC message passing

struct message {

int src_pid;

int dst_pid;

int msg_type;

char data[1024];

};

// Send message to file server

int read_file(const char *path, char *buffer, size_t size) {

struct message msg;

msg.src_pid = getpid();

msg.dst_pid = FILE_SERVER_PID;

msg.msg_type = MSG_READ_FILE;

strcpy(msg.data, path);

// Send to microkernel IPC

send_message(&msg);

// Wait for reply

receive_message(&msg);

// Copy data from reply

memcpy(buffer, msg.data, size);

return msg.msg_type; // Return value

}3. Hybrid Kernel

Concept: Combines monolithic and microkernel approaches; some services in kernel for performance, others in user space.

Structure:

User Space

│

├─ User-mode Services

├─ Device Drivers (some)

└─ Applications

────────────── Mixed Interface ──────────────

Kernel Space

│

├─ Core Kernel (Microkernel-like)

│ ├─ IPC

│ ├─ Scheduling

│ └─ Low-level MM

│

└─ Integrated Services (Monolithic-like)

├─ File System

├─ Device Drivers (critical)

└─ Network Stack

────────────── Hardware ──────────────Advantages:

- Balances performance and modularity

- Critical services in kernel for speed

- Non-critical services in user space for safety

Examples:

- Windows NT/modern Windows: NT kernel (hybrid)

- macOS XNU: Mach microkernel + BSD monolithic components

XNU (macOS/iOS Kernel) Architecture:

┌─────────────────────────────────────┐

│ User Space Applications │

├─────────────────────────────────────┤

│ System Libraries │

│ (libSystem, Foundation, etc.) │

├─────────────────────────────────────┤

│ XNU Kernel │

│ ┌─────────────────────────────┐ │

│ │ BSD Layer (Monolithic) │ │

│ │ - POSIX compatibility │ │

│ │ - Networking (TCP/IP) │ │

│ │ - VFS (Virtual File System)│ │

│ ├─────────────────────────────┤ │

│ │ Mach Layer (Microkernel) │ │

│ │ - IPC (Mach ports) │ │

│ │ - Virtual Memory │ │

│ │ - Task/Thread management │ │

│ ├─────────────────────────────┤ │

│ │ I/O Kit (C++ drivers) │ │

│ └─────────────────────────────┘ │

├─────────────────────────────────────┤

│ Hardware │

└─────────────────────────────────────┘4. Exokernel

Concept: Expose hardware resources directly to applications; OS provides minimal abstraction.

Philosophy: Applications know best how to manage resources for their specific needs.

Advantages:

- Maximum performance and flexibility

- Application-level resource management

- Eliminates unnecessary abstraction layers

Disadvantages:

- Complex application development

- Limited protection between applications

- Difficult to program

Examples: MIT Exokernel, Aegis

5. Unikernel

Concept: Single address space machine image; application and OS services compiled into single binary.

Structure:

┌──────────────────────────────┐

│ Application Code │

│ + OS Services (LibOS) │

│ (Single Binary) │

├──────────────────────────────┤

│ Hypervisor / Hardware │

└──────────────────────────────┘Advantages:

- Minimal attack surface

- Fast boot times (milliseconds)

- Small memory footprint

- Optimized for specific applications

Disadvantages:

- Lack of dynamic process management

- Limited debugging tools

- Application must be recompiled for changes

Examples: MirageOS (OCaml), IncludeOS (C++), HalVM (Haskell)

Use Cases:

- Cloud microservices

- IoT devices

- Network appliances

System Layers

User Mode vs Kernel Mode

Modern processors provide privilege levels (protection rings) to enforce security and stability.

x86 Protection Rings:

Ring 3 (Lowest Privilege) ─── User Applications

Ring 2 (Device Drivers - rarely used)

Ring 1 (Device Drivers - rarely used)

Ring 0 (Highest Privilege) ─── Operating System KernelARM Exception Levels (ARMv8):

EL0 ─── User Applications

EL1 ─── Operating System Kernel

EL2 ─── Hypervisor

EL3 ─── Secure Monitor (TrustZone)Mode Transition: User programs cannot directly access hardware or privileged instructions. They must request OS services via system calls, which trigger a mode switch.

Mode Switch Process:

1. Application executes syscall instruction (INT 0x80, SYSCALL, SVC)

2. CPU switches from User Mode to Kernel Mode

3. CPU saves user context (registers, program counter)

4. CPU jumps to kernel's system call handler

5. Kernel validates request and executes service

6. Kernel prepares return value

7. CPU restores user context

8. CPU switches back to User Mode

9. Application resumes with resultProtection Benefits:

- Isolation: Faulty applications can’t crash the system

- Security: Malicious code can’t directly manipulate hardware

- Stability: Critical OS data structures protected from corruption

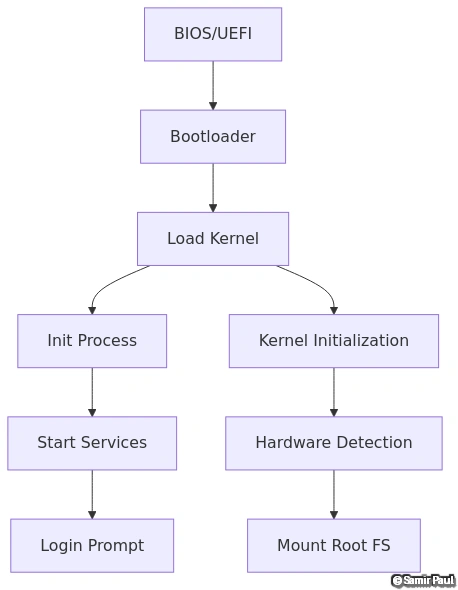

4. Boot Process and System Initialization

x86 Boot Sequence

1. Power-On Self-Test (POST)

Power On → CPU Reset → POST

│

├─ Test CPU registers

├─ Check memory

├─ Initialize chipset

├─ Detect hardware

└─ Display boot screen2. BIOS/UEFI Initialization

Legacy BIOS (Basic Input/Output System):

- Stored in ROM/flash on motherboard

- Executes in 16-bit real mode

- Loads Master Boot Record (MBR) from boot device

MBR Structure (512 bytes):

Offset Size Description

0x000 446 Boot code

0x1BE 16 Partition entry 1

0x1CE 16 Partition entry 2

0x1DE 16 Partition entry 3

0x1EE 16 Partition entry 4

0x1FE 2 Boot signature (0x55AA)Simple MBR Boot Code (x86 Assembly):

; boot.asm - Minimal bootloader

BITS 16 ; 16-bit real mode

ORG 0x7C00 ; BIOS loads us at 0x7C00

start:

; Setup segments

xor ax, ax

mov ds, ax

mov es, ax

mov ss, ax

mov sp, 0x7C00

; Display message

mov si, message

call print_string

; Hang

jmp $

print_string:

lodsb ; Load byte from [SI] into AL

or al, al ; Check if zero (end of string)

jz done

mov ah, 0x0E ; BIOS teletype function

int 0x10 ; Call BIOS video interrupt

jmp print_string

done:

ret

message db 'Booting...', 0x0D, 0x0A, 0

times 510-($-$$) db 0 ; Pad to 510 bytes

dw 0xAA55 ; Boot signatureUEFI (Unified Extensible Firmware Interface):

- Modern replacement for BIOS

- Supports 32-bit and 64-bit modes

- GPT (GUID Partition Table) instead of MBR

- More secure (Secure Boot)

- Includes a boot manager

UEFI Boot Process:

Power On → UEFI Firmware → Boot Manager → Boot Loader → OS Kernel3. Bootloader Execution

GRUB (Grand Unified Bootloader):

Stage 1: Bootstrap code (in MBR or GPT)

↓

Stage 1.5: Filesystem drivers (if needed)

↓

Stage 2: Full GRUB with menu

↓

Kernel Loading: Load kernel image into memory

↓

Kernel Execution: Jump to kernel entry pointMultiboot Specification:

// Multiboot header (must be in first 8KB of kernel)

#define MULTIBOOT_HEADER_MAGIC 0x1BADB002

#define MULTIBOOT_HEADER_FLAGS 0x00000003

.section .multiboot

.align 4

multiboot_header:

.long MULTIBOOT_HEADER_MAGIC

.long MULTIBOOT_HEADER_FLAGS

.long -(MULTIBOOT_HEADER_MAGIC + MULTIBOOT_HEADER_FLAGS)4. Protected Mode Transition

Real mode has limitations:

- Only 1 MB addressable memory

- No memory protection

- 16-bit operations

Entering Protected Mode:

; Disable interrupts

cli

; Load Global Descriptor Table (GDT)

lgdt [gdt_descriptor]

; Set PE (Protection Enable) bit in CR0

mov eax, cr0

or eax, 0x1

mov cr0, eax

; Far jump to load CS with code segment selector

jmp 0x08:protected_mode

[BITS 32]

protected_mode:

; Setup data segments

mov ax, 0x10 ; Data segment selector

mov ds, ax

mov es, ax

mov fs, ax

mov gs, ax

mov ss, ax

; Setup stack

mov esp, 0x90000

; Jump to kernel

call kernel_mainGlobal Descriptor Table (GDT):

struct gdt_entry {

uint16_t limit_low;

uint16_t base_low;

uint8_t base_middle;

uint8_t access;

uint8_t granularity;

uint8_t base_high;

} __attribute__((packed));

struct gdt_ptr {

uint16_t limit;

uint32_t base;

} __attribute__((packed));

struct gdt_entry gdt[5];

void gdt_set_gate(int num, uint32_t base, uint32_t limit, uint8_t access, uint8_t gran) {

gdt[num].base_low = (base & 0xFFFF);

gdt[num].base_middle = (base >> 16) & 0xFF;

gdt[num].base_high = (base >> 24) & 0xFF;

gdt[num].limit_low = (limit & 0xFFFF);

gdt[num].granularity = ((limit >> 16) & 0x0F) | (gran & 0xF0);

gdt[num].access = access;

}

void gdt_install() {

struct gdt_ptr gp;

gp.limit = (sizeof(struct gdt_entry) * 5) - 1;

gp.base = (uint32_t)&gdt;

gdt_set_gate(0, 0, 0, 0, 0); // Null segment

gdt_set_gate(1, 0, 0xFFFFFFFF, 0x9A, 0xCF); // Code segment

gdt_set_gate(2, 0, 0xFFFFFFFF, 0x92, 0xCF); // Data segment

gdt_set_gate(3, 0, 0xFFFFFFFF, 0xFA, 0xCF); // User code

gdt_set_gate(4, 0, 0xFFFFFFFF, 0xF2, 0xCF); // User data

gdt_flush(&gp); // Assembly function to load GDT

}ARM Boot Process

1. ROM Bootloader (Primary Bootloader)

- Hardcoded in SoC ROM

- Minimal functionality

- Loads secondary bootloader from boot media (SD, eMMC, SPI)

2. SPL (Secondary Program Loader) / U-Boot

- Initializes DRAM controller

- Sets up clocks and power management

- Loads main bootloader or kernel

U-Boot Commands:

# Load kernel from SD card

fatload mmc 0:1 ${kernel_addr_r} zImage

# Load device tree

fatload mmc 0:1 ${fdt_addr_r} bcm2710-rpi-3-b.dtb

# Boot kernel

bootz ${kernel_addr_r} - ${fdt_addr_r}3. Device Tree (DTB)

- Hardware description format

- Describes hardware topology, addresses, interrupts

- Passed to kernel during boot

Device Tree Example (Simplified):

/dts-v1/;

/ {

compatible = "raspberrypi,3-model-b", "brcm,bcm2837";

model = "Raspberry Pi 3 Model B";

memory@0 {

device_type = "memory";

reg = <0x00000000 0x40000000>; // 1GB

};

cpus {

#address-cells = <1>;

#size-cells = <0>;

cpu@0 {

device_type = "cpu";

compatible = "arm,cortex-a53";

reg = <0>;

};

// ... more CPUs

};

soc {

uart0: serial@7e201000 {

compatible = "brcm,bcm2835-uart";

reg = <0x7e201000 0x1000>;

interrupts = <2 25>;

};

};

};4. Kernel Initialization

Linux Kernel Boot (ARM64):

1. Decompress kernel (if compressed)

2. Setup initial page tables

3. Enable MMU and caches

4. Parse device tree

5. Initialize memory subsystem

6. Mount initial ramdisk (initramfs)

7. Initialize drivers

8. Start init process (PID 1)Kernel Initialization Deep Dive

Early Boot Process:

// Simplified Linux kernel initialization (kernel/init/main.c)

asmlinkage __visible void __init start_kernel(void) {

char *command_line;

// 1. Setup architecture-specific components

setup_arch(&command_line);

// 2. Initialize memory management

mm_init();

// 3. Setup scheduler

sched_init();

// 4. Initialize IRQs and timers

init_IRQ();

init_timers();

// 5. Initialize console for early output

console_init();

// 6. Initialize rest of kernel subsystems

vfs_caches_init();

signals_init();

// 7. Rest of initialization (drivers, etc.)

rest_init();

}

static noinline void __init rest_init(void) {

// Create kernel threads

kernel_thread(kernel_init, NULL, CLONE_FS);

kernel_thread(kthreadd, NULL, CLONE_FS | CLONE_FILES);

// CPU idle loop

cpu_startup_entry(CPUHP_ONLINE);

}

static int __init kernel_init(void *unused) {

// Try to run init program

if (!try_to_run_init_process("/sbin/init") ||

!try_to_run_init_process("/etc/init") ||

!try_to_run_init_process("/bin/init") ||

!try_to_run_init_process("/bin/sh"))

return 0;

panic("No working init found.");

}5. Process Management

Process Concept

A process is an instance of a program in execution. It represents the dynamic, active entity, as opposed to a program which is a passive, static collection of instructions.

Process Components:

- Program Code (Text Section): Executable instructions

- Program Counter: Current instruction position

- Processor Registers: Register contents

- Stack: Temporary data (function parameters, return addresses, local variables)

- Data Section: Global variables

- Heap: Dynamically allocated memory

Process vs Program:

Program: Passive entity (file on disk containing instructions)

Process: Active entity (program loaded in memory and executing)

Example:

- "notepad.exe" is a program

- When you run it, each window is a separate processProcess Memory Layout

High Memory (0xFFFFFFFF)

┌─────────────────────┐

│ Kernel Space │ ← OS kernel (1GB on 32-bit Linux)

├─────────────────────┤

│ Stack │ ← Grows downward

│ ↓ │ Function calls, local variables

│ │

│ (Unused) │

│ │

│ ↑ │

│ Heap │ ← Grows upward

├─────────────────────┤ Dynamic allocation (malloc/new)

│ BSS Segment │ ← Uninitialized data

├─────────────────────┤

│ Data Segment │ ← Initialized data

├─────────────────────┤

│ Text Segment │ ← Program code (read-only)

└─────────────────────┘

Low Memory (0x00000000)Example:

#include <stdlib.h>

int global_initialized = 42; // Data segment

int global_uninitialized; // BSS segment

const char *text_data = "Hello"; // Text/Read-only segment

void function() {

int stack_var = 10; // Stack

int *heap_var = malloc(sizeof(int)); // Heap

*heap_var = 20;

free(heap_var);

}Process Control Block (PCB)

The PCB (also called Task Control Block or TCB) is the kernel data structure representing a process.

PCB Contents:

struct task_struct { // Linux representation (simplified)

// 1. Process State

volatile long state; // Running, Ready, Waiting, etc.

// 2. Process ID

pid_t pid; // Process ID

pid_t tgid; // Thread group ID

struct task_struct *parent; // Parent process

// 3. CPU Scheduling Information

int prio; // Priority

int static_prio; // Static priority

int normal_prio; // Normal priority

unsigned int policy; // Scheduling policy

struct sched_entity se; // Scheduling entity

// 4. Memory Management

struct mm_struct *mm; // Memory descriptor

// 5. File Descriptors

struct files_struct *files; // Open file information

// 6. CPU Context

struct thread_struct thread; // CPU registers, PC, SP

// 7. Signal Handling

struct signal_struct *signal;

// 8. Process Times

u64 utime; // User mode time

u64 stime; // Kernel mode time

// 9. Process Credentials

uid_t uid, euid; // User ID, Effective UID

gid_t gid, egid; // Group ID, Effective GID

// 10. List Management

struct list_head tasks; // Process list

struct list_head sibling; // Sibling list

struct list_head children; // Children list

};Process States

A process transitions through various states during its lifetime.

Five-State Model:

┌─────────┐

│ New │ ← Process being created

└────┬────┘

│

↓

┌─────────┐ Scheduler ┌─────────────┐

┌───→│ Ready │ ← ─ ─ ─ ─ ─ ─ ─ ─ ┤ Running │

│ └────┬────┘ Dispatch └──────┬──────┘

│ │ │

│ └────────────────────────────────┘

│ │

│ │ I/O or

│ I/O Complete │ Event Wait

│ ┌─────────────────────────────────┘

│ │

│ ↓

│ ┌─────────┐ ┌─────────────┐

└────┤ Waiting │ │ Terminated │

└─────────┘ └─────────────┘State Descriptions:

- New: Process is being created; PCB allocated but not ready

- Ready: Process is waiting to be assigned to CPU

- Running: Instructions are being executed

- Waiting (Blocked): Process waiting for event (I/O completion, signal)

- Terminated (Zombie): Process finished execution; waiting for parent to read exit status

State Transitions Example (Linux):

// Process states in Linux (linux/sched.h)

#define TASK_RUNNING 0

#define TASK_INTERRUPTIBLE 1 // Can be woken by signals

#define TASK_UNINTERRUPTIBLE 2 // Cannot be interrupted

#define __TASK_STOPPED 4

#define __TASK_TRACED 8

#define EXIT_ZOMBIE 16

#define EXIT_DEAD 32

// State transition example

void state_transition() {

set_current_state(TASK_INTERRUPTIBLE); // Ready → Waiting

schedule(); // Yield CPU

// After wake-up

// Waiting → Ready → Running (when scheduled)

}Process Creation

UNIX: fork() System Call

fork() creates a new process by duplicating the calling process.

Characteristics:

- Child process gets a copy of parent’s address space (Copy-on-Write)

- Child has unique PID

- fork() returns twice: 0 in child, child’s PID in parent

Copy-on-Write Optimization: fork() doesn’t immediately copy all memory! Instead, parent and child share the same pages until one tries to write. This makes fork() extremely fast and memory-efficient, even for large processes.

Fork Example:

#include <stdio.h>

#include <unistd.h>

#include <sys/types.h>

int main() {

pid_t pid;

int x = 10;

printf("Before fork: x = %d\n", x);

pid = fork();

if (pid < 0) {

// Fork failed

fprintf(stderr, "Fork failed\n");

return 1;

} else if (pid == 0) {

// Child process

x = 20;

printf("Child: x = %d, PID = %d\n", x, getpid());

} else {

// Parent process

x = 30;

printf("Parent: x = %d, Child PID = %d\n", x, pid);

}

return 0;

}

/* Output:

Before fork: x = 10

Child: x = 20, PID = 1235

Parent: x = 30, Child PID = 1235

*/exec() Family

exec() replaces the current process image with a new program.

Common exec variants:

execl(): List of argumentsexecv(): Vector (array) of argumentsexecle(): List + environmentexecve(): Vector + environment

Fork + Exec Pattern:

#include <stdio.h>

#include <unistd.h>

#include <sys/wait.h>

int main() {

pid_t pid = fork();

if (pid == 0) {

// Child: Replace with "/bin/ls" program

char *args[] = {"/bin/ls", "-l", "/tmp", NULL};

execv("/bin/ls", args);

// If execv returns, it failed

perror("execv failed");

return 1;

} else {

// Parent: Wait for child to finish

int status;

waitpid(pid, &status, 0);

printf("Child exited with status %d\n", WEXITSTATUS(status));

}

return 0;

}Windows: CreateProcess()

Windows doesn’t use fork(); it creates processes differently.

#include <windows.h>

#include <stdio.h>

int main() {

STARTUPINFO si;

PROCESS_INFORMATION pi;

ZeroMemory(&si, sizeof(si));

si.cb = sizeof(si);

ZeroMemory(&pi, sizeof(pi));

// Create child process

if (!CreateProcess(

NULL, // Application name

"notepad.exe", // Command line

NULL, // Process attributes

NULL, // Thread attributes

FALSE, // Inherit handles

0, // Creation flags

NULL, // Environment

NULL, // Current directory

&si, // Startup info

&pi // Process info

)) {

printf("CreateProcess failed (%d)\n", GetLastError());

return 1;

}

// Wait for child

WaitForSingleObject(pi.hProcess, INFINITE);

// Close handles

CloseHandle(pi.hProcess);

CloseHandle(pi.hThread);

return 0;

}Process Termination

Normal Termination:

- Program executes last statement

- Calls

exit()system call - Returns from

main()

Abnormal Termination:

- Fatal error (division by zero, segmentation fault)

- Killed by another process (kill command)

- Parent terminates (orphan processes)

Exit Status:

#include <stdlib.h>

int main() {

// exit(0) - Success

// exit(1) - General error

// exit(127) - Command not found

if (error_occurred) {

exit(EXIT_FAILURE);

}

return EXIT_SUCCESS;

}Zombie and Orphan Processes:

Zombie Process:

- Process terminated but PCB still exists

- Waiting for parent to read exit status via

wait() - Shows as

<defunct>inpsoutput

Zombie Processes: If a parent never calls wait(), the child’s PCB remains allocated even after the child terminates. This wastes kernel resources. Long-running parent processes should always wait() for their children or ignore SIGCHLD to automatically reap zombies.

// Creating a zombie

#include <unistd.h>

int main() {

if (fork() == 0) {

// Child exits immediately

exit(0);

}

// Parent doesn't call wait()

sleep(60); // Child becomes zombie for 60 seconds

return 0;

}Orphan Process:

- Parent terminates before child

- Child is adopted by init (PID 1) or systemd

- Init/systemd periodically calls

wait()to reap orphans

Process Wait Example:

#include <sys/wait.h>

#include <stdio.h>

#include <unistd.h>

int main() {

pid_t pid = fork();

if (pid == 0) {

printf("Child process\n");

sleep(2);

exit(42); // Exit with status 42

} else {

int status;

pid_t child = wait(&status); // Wait for any child

if (WIFEXITED(status)) {

printf("Child %d exited with status %d\n",

child, WEXITSTATUS(status));

}

// Alternative: waitpid() for specific child

// waitpid(pid, &status, 0);

}

return 0;

}Context Switching

Context Switch: Saving the state of current process and loading the state of the next process.

Context Switch Steps:

1. Save current process state (registers, PC, SP) to PCB

2. Update PCB (state, timing information)

3. Move PCB to appropriate queue (ready, waiting)

4. Select next process (scheduling algorithm)

5. Update new process PCB (state = RUNNING)

6. Restore new process context from PCB

7. Switch memory management (page tables)

8. Resume execution of new processContext Switch Overhead - A Hidden Cost:

- Direct Cost: Time to save/restore registers (~microseconds)

- Indirect Cost: Cache flush → huge performance hit! Modern CPUs with large caches can lose 1-10% performance per context switch

- TLB Flush: Virtual memory translation caches invalidated

- Pipeline Flush: CPU instruction pipeline emptied

Practical Impact: High context switch rates (>1000/sec) cause significant throughput degradation even though each individual switch is “fast”

Minimizing Context Switches:

- Use threads instead of processes (shared address space)

- Increase time slices (but hurts responsiveness)

- CPU affinity (keep process on same CPU)

- Reduce I/O operations (batch I/O requests)

Linux Context Switch Implementation (Simplified):

// arch/x86/include/asm/switch_to.h

#define switch_to(prev, next, last) do { \

/* Save previous task's context */ \

asm volatile( \

"pushfl\n\t" /* Save flags */ \

"pushl %%ebp\n\t" /* Save base pointer */\

"movl %%esp,%[prev_sp]\n\t" /* Save stack ptr */ \

"movl %[next_sp],%%esp\n\t" /* Load next stack*/\

"movl $1f,%[prev_ip]\n\t" /* Save return IP */ \

"pushl %[next_ip]\n\t" /* Push next IP */ \

"jmp __switch_to\n\t" /* Context switch */ \

"1:\t" \

"popl %%ebp\n\t" /* Restore base ptr */ \

"popfl\n" /* Restore flags */ \

: [prev_sp] "=m" (prev->thread.sp), \

[prev_ip] "=m" (prev->thread.ip) \

: [next_sp] "m" (next->thread.sp), \

[next_ip] "m" (next->thread.ip), \

[prev] "a" (prev), \

[next] "d" (next) \

: "memory", "cc" \

); \

} while (0)Process Hierarchies

UNIX Process Tree:

init (PID 1) or systemd

├─ sshd

│ └─ bash (login shell)

│ └─ vim

├─ cron

│ └─ backup_script

├─ Apache httpd

│ ├─ httpd (worker 1)

│ ├─ httpd (worker 2)

│ └─ httpd (worker N)

└─ X Window System

└─ window_manager

├─ terminal_emulator

└─ browserViewing Process Tree (Linux):

$ pstree

init─┬─acpid

├─cron

├─dbus-daemon

├─dhclient

├─login───bash───pstree

├─rsyslogd───3*[{rsyslogd}]

├─sshd───sshd───bash

└─udevd───2*[udevd]

$ ps -ef # Full process list

$ ps aux # BSD-style format6. Thread Management and Concurrency

Thread Concept

A thread is the smallest unit of execution within a process. Unlike processes which have separate address spaces, threads share the same memory space and resources, making them lightweight and efficient.

Key Differences: Process vs Thread

| Aspect | Process | Thread |

|---|---|---|

| Memory Space | Separate address space | Shared address space |

| Resource Overhead | High (memory, file descriptors) | Low (shares with process) |

| Creation Time | Slow (milliseconds) | Fast (microseconds) |

| Context Switch | Expensive (page tables, cache misses) | Cheaper (same page tables) |

| Communication | IPC mechanisms needed | Direct memory access |

| Isolation | High (protected) | Low (shared memory) |

Thread Components:

Each thread has its own:

├── Program Counter (PC)

├── Stack (local variables, function calls)

├── CPU Registers

├── Thread-local storage

└── Stack pointer

Shared by all threads in process:

├── Process memory (heap, code, globals)

├── File descriptors

├── Signal handlers

└── Current working directoryUser-Level vs Kernel-Level Threads

User-Level Threads

- Managed by thread library (not OS)

- No kernel involvement

- Fast context switching

- Cannot utilize multiprocessors

- If one thread blocks, entire process blocked

// User-level thread example (POSIX Threads)

#include <pthread.h>

#include <stdio.h>

void* thread_function(void *arg) {

printf("Thread ID: %ld\n", (long)pthread_self());

return NULL;

}

int main() {

pthread_t thread;

// Create user-level thread

pthread_create(&thread, NULL, thread_function, NULL);

// Wait for thread completion

pthread_join(thread, NULL);

return 0;

}Kernel-Level Threads

- Managed by OS kernel

- Full kernel support

- Can run on multiple processors

- Slower context switching (syscall overhead)

- Better for I/O-bound operations

Thread Models

1. One-to-One Model

User Thread 1 ↔ Kernel Thread 1

User Thread 2 ↔ Kernel Thread 2

User Thread 3 ↔ Kernel Thread 3

Advantages:

- Full parallelism

- Blocking doesn't stop other threads

- OS sees all threads

Disadvantages:

- High kernel overhead

- Limited number of threads

- Resource consumption2. Many-to-One Model

User Thread 1 ┐

User Thread 2 ├→ Kernel Thread 1

User Thread 3 ┘

Advantages:

- Low overhead

- Fast context switching

- No syscalls needed

Disadvantages:

- No true parallelism

- One blocking thread blocks all

- Cannot use multiprocessors3. Many-to-Many Model

User Thread 1 ┐ ┌→ Kernel Thread 1

User Thread 2 ├─→ ├→ Kernel Thread 2

User Thread 3 ┤ └→ Kernel Thread 3

User Thread 4 ┘

Best of both worlds:

- Multiple kernel threads

- Efficient thread management

- True parallelism with low overheadThread Synchronization

Critical Section: Code segment that accesses shared resources

Mutual Exclusion Problem: Ensuring only one thread enters critical section at a time

Race Condition Danger: Without synchronization, the statement shared_counter++ (which consists of 3 operations: READ → INCREMENT → WRITE) can interleave between threads, causing lost updates. For example:

Thread 1: READ (10) → INCREMENT (11)

Thread 2: READ (10) → INCREMENT (11) ← Oops! Both read 10

Thread 1: WRITE (11)

Thread 2: WRITE (11) ← Expected 12, got 11!Solution 1: Mutex (Mutual Exclusion Lock)

#include <pthread.h>

#include <stdio.h>

pthread_mutex_t mutex = PTHREAD_MUTEX_INITIALIZER;

int shared_counter = 0;

void* increment_counter(void *arg) {

for (int i = 0; i < 1000000; i++) {

pthread_mutex_lock(&mutex);

shared_counter++; // Critical section

pthread_mutex_unlock(&mutex);

}

return NULL;

}

int main() {

pthread_t threads[2];

pthread_create(&threads[0], NULL, increment_counter, NULL);

pthread_create(&threads[1], NULL, increment_counter, NULL);

pthread_join(threads[0], NULL);

pthread_join(threads[1], NULL);

printf("Counter value: %d (expected: 2000000)\n", shared_counter);

pthread_mutex_destroy(&mutex);

return 0;

}Solution 2: Semaphore

#include <semaphore.h>

#include <pthread.h>

#include <stdio.h>

sem_t semaphore;

int shared_resource = 0;

void* worker(void *arg) {

for (int i = 0; i < 5; i++) {

sem_wait(&semaphore); // Acquire (P operation)

printf("Thread %ld accessing resource\n",

(long)pthread_self());

shared_resource++;

sem_post(&semaphore); // Release (V operation)

}

return NULL;

}

int main() {

sem_init(&semaphore, 0, 1); // Binary semaphore

pthread_t threads[3];

for (int i = 0; i < 3; i++) {

pthread_create(&threads[i], NULL, worker, NULL);

}

for (int i = 0; i < 3; i++) {

pthread_join(threads[i], NULL);

}

sem_destroy(&semaphore);

return 0;

}Solution 3: Condition Variables

#include <pthread.h>

#include <stdio.h>

pthread_mutex_t mutex = PTHREAD_MUTEX_INITIALIZER;

pthread_cond_t condition = PTHREAD_COND_INITIALIZER;

int ready = 0;

void* waiter_thread(void *arg) {

pthread_mutex_lock(&mutex);

while (!ready) { // Always use while, not if

printf("Waiting...\n");

pthread_cond_wait(&condition, &mutex);

}

printf("Proceeding!\n");

pthread_mutex_unlock(&mutex);

return NULL;

}

void* signaler_thread(void *arg) {

sleep(2);

pthread_mutex_lock(&mutex);

ready = 1;

pthread_cond_signal(&condition); // Wake one waiter

pthread_mutex_unlock(&mutex);

return NULL;

}

int main() {

pthread_t t1, t2;

pthread_create(&t1, NULL, waiter_thread, NULL);

pthread_create(&t2, NULL, signaler_thread, NULL);

pthread_join(t1, NULL);

pthread_join(t2, NULL);

return 0;

}Producer-Consumer Problem

Scenario: Producer generates data, Consumer uses data, bounded buffer between them

Classic Synchronization Problem: The Producer-Consumer problem demonstrates critical synchronization concepts:

- Mutual Exclusion: Only one thread accesses buffer at a time

- Resource Counting: Track empty/full slots

- Deadlock Avoidance: Proper ordering of semaphore operations

- Real-world Applications: Message queues, thread pools, I/O buffering

#include <pthread.h>

#include <stdio.h>

#include <stdlib.h>

#include <semaphore.h>

#define BUFFER_SIZE 5

int buffer[BUFFER_SIZE];

int in = 0, out = 0;

sem_t empty; // Counts empty slots

sem_t full; // Counts full slots

pthread_mutex_t mutex;

void* producer(void *arg) {

for (int i = 0; i < 10; i++) {

sem_wait(&empty); // Wait for empty slot

pthread_mutex_lock(&mutex);

buffer[in] = i * 10;

printf("Producer: Added %d at %d\n", buffer[in], in);

in = (in + 1) % BUFFER_SIZE;

pthread_mutex_unlock(&mutex);

sem_post(&full); // Signal full slot

}

return NULL;

}

void* consumer(void *arg) {

for (int i = 0; i < 10; i++) {

sem_wait(&full); // Wait for full slot

pthread_mutex_lock(&mutex);

int value = buffer[out];

printf("Consumer: Consumed %d from %d\n", value, out);

out = (out + 1) % BUFFER_SIZE;

pthread_mutex_unlock(&mutex);

sem_post(&empty); // Signal empty slot

}

return NULL;

}

int main() {

pthread_t prod, cons;

sem_init(&empty, 0, BUFFER_SIZE);

sem_init(&full, 0, 0);

pthread_mutex_init(&mutex, NULL);

pthread_create(&prod, NULL, producer, NULL);

pthread_create(&cons, NULL, consumer, NULL);

pthread_join(prod, NULL);

pthread_join(cons, NULL);

sem_destroy(&empty);

sem_destroy(&full);

pthread_mutex_destroy(&mutex);

return 0;

}7. CPU Scheduling Algorithms

Scheduling Criteria

Maximize:

- CPU Utilization: Keep CPU as busy as possible (40-90% is reasonable)

- Throughput: Number of processes completed per unit time

Minimize:

- Turnaround Time: Time from submission to completion

- Waiting Time: Time spent in ready queue

- Response Time: Time from submission to first response

Scheduling Algorithms (Complete Analysis)

1. First-Come-First-Served (FCFS)

Algorithm: Process executes for entire duration, no preemption

// FCFS Scheduler Implementation

struct process {

int pid;

int arrival_time;

int burst_time;

int start_time;

int end_time;

};

void fcfs_schedule(struct process procs[], int n) {

int current_time = 0;

double total_turnaround = 0, total_wait = 0;

for (int i = 0; i < n; i++) {

procs[i].start_time = current_time;

procs[i].end_time = current_time + procs[i].burst_time;

current_time = procs[i].end_time;

int turnaround = procs[i].end_time - procs[i].arrival_time;

int wait = procs[i].start_time - procs[i].arrival_time;

total_turnaround += turnaround;

total_wait += wait;

printf("P%d: Wait=%d, Turnaround=%d\n",

procs[i].pid, wait, turnaround);

}

printf("Average Wait Time: %.2f\n", total_wait / n);

printf("Average Turnaround: %.2f\n", total_turnaround / n);

}

/*

Example: Processes with burst times [24, 3, 3]

FCFS: P1_____________________ P2__ P3__

0 24 27 30

Waiting times: P1=0, P2=24, P3=27

Average wait: 17ms

*/Convoy Effect Problem: Short processes become stuck behind long ones in the queue, causing massive average waiting time degradation. In this example, P2 and P3 must wait 24 and 27 time units respectively just for P1 to finish - this is highly inefficient! FCFS is rarely used in practice due to this critical limitation.

2. Shortest Job First (SJF)

Non-preemptive: Each process runs completely

void sjf_schedule(struct process procs[], int n) {

// Sort by burst time (ascending)

for (int i = 0; i < n - 1; i++) {

for (int j = 0; j < n - i - 1; j++) {

if (procs[j].burst_time > procs[j+1].burst_time) {

struct process temp = procs[j];

procs[j] = procs[j+1];

procs[j+1] = temp;

}

}

}

fcfs_schedule(procs, n); // Then apply FCFS on sorted list

}

/*

Example: Processes [24, 3, 3]

Sorted: [3, 3, 24]

Gantt Chart:

P2_ P3_ P1_____________________

0 3 6 30

Average wait: (0 + 3 + 6) / 3 = 3ms (vs 17ms for FCFS!)

*/SJF Optimization: Notice the dramatic improvement! Average waiting time dropped from 17ms (FCFS) to just 3ms (SJF) - an 82% reduction! SJF is provably optimal for minimizing average waiting time when all processes arrive simultaneously.

Starvation Risk: Long processes may never execute if short processes keep arriving. Not practical in real systems without aging mechanisms.

Preemptive SJF (Shortest Remaining Time First - SRTF)

void srtf_schedule(struct process procs[], int n) {

int remaining[n];

for (int i = 0; i < n; i++) {

remaining[i] = procs[i].burst_time;

}

int time = 0;

int total_processes = n;

while (total_processes > 0) {

// Find process with shortest remaining time

int min_index = -1;

int min_remaining = INT_MAX;

for (int i = 0; i < n; i++) {

if (remaining[i] > 0 && remaining[i] < min_remaining &&

procs[i].arrival_time <= time) {

min_remaining = remaining[i];

min_index = i;

}

}

if (min_index == -1) {

time++;

continue;

}

// Execute for 1 time unit

remaining[min_index]--;

time++;

if (remaining[min_index] == 0) {

total_processes--;

}

}

}3. Round Robin (RR) Scheduling

Overview: Round Robin is a preemptive scheduling algorithm that assigns a fixed time slice (quantum) to each process in the ready queue. It’s designed specifically for time-sharing systems where fairness and responsiveness are critical.

Key Concept: Time Quantum (Time Slice) - Fixed time period allocated to each process

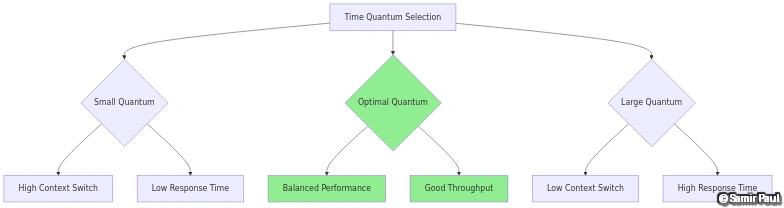

Time Quantum Selection is Critical:

- Too small (1-10ms): Excessive context switching overhead → CPU thrashing

- Too large (100ms+): Behaves like FCFS → poor response time

- Sweet spot: 80% of CPU bursts should be shorter than the quantum

- Linux default: ~100ms (configurable via

sched_latency_ns)

Characteristics:

- Preemptive: CPU is preempted after time quantum expires

- Fair: All processes get equal CPU time in rotation

- FIFO Queue: Processes are arranged in circular queue

- No Starvation: Every process eventually gets CPU time

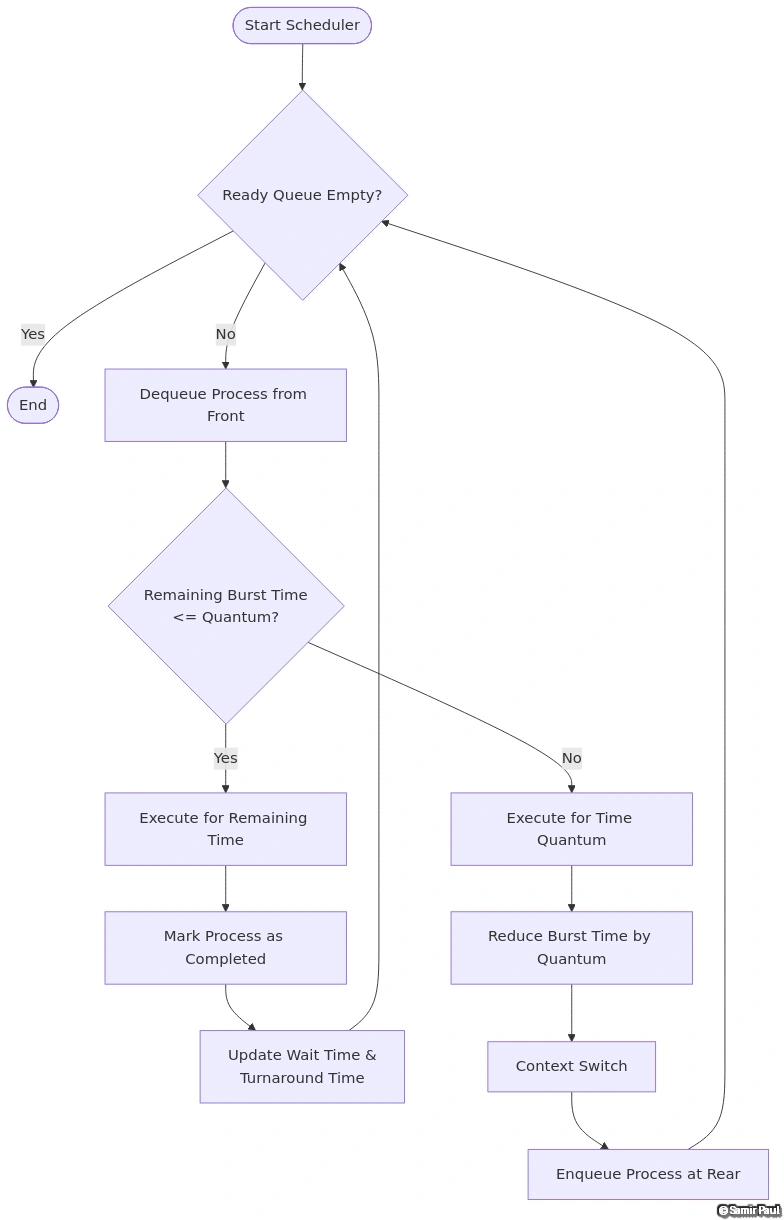

Round Robin Algorithm Flow

Figure 1: Round Robin scheduling algorithm flowchart illustrating the process dequeue, execution, and re-queue mechanism with time quantum management.

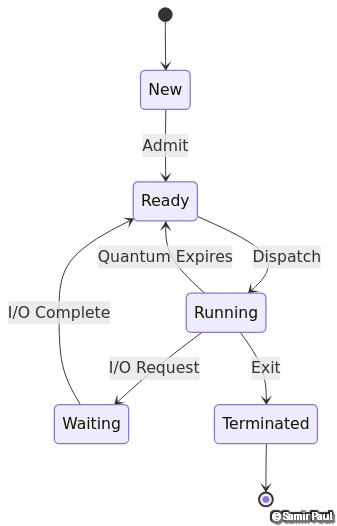

Process State Transitions in Round Robin

Figure 2: Process state transitions in Round Robin scheduling with time quantum expiration triggering preemption and re-queueing of processes.

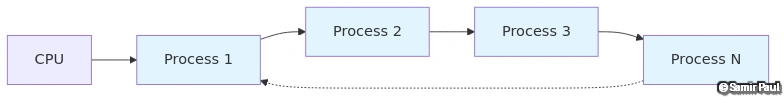

Circular Queue Mechanism

Figure 3: Circular ready queue mechanism showing how processes are dispatched to CPU and re-enqueued when time quantum expires before completion.

Detailed Implementation with Comments

#include <stdio.h>

#include <stdlib.h>

// Process structure with all necessary attributes

typedef struct {

int pid; // Process ID

int arrival_time; // When process arrives in ready queue

int burst_time; // Total CPU time needed

int remaining_time; // Remaining CPU time

int completion_time; // When process completes

int waiting_time; // Total time spent waiting

int turnaround_time; // completion_time - arrival_time

int response_time; // First time process gets CPU - arrival_time

int first_response; // Flag to track first CPU allocation

} Process;

// Circular queue for ready processes

typedef struct {

Process *processes[100];

int front;

int rear;

int count;

} ReadyQueue;

// Initialize queue

void init_queue(ReadyQueue *q) {

q->front = 0;

q->rear = -1;

q->count = 0;

}

// Check if queue is empty

int is_empty(ReadyQueue *q) {

return q->count == 0;

}

// Enqueue process at rear

void enqueue(ReadyQueue *q, Process *p) {

q->rear = (q->rear + 1) % 100; // Circular increment

q->processes[q->rear] = p;

q->count++;

}

// Dequeue process from front

Process* dequeue(ReadyQueue *q) {

if (is_empty(q)) return NULL;

Process *p = q->processes[q->front];

q->front = (q->front + 1) % 100; // Circular increment

q->count--;

return p;

}

/**

* Round Robin Scheduling Algorithm

*

* Parameters:

* processes: Array of processes to schedule

* n: Number of processes

* time_quantum: Fixed time slice for each process

*

* Algorithm Steps:

* 1. Create ready queue and add all processes

* 2. While queue is not empty:

* a. Dequeue process from front

* b. If remaining_time <= quantum: Execute completely

* c. Else: Execute for quantum, then re-enqueue

* 3. Calculate metrics (waiting time, turnaround time)

*/

void round_robin_schedule(Process processes[], int n, int time_quantum) {

ReadyQueue ready_queue;

init_queue(&ready_queue);

// Add all processes to ready queue (assume all arrive at time 0)

for (int i = 0; i < n; i++) {

processes[i].remaining_time = processes[i].burst_time;

processes[i].first_response = 0; // Not yet responded

enqueue(&ready_queue, &processes[i]);

}

int current_time = 0;

int completed = 0;

printf("\n=== Round Robin Scheduling (Quantum = %d) ===\n\n", time_quantum);

printf("Time\tProcess\tAction\n");

printf("----\t-------\t------\n");

// Main scheduling loop

while (!is_empty(&ready_queue)) {

// Dequeue next process from front of ready queue

Process *current = dequeue(&ready_queue);

// Record response time (first time process gets CPU)

if (current->first_response == 0) {

current->response_time = current_time - current->arrival_time;

current->first_response = 1;

}

// Determine execution time for this time slice

int execution_time;

if (current->remaining_time <= time_quantum) {

// Process will complete within this quantum

execution_time = current->remaining_time;

current->remaining_time = 0;

printf("%d\tP%d\tExecute for %dms (COMPLETE)\n",

current_time, current->pid, execution_time);

current_time += execution_time;

current->completion_time = current_time;

current->turnaround_time = current->completion_time - current->arrival_time;

current->waiting_time = current->turnaround_time - current->burst_time;

completed++;

} else {

// Process needs more time - will be preempted

execution_time = time_quantum;

current->remaining_time -= time_quantum;

printf("%d\tP%d\tExecute for %dms (PREEMPT, %dms remaining)\n",

current_time, current->pid, execution_time, current->remaining_time);

current_time += execution_time;

// Context switch: Re-enqueue at rear of ready queue

enqueue(&ready_queue, current);

}

}

printf("\n=== Process Completion Summary ===\n");

printf("PID\tBurst\tCompletion\tTurnaround\tWaiting\tResponse\n");

printf("---\t-----\t----------\t----------\t-------\t--------\n");

float avg_turnaround = 0, avg_waiting = 0, avg_response = 0;

for (int i = 0; i < n; i++) {

printf("P%d\t%d\t%d\t\t%d\t\t%d\t%d\n",

processes[i].pid,

processes[i].burst_time,

processes[i].completion_time,

processes[i].turnaround_time,

processes[i].waiting_time,

processes[i].response_time);

avg_turnaround += processes[i].turnaround_time;

avg_waiting += processes[i].waiting_time;

avg_response += processes[i].response_time;

}

printf("\n=== Performance Metrics ===\n");

printf("Average Turnaround Time: %.2f ms\n", avg_turnaround / n);

printf("Average Waiting Time: %.2f ms\n", avg_waiting / n);

printf("Average Response Time: %.2f ms\n", avg_response / n);

printf("Number of Context Switches: %d\n", current_time / time_quantum);

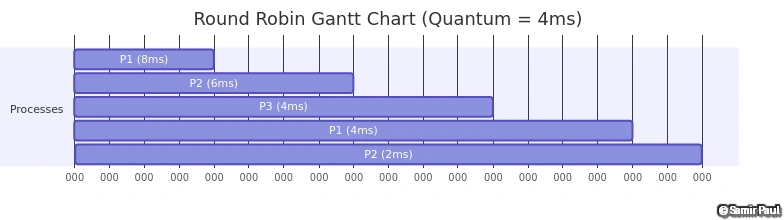

}Gantt Chart Example

Example Problem: Schedule 3 processes with Round Robin (Quantum = 4ms)

| Process | Burst Time |

|---|---|

| P1 | 24 ms |

| P2 | 3 ms |

| P3 | 3 ms |

Execution Timeline:

Figure 4: Gantt chart visualization of Round Robin scheduling with quantum=4ms, demonstrating process interleaving and completion times.

Step-by-Step Execution:

Time 0-4: P1 executes (24-4=20ms remaining) → Re-queue

Time 4-7: P2 executes (3-3=0ms) → Complete ✓

Time 7-10: P3 executes (3-3=0ms) → Complete ✓

Time 10-14: P1 executes (20-4=16ms remaining) → Re-queue

Time 14-18: P1 executes (16-4=12ms remaining) → Re-queue

Time 18-22: P1 executes (12-4=8ms remaining) → Re-queue

Time 22-26: P1 executes (8-4=4ms remaining) → Re-queue

Time 26-30: P1 executes (4-4=0ms) → Complete ✓Metrics Calculation:

- P1: Turnaround = 30-0 = 30ms, Waiting = 30-24 = 6ms, Response = 0ms

- P2: Turnaround = 7-0 = 7ms, Waiting = 7-3 = 4ms, Response = 4ms

- P3: Turnaround = 10-0 = 10ms, Waiting = 10-3 = 7ms, Response = 7ms

Impact of Time Quantum

Figure 5: Comparative analysis of time quantum values demonstrating the balance between response time, context switch overhead, and throughput in Round Robin scheduling.

Quantum Selection Guidelines:

- Rule of Thumb: 80% of CPU bursts should be shorter than quantum

- Typical Values: 10-100 milliseconds

- Linux Default: ~100ms (adjustable via

sched_latency_ns) - Context Switch Cost: Usually 1-10 microseconds

Quantum Comparison Example

// Demonstrate effect of different quantum values

void compare_quantum_values() {

Process procs[] = {

{1, 0, 53, 0, 0, 0, 0, 0, 0},

{2, 0, 17, 0, 0, 0, 0, 0, 0},

{3, 0, 68, 0, 0, 0, 0, 0, 0},

{4, 0, 24, 0, 0, 0, 0, 0, 0}

};

int quantum_values[] = {1, 5, 10, 20, 100};

printf("Quantum\tAvg Wait\tAvg Response\tContext Switches\n");

printf("-------\t--------\t------------\t----------------\n");

for (int i = 0; i < 5; i++) {

Process test_procs[4];

memcpy(test_procs, procs, sizeof(procs));

int quantum = quantum_values[i];

round_robin_schedule(test_procs, 4, quantum);

// Results would show:

// Q=1: High wait time, excellent response, ~162 switches

// Q=5: Good wait time, good response, ~32 switches

// Q=10: Good wait time, good response, ~16 switches

// Q=20: Moderate wait, moderate response, ~8 switches

// Q=100: High wait time, poor response, ~2 switches (like FCFS)

}

}Advantages and Disadvantages

Advantages: ✓ Fair CPU allocation: Every process gets equal time ✓ No starvation: All processes eventually execute ✓ Good response time: Especially for interactive systems ✓ Simple to implement: Easy to understand and code ✓ Preemptive: Can handle time-sharing systems

Disadvantages: ✗ Context switch overhead: More switches = more overhead ✗ Higher average turnaround: Compared to SJF ✗ Performance depends on quantum: Hard to choose optimal value ✗ Not ideal for batch systems: Throughput may suffer ✗ Penalizes CPU-bound processes: Long processes wait repeatedly

Real-World Usage

Linux Time-Sharing Scheduler (Pre-CFS):

// Simplified Linux Round Robin concept (older versions)

#define DEF_TIMESLICE (100 * HZ / 1000) // 100ms default

void schedule() {

struct task_struct *next;

// Select next task from run queue

next = pick_next_task_rr();

if (next->time_slice == 0) {

// Quantum expired - move to expired array

next->time_slice = DEF_TIMESLICE;

enqueue_task_expired(next);

} else {

// Continue with current process

context_switch(prev, next);

}

}Modern Applications:

- Interactive Systems: Desktop environments, mobile OS

- Time-Sharing Systems: Multi-user Unix/Linux systems

- Real-Time Systems: Combined with priority scheduling

- Virtual Machines: CPU time sharing among VMs

Practice Problems

Problem 1: Four processes arrive at time 0 with burst times P1=10, P2=1, P3=2, P4=1. Calculate average waiting time for quantum=1.

Solution:

Time 0-1: P1 → Re-queue (9 remaining)

Time 1-2: P2 → Complete

Time 2-4: P3 → Complete

Time 4-5: P4 → Complete

Time 5-6: P1 → Re-queue (8 remaining)

... continues ...

Time 12-13: P1 → Complete

Waiting Times: P1=(13-10)=3, P2=(2-1)=1, P3=(4-2)=2, P4=(5-1)=4

Average = (3+1+2+4)/4 = 2.5msProblem 2: Why is quantum=1 inefficient even though it provides best response time?

Answer: Context switch overhead dominates. If context switch takes 0.1ms and quantum is 1ms, system spends ~9% of time just switching contexts rather than executing processes.

4. Priority Scheduling

void priority_schedule(struct process procs[], int n) {

// Sort by priority (lower number = higher priority)

for (int i = 0; i < n - 1; i++) {

for (int j = 0; j < n - i - 1; j++) {

if (procs[j].priority > procs[j+1].priority) {

struct process temp = procs[j];

procs[j] = procs[j+1];

procs[j+1] = temp;

}

}

}

// Apply FCFS on priority-sorted list

fcfs_schedule(procs, n);

}

/*

Priority Inversion Problem:

- Low-priority task holds resource needed by high-priority task

- High-priority task waits for low-priority task

- Solution: Priority inheritance (boost lock holder's priority)

*/5. Linux CFS (Completely Fair Scheduler)

Concept: Each process gets fair share of CPU based on nice value

// Simplified CFS concept

typedef struct {

long vruntime; // Virtual runtime

long weight; // Priority weight

} cfs_task;

void cfs_update_runtime(cfs_task *task, long delta_exec) {

// Nice value determines weight:

// weight = 1024 / (1.25 ^ nice_value)

task->vruntime += (delta_exec * 1024) / task->weight;

}

/*

Red-Black Tree maintains tasks by vruntime:

P2 (vruntime=10)

/ \

P1 P3

(v=5) (v=15)

Selection: Always pick leftmost (smallest vruntime)

This ensures fairness while minimizing context switches

*/Context Switching Overhead

Costs of Context Switching:

Direct Cost: ~1-10 microseconds

- Save/restore registers

- Switch address space (if different process)

Indirect Cost: ~milliseconds

- Cache misses (cold cache)

- TLB misses

- Increased memory bandwidth

Example:

- Context switch: 10 μs

- CPU stall for cache miss: 100 ns

- 100+ misses per switch = 10 μs + 10 μs = 20 μs visible cost8. Synchronization Primitives

Race Conditions and Critical Sections

Race Condition: Multiple threads/processes access shared data, result depends on timing

// Classic race condition example

int counter = 0;

// Without synchronization (WRONG!)

void increment_unsafe() {

counter++; // Not atomic! Translates to:

// 1. Load counter into register

// 2. Increment register

// 3. Store register back to counter

}

// With synchronization (CORRECT)

pthread_mutex_t lock = PTHREAD_MUTEX_INITIALIZER;

void increment_safe() {

pthread_mutex_lock(&lock);

counter++; // Now atomic

pthread_mutex_unlock(&lock);

}

/*

Without locks, with 1000 increments per thread (2 threads):

Expected: 2000

Actual: Random (often 1200, 1500, etc.)

Reason: Interleaved execution:

Thread 1: Load (0) Increment (1) ...

Thread 2: Load (0) Increment (1) Store (1) ...

Thread 1: ... Store (1)

Result: Both stored 1, lost an increment

*/Semaphores Detailed

Binary Semaphore (value: 0 or 1)

#include <semaphore.h>

sem_t mutex;

sem_init(&mutex, 0, 1); // Initial value = 1

// Usage

sem_wait(&mutex); // P() operation - decrement

// Critical section

sem_post(&mutex); // V() operation - increment

Counting Semaphore (value: 0 to N)

sem_t available_resources;

sem_init(&available_resources, 0, 5); // 5 resources available

void* worker(void *arg) {

sem_wait(&available_resources); // Acquire resource

// Use resource

printf("Using resource\n");

sleep(1);

sem_post(&available_resources); // Release resource

return NULL;

}

/*

Example with 3 threads and 2 resources:

Thread 1: sem_wait() → success (available=1)

Thread 2: sem_wait() → success (available=0)

Thread 3: sem_wait() → blocks (available=0)

Thread 1: sem_post() → (available=1), wakes Thread 3

*/Readers-Writers Problem

Requirement: Multiple readers can access resource simultaneously, but writers need exclusive access

#include <pthread.h>

#include <semaphore.h>

#include <stdio.h>

int read_count = 0;

int shared_data = 0;

pthread_mutex_t read_count_lock = PTHREAD_MUTEX_INITIALIZER;

sem_t write_lock;

void* reader(void *arg) {

while (1) {

// Entry section

pthread_mutex_lock(&read_count_lock);

read_count++;

if (read_count == 1) {

sem_wait(&write_lock); // First reader blocks writers

}

pthread_mutex_unlock(&read_count_lock);

// Critical section (read)

printf("Reader %ld: %d\n", (long)pthread_self(), shared_data);

// Exit section

pthread_mutex_lock(&read_count_lock);

read_count--;

if (read_count == 0) {

sem_post(&write_lock); // Last reader allows writers

}

pthread_mutex_unlock(&read_count_lock);

}

return NULL;

}

void* writer(void *arg) {

while (1) {

sem_wait(&write_lock); // Exclusive access

// Critical section (write)

shared_data++;

printf("Writer %ld: Updated to %d\n",

(long)pthread_self(), shared_data);

sem_post(&write_lock);

}

return NULL;

}Atomic Operations and Memory Barriers

#include <stdatomic.h>

#include <stdint.h>

// Atomic variable

atomic_int counter = ATOMIC_VAR_INIT(0);

void increment_atomic() {

atomic_fetch_add(&counter, 1); // Guaranteed atomic

}

// Memory barriers

void process_data(int *data) {

*data = 42;

atomic_thread_fence(memory_order_release); // Release barrier

// All writes before this point visible to other threads

}

void read_data(int *data) {

atomic_thread_fence(memory_order_acquire); // Acquire barrier

// All reads after this point see changes made before

int value = *data;

}

/*

Memory Ordering Options:

- memory_order_relaxed: No synchronization

- memory_order_release: Release semantics

- memory_order_acquire: Acquire semantics

- memory_order_seq_cst: Sequential consistency (default, most expensive)

*/Lock-Free Data Structures

#include <stdatomic.h>

// Lock-free counter

typedef struct {

atomic_long value;

} LockFreeCounter;

void increment(LockFreeCounter *counter) {

atomic_fetch_add(&counter->value, 1);

}

// Lock-free stack using Compare-And-Swap (CAS)

typedef struct Node {

int data;

struct Node *next;

} Node;

typedef struct {

atomic_ptr_t top;

} LockFreeStack;

void push(LockFreeStack *stack, int value) {

Node *new_node = malloc(sizeof(Node));

new_node->data = value;

Node *old_top;

do {

old_top = atomic_load(&stack->top);

new_node->next = old_top;

} while (!atomic_compare_exchange_strong(&stack->top,

&old_top,

new_node));

}

/*

CAS (Compare-And-Swap):

- Atomically compare value at address with expected

- If equal, store new value

- Return whether operation succeeded

- No explicit locks needed

- Wait-free for many scenarios

*/9. Deadlock Handling

9.1 Understanding Deadlocks

A deadlock is a situation where a set of processes are blocked because each process is holding a resource and waiting for another resource held by another process in the set.

Coffman Conditions (Necessary Conditions for Deadlock)

For a deadlock to occur, all four conditions must hold simultaneously:

- Mutual Exclusion: At least one resource must be held in a non-shareable mode

- Hold and Wait: A process must be holding at least one resource and waiting for additional resources

- No Preemption: Resources cannot be forcibly removed from processes

- Circular Wait: A circular chain of processes exists where each process holds resources needed by the next

/*

* Example: Deadlock Scenario

* Process P1 holds Resource R1, needs R2

* Process P2 holds Resource R2, needs R1

*/

#include <pthread.h>

#include <stdio.h>

#include <unistd.h>

pthread_mutex_t resource1 = PTHREAD_MUTEX_INITIALIZER;

pthread_mutex_t resource2 = PTHREAD_MUTEX_INITIALIZER;

void* process1(void *arg) {

printf("P1: Acquiring Resource 1...\n");

pthread_mutex_lock(&resource1);

printf("P1: Got Resource 1\n");

sleep(1); // Simulate some work

printf("P1: Acquiring Resource 2...\n");

pthread_mutex_lock(&resource2); // DEADLOCK! P2 holds R2

printf("P1: Got Resource 2\n");

// Critical section

printf("P1: Working with both resources\n");

pthread_mutex_unlock(&resource2);

pthread_mutex_unlock(&resource1);

return NULL;

}

void* process2(void *arg) {

printf("P2: Acquiring Resource 2...\n");

pthread_mutex_lock(&resource2);

printf("P2: Got Resource 2\n");

sleep(1); // Simulate some work

printf("P2: Acquiring Resource 1...\n");

pthread_mutex_lock(&resource1); // DEADLOCK! P1 holds R1

printf("P2: Got Resource 1\n");

// Critical section

printf("P2: Working with both resources\n");

pthread_mutex_unlock(&resource1);

pthread_mutex_unlock(&resource2);

return NULL;

}

int main() {

pthread_t t1, t2;

pthread_create(&t1, NULL, process1, NULL);

pthread_create(&t2, NULL, process2, NULL);

pthread_join(t1, NULL);

pthread_join(t2, NULL);

return 0;

}9.2 Deadlock Prevention

Strategy: Ensure at least one Coffman condition cannot hold.

9.2.1 Breaking Mutual Exclusion

- Make resources shareable (not always possible)

- Use read-write locks for read-heavy workloads

#include <pthread.h>

pthread_rwlock_t shared_resource = PTHREAD_RWLOCK_INITIALIZER;

void* reader(void *arg) {

pthread_rwlock_rdlock(&shared_resource); // Multiple readers OK

// Read data

printf("Reader %ld reading\n", (long)pthread_self());

pthread_rwlock_unlock(&shared_resource);

return NULL;

}

void* writer(void *arg) {

pthread_rwlock_wrlock(&shared_resource); // Exclusive write

// Write data

printf("Writer %ld writing\n", (long)pthread_self());

pthread_rwlock_unlock(&shared_resource);

return NULL;

}9.2.2 Breaking Hold and Wait

- Require processes to request all resources at once

#include <pthread.h>

#include <stdbool.h>

typedef struct {

pthread_mutex_t *locks;

int num_locks;

pthread_mutex_t allocation_lock;

} ResourceManager;

// Acquire all resources atomically

bool acquire_all_resources(ResourceManager *rm, int *resource_ids, int count) {

pthread_mutex_lock(&rm->allocation_lock);

// Try to acquire all locks

for (int i = 0; i < count; i++) {

if (pthread_mutex_trylock(&rm->locks[resource_ids[i]]) != 0) {

// Failed to acquire, release all acquired locks

for (int j = 0; j < i; j++) {

pthread_mutex_unlock(&rm->locks[resource_ids[j]]);

}

pthread_mutex_unlock(&rm->allocation_lock);

return false;

}

}

pthread_mutex_unlock(&rm->allocation_lock);

return true;

}9.2.3 Breaking No Preemption

- Allow resources to be preempted (forcibly removed)

- Save process state and restore later

// Conceptual example - actual implementation is OS-specific

void preemptable_resource_request(Process *p, Resource *r) {

if (!try_acquire(r)) {

// Release all currently held resources

release_all_resources(p);

// Save process state

save_state(p);

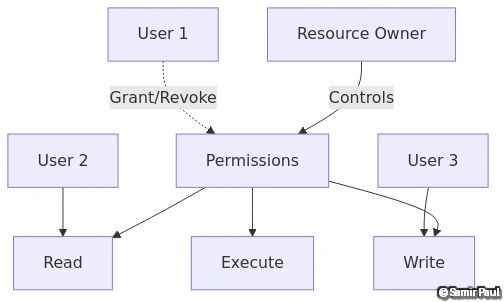

// Add to waiting queue