System Design Notes

A comprehensive guide to designing large-scale distributed systems

1. Introduction to System Design

System design is the process of defining the architecture, components, modules, interfaces, and data for a system to satisfy specified requirements. In the context of software engineering, it involves making high-level decisions about how to build scalable, reliable, and maintainable systems.

Why System Design Matters

Modern applications serve millions or even billions of users simultaneously. These systems must be:

- Scalable: Handle growing amounts of work

- Reliable: Continue functioning under failures

- Available: Accessible when users need them

- Maintainable: Easy to update and modify

- Performant: Respond quickly to user requests

- Cost-effective: Operate within reasonable budgets

The Journey Ahead

This guide takes you through a comprehensive exploration of system design, from fundamental concepts to complex real-world implementations. Each section builds upon previous knowledge, creating a complete understanding of how modern distributed systems work.

Learning Approach

Throughout this guide, we’ll use:

- Visual diagrams (in Mermaid format) to illustrate architectures

- Real-world examples from companies like Netflix, Uber, and Twitter

- Code examples in Python to demonstrate concepts

- Trade-off analyses to understand decision-making

- Practical exercises to reinforce learning

2. System Design Fundamentals

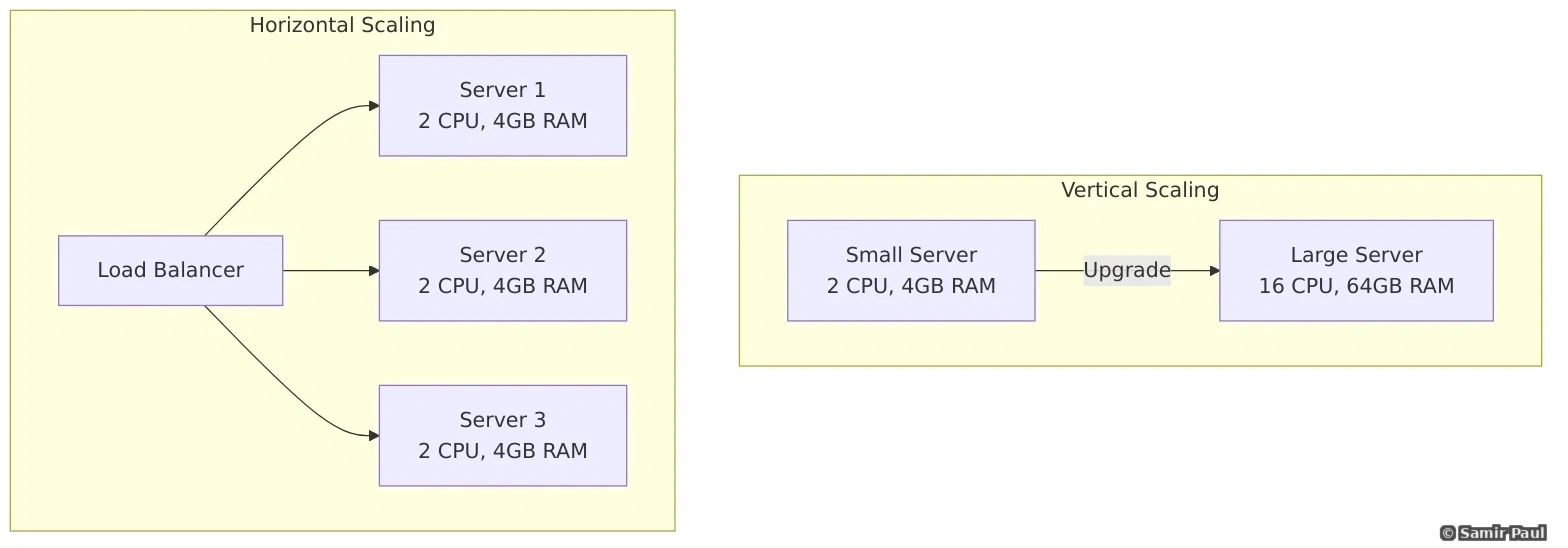

2.1 Scalability

Scalability is the ability of a system to handle increased load by adding resources. There are two primary approaches:

Vertical Scaling (Scale Up)

Adding more power to existing machines (CPU, RAM, disk).

Advantages:

- Simple implementation

- No code changes required

- Maintains data consistency easily

Disadvantages:

- Hardware limits (you can’t add infinite resources)

- Single point of failure

- Expensive at high end

- Requires downtime for upgrades

Horizontal Scaling (Scale Out)

Adding more machines to distribute the load.

Advantages:

- No theoretical limit to scaling

- Better fault tolerance

- More cost-effective

- Can use commodity hardware

Disadvantages:

- Application complexity increases

- Data consistency challenges

- Network overhead

- Requires load balancing

2.2 Performance vs Scalability

These concepts are often confused but are distinctly different:

Performance Problem: System is slow for a single user

- Solution: Optimize algorithms, add indexes, use caching

Scalability Problem: System performs well for one user but degrades under load

- Solution: Add servers, implement load balancing, distribute data

2.3 Latency vs Throughput

Latency: Time to perform a single operation

- Measured in milliseconds (ms)

- Example: Database query takes 50ms

Throughput: Number of operations per time unit

- Measured in requests per second (RPS) or queries per second (QPS)

- Example: System handles 10,000 requests/second

Important Insight: Low latency doesn’t always mean high throughput!

2.4 Key Latency Numbers Every Engineer Should Know

Understanding these numbers helps make informed design decisions:

| Operation | Latency | Relative Scale |

|---|---|---|

| L1 cache reference | 0.5 ns | 1x |

| Branch mispredict | 5 ns | 10x |

| L2 cache reference | 7 ns | 14x |

| Mutex lock/unlock | 100 ns | 200x |

| Main memory reference | 100 ns | 200x |

| Compress 1KB with Snappy | 10,000 ns (10 µs) | 20,000x |

| Send 1KB over 1 Gbps network | 10,000 ns (10 µs) | 20,000x |

| Read 1 MB sequentially from memory | 250,000 ns (250 µs) | 500,000x |

| Round trip within same datacenter | 500,000 ns (500 µs) | 1,000,000x |

| Read 1 MB sequentially from SSD | 1,000,000 ns (1 ms) | 2,000,000x |

| Disk seek | 10,000,000 ns (10 ms) | 20,000,000x |

| Read 1 MB sequentially from disk | 30,000,000 ns (30 ms) | 60,000,000x |

| Send packet CA→Netherlands→CA | 150,000,000 ns (150 ms) | 300,000,000x |

- Memory is fast, disk is slow

- SSD is 20x faster than traditional disk

- Network calls within datacenter are relatively fast

- Cross-continent network calls are expensive

- Always prefer sequential reads over random seeks

2.5 Availability

Availability is the percentage of time a system is operational and accessible.

Availability Calculation:

Availability = (Total Time - Downtime) / Total Time × 100%Standard Availability Tiers (The Nines):

| Availability | Downtime per Year | Downtime per Month | Downtime per Week |

|---|---|---|---|

| 99% (two nines) | 3.65 days | 7.31 hours | 1.68 hours |

| 99.9% (three nines) | 8.77 hours | 43.83 minutes | 10.08 minutes |

| 99.99% (four nines) | 52.60 minutes | 4.38 minutes | 1.01 minutes |

| 99.999% (five nines) | 5.26 minutes | 26.30 seconds | 6.05 seconds |

| 99.9999% (six nines) | 31.56 seconds | 2.63 seconds | 0.61 seconds |

Achieving High Availability:

Eliminate Single Points of Failure (SPOF)

- Redundant components

- Failover mechanisms

- Multi-region deployment

Implement Health Checks

- Monitor service health

- Automatic failure detection

- Quick recovery mechanisms

Use Load Balancers

- Distribute traffic

- Route around failures

- Prevent server overload

3. Back-of-the-Envelope Estimation

Being able to quickly estimate system requirements is crucial for system design. This section covers the estimation techniques used in interviews and real-world planning.

3.1 Power of Two

Understand data volume units:

| Power | Exact Value | Approximate Value | Short Name |

|---|---|---|---|

| 10 | 1,024 | 1 thousand | 1 KB |

| 20 | 1,048,576 | 1 million | 1 MB |

| 30 | 1,073,741,824 | 1 billion | 1 GB |

| 40 | 1,099,511,627,776 | 1 trillion | 1 TB |

| 50 | 1,125,899,906,842,624 | 1 quadrillion | 1 PB |

3.2 Common Performance Numbers

- QPS (Queries Per Second) for web server: 1000 - 5000

- Database connections pool size: 50 - 100 per instance

- Average web page size: 1-2 MB

- Video streaming bitrate: 1-5 Mbps for 1080p

- Mobile app API call: 5-10 calls per user session

3.3 Example Estimation: Twitter-Like System

Given Requirements:

- 300 million monthly active users (MAU)

- 50% use Twitter daily

- Average user posts 2 tweets per day

- 10% of tweets contain media

- Data retained for 5 years

Calculations:

Daily Active Users (DAU):

DAU = 300M × 50% = 150MTweet Traffic:

Tweets per day = 150M × 2 = 300M

Tweets per second (average) = 300M / 86400 ≈ 3,500 tweets/second

Peak QPS = 3,500 × 2 = 7,000 tweets/second (assuming 2x peak factor)Storage Estimation:

Average tweet size:

- Tweet text: 280 chars × 2 bytes = 560 bytes

- Metadata (ID, timestamp, user_id, etc.): 200 bytes

- Total per tweet: ~800 bytes ≈ 1 KB

Media tweets (10%):

- Image average: 500 KB

- Total per media tweet: 500 KB + 1 KB ≈ 500 KB

Daily storage:

- Text tweets: 270M × 1 KB = 270 GB

- Media tweets: 30M × 500 KB = 15 TB

- Total per day = 15.27 TB

5-year storage:

15.27 TB/day × 365 days × 5 years ≈ 27.9 PBBandwidth Estimation:

Ingress (upload):

15.27 TB/day ÷ 86,400 seconds ≈ 177 MB/second

Egress (assume each tweet viewed 10 times):

177 MB/s × 10 = 1.77 GB/secondMemory for Cache (80-20 rule):

20% of tweets generate 80% of traffic

Daily cache: 15.27 TB × 0.2 = 3 TB4. The System Design Interview Framework

A systematic approach to solving any system design problem.

4.1 The 4-Step Framework

Step 1: Understand the Problem and Establish Design Scope (3-10 minutes)

Ask clarifying questions:

- Who are the users?

- How many users?

- What features are essential?

- What is the scale we’re designing for?

- What is the expected traffic pattern?

- What technologies does the company use?

Step 2: Propose High-Level Design and Get Buy-In (10-15 minutes)

Key actions:

- Draw initial architecture diagram

- Identify major components

- Explain data flow

- Get feedback from interviewer

Step 3: Design Deep Dive (10-25 minutes)

Focus areas:

- Dig into 2-3 components based on interviewer interest

- Discuss trade-offs

- Address bottlenecks

- Consider edge cases

Step 4: Wrap Up (3-5 minutes)

Final touches:

- Identify system bottlenecks

- Discuss potential improvements

- Recap design decisions

- Mention monitoring and operations

4.2 Key Principles

Start Simple, Then Iterate

- Begin with a basic design

- Add complexity incrementally

- Justify each addition

Focus on What Matters

- Prioritize based on requirements

- Don’t over-engineer

- Keep the user experience in mind

Think About Trade-offs

- No perfect solution exists

- Discuss pros and cons

- Choose based on requirements

Use Real Numbers

- Apply back-of-the-envelope calculations

- Validate your assumptions

- Show quantitative reasoning

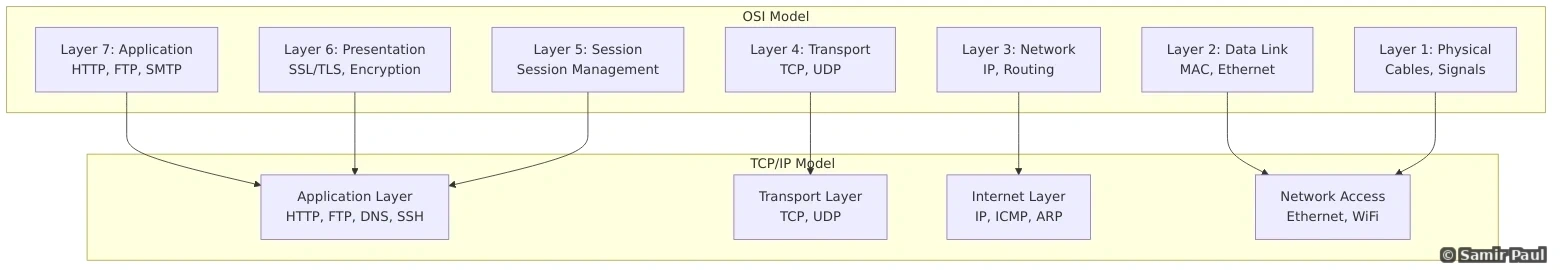

5. Networking and Communication

5.1 The OSI Model and TCP/IP

5.2 HTTP and HTTPS

HTTP (Hypertext Transfer Protocol) is the foundation of data communication on the web.

Common HTTP Methods:

| Method | Purpose | Idempotent | Safe |

|---|---|---|---|

| GET | Retrieve data | Yes | Yes |

| POST | Create resource | No | No |

| PUT | Update/Replace resource | Yes | No |

| PATCH | Partial update | No | No |

| DELETE | Remove resource | Yes | No |

| HEAD | Get headers only | Yes | Yes |

| OPTIONS | Query methods | Yes | Yes |

HTTP Status Codes:

1xx: Informational

100 Continue

101 Switching Protocols

2xx: Success

200 OK

201 Created

202 Accepted

204 No Content

3xx: Redirection

301 Moved Permanently

302 Found (Temporary Redirect)

304 Not Modified

307 Temporary Redirect

308 Permanent Redirect

4xx: Client Errors

400 Bad Request

401 Unauthorized

403 Forbidden

404 Not Found

409 Conflict

429 Too Many Requests

5xx: Server Errors

500 Internal Server Error

502 Bad Gateway

503 Service Unavailable

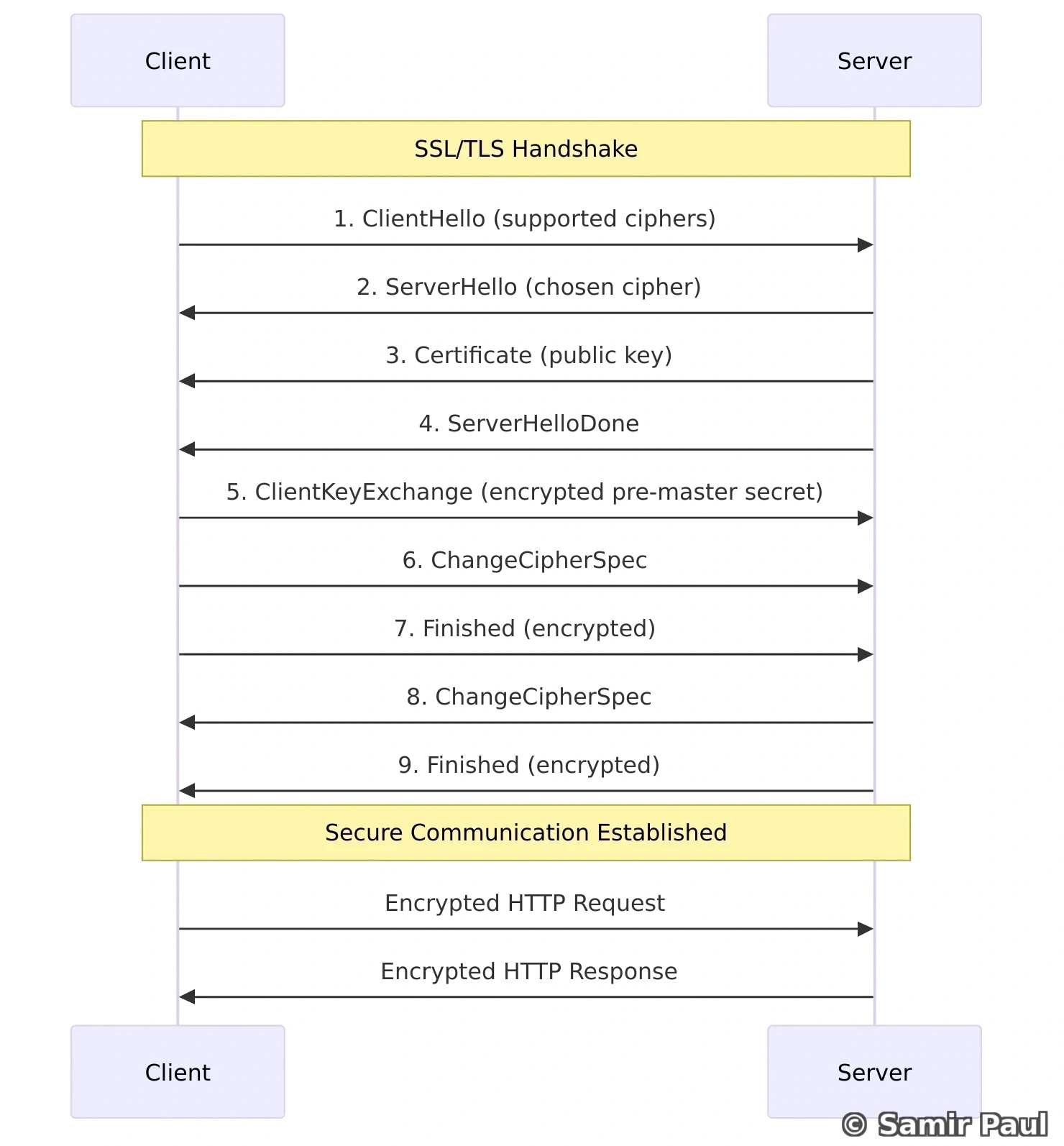

504 Gateway TimeoutHTTPS = HTTP + SSL/TLS

HTTPS adds encryption and authentication through SSL/TLS:

5.3 REST vs GraphQL vs gRPC vs WebSockets

REST (Representational State Transfer)

Characteristics:

- Resource-based (nouns in URLs)

- Stateless

- Uses standard HTTP methods

- Returns JSON or XML

Example:

GET /api/users/123

GET /api/users/123/posts

POST /api/users/123/posts

PUT /api/users/123

DELETE /api/users/123Pros:

- Simple and widely understood

- Cacheable

- Good tooling support

Cons:

- Over-fetching or under-fetching data

- Multiple round trips for related data

- Versioning can be challenging

GraphQL

Characteristics:

- Client specifies exactly what data it needs

- Single endpoint

- Strongly typed schema

- Real-time updates via subscriptions

Example:

query {

user(id: "123") {

name

email

posts(limit: 10) {

title

createdAt

}

}

}Pros:

- No over-fetching/under-fetching

- Single request for complex data

- Self-documenting API

Cons:

- More complex backend

- Caching is harder

- Potential for expensive queries

gRPC (Google Remote Procedure Call)

Characteristics:

- Uses Protocol Buffers (binary format)

- HTTP/2 based

- Strongly typed

- Bi-directional streaming

Example (protobuf):

service UserService {

rpc GetUser (UserRequest) returns (UserResponse);

rpc ListUsers (ListUsersRequest) returns (stream UserResponse);

}

message UserRequest {

string user_id = 1;

}

message UserResponse {

string user_id = 1;

string name = 2;

string email = 3;

}Pros:

- Very fast (binary protocol)

- Streaming support

- Strong typing

Cons:

- Not browser-friendly

- Less human-readable

- Steeper learning curve

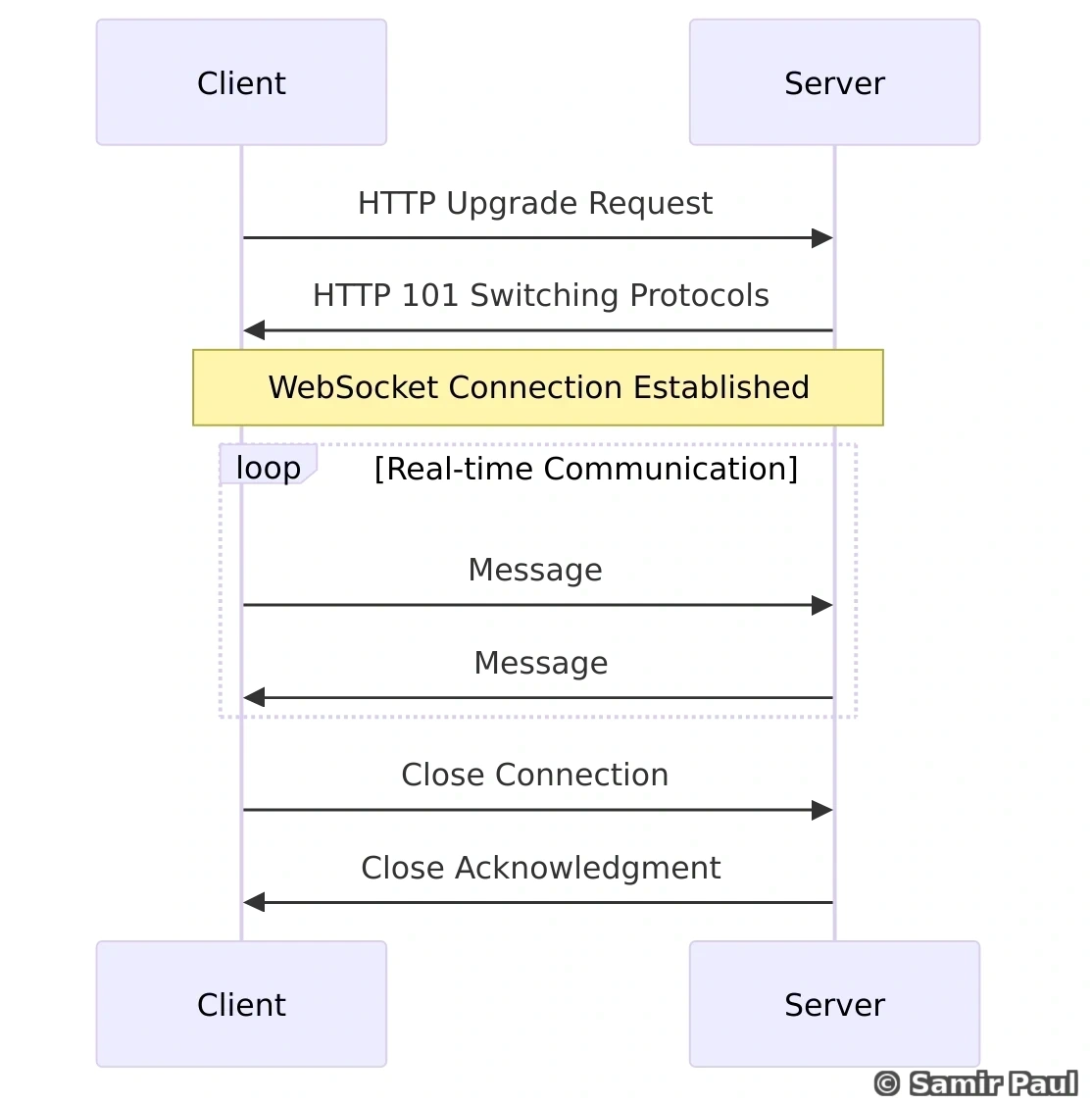

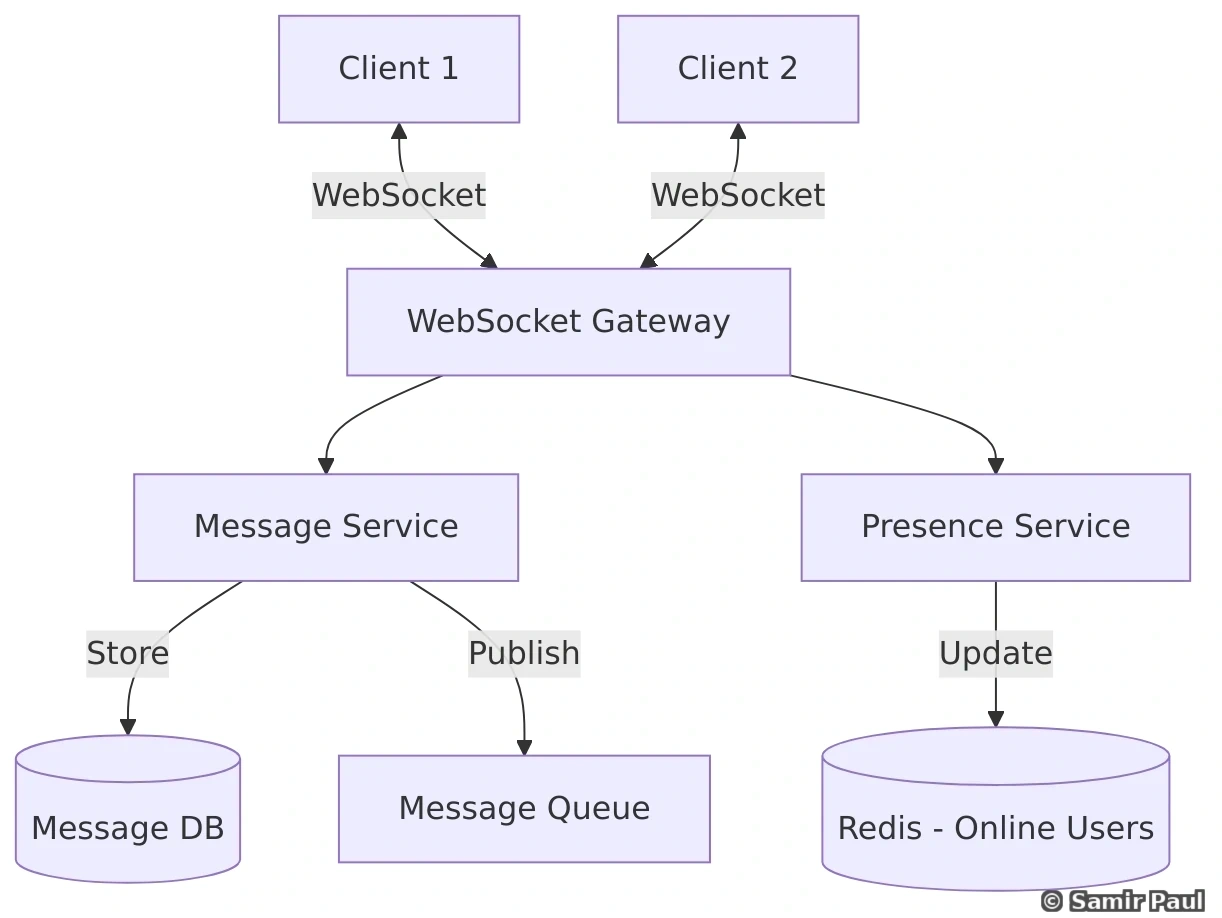

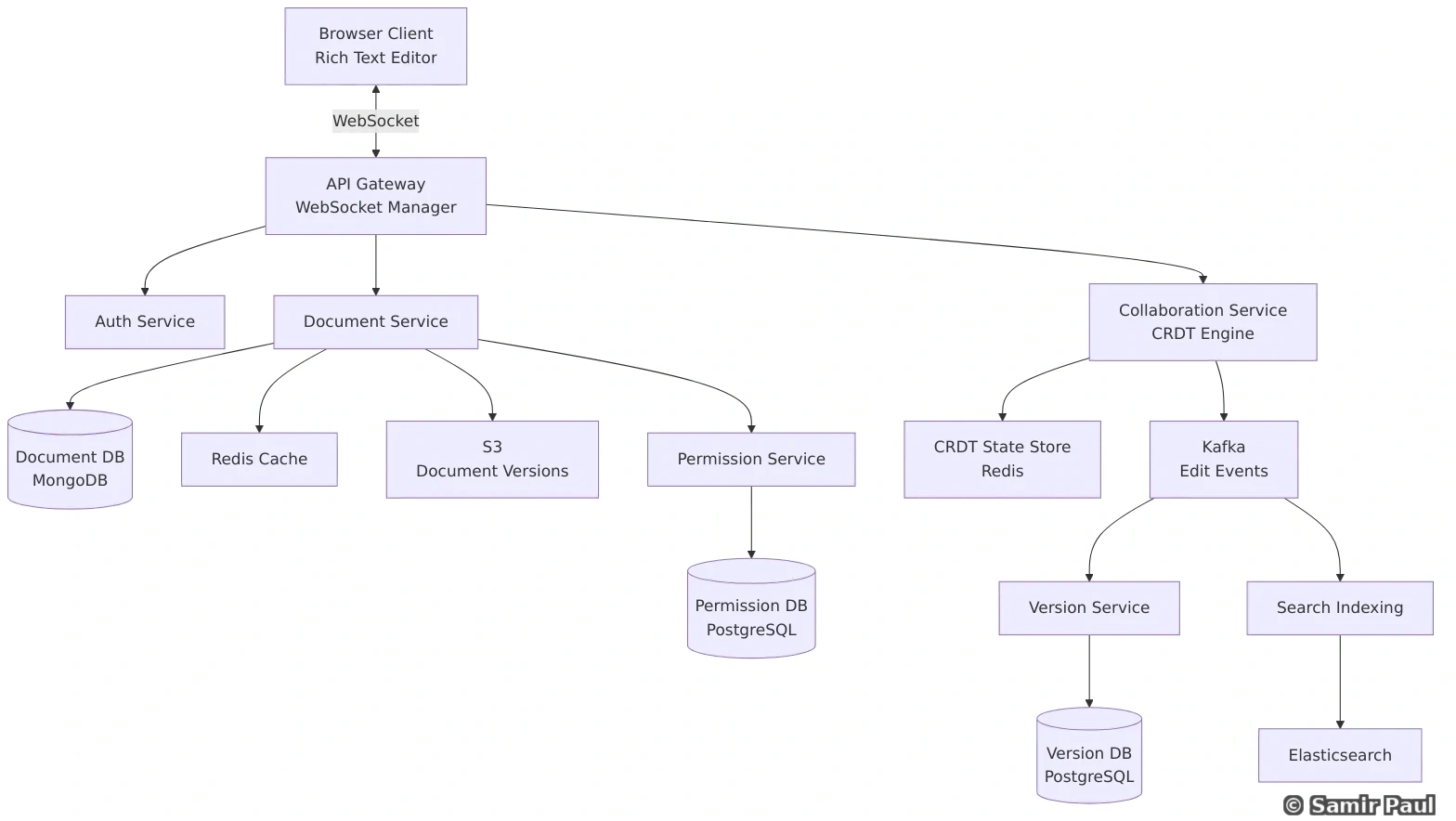

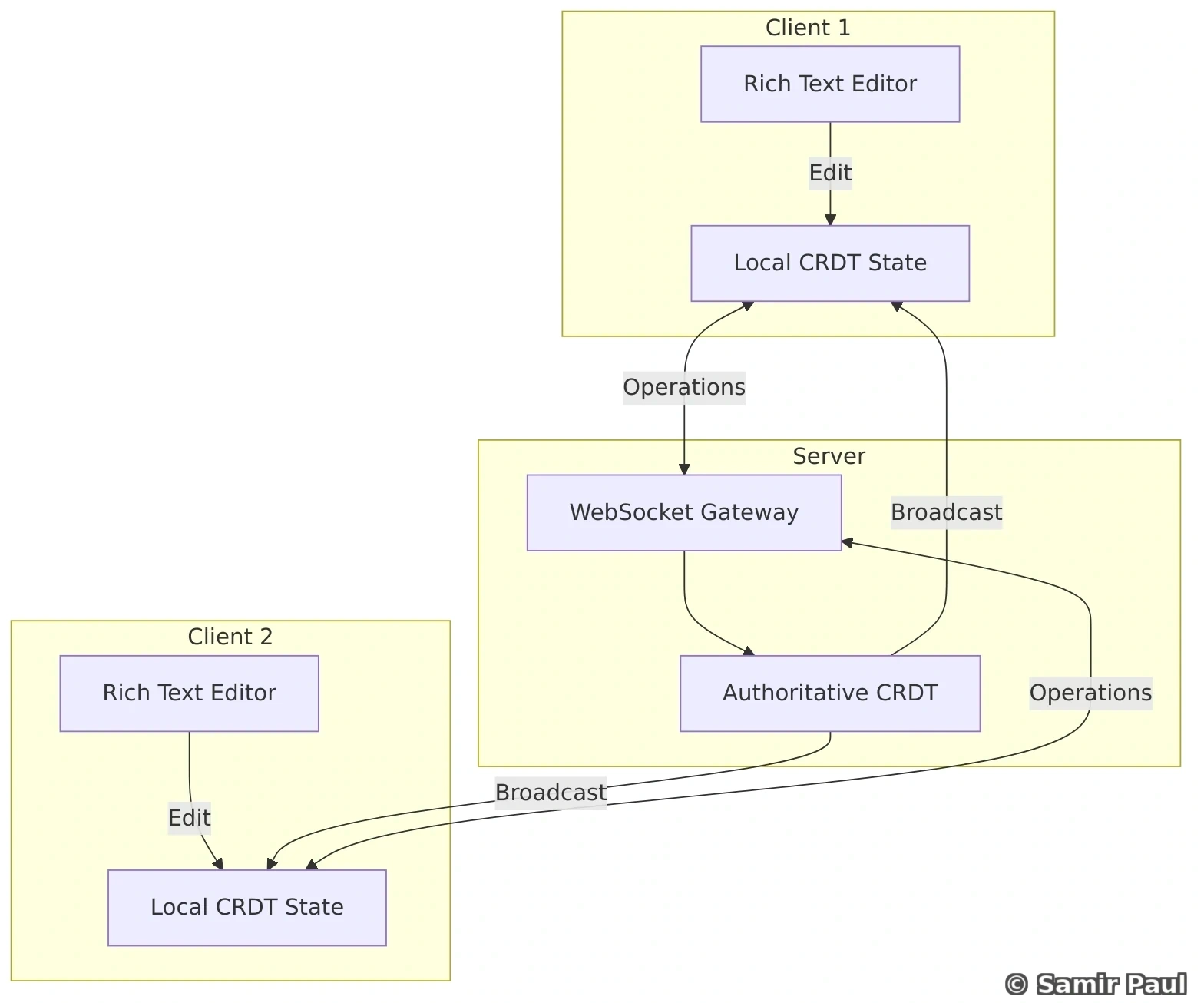

WebSockets

Characteristics:

- Full-duplex communication

- Persistent connection

- Real-time bidirectional data flow

Use cases:

- Chat applications

- Live feeds

- Real-time gaming

- Collaborative editing

5.4 API Authentication

1. Basic Authentication

Authorization: Basic base64(username:password)- Simple but insecure (credentials in every request)

- Use only over HTTPS

2. API Keys

X-API-Key: your-api-key-here- Simple to implement

- Good for server-to-server

- Hard to revoke individual keys

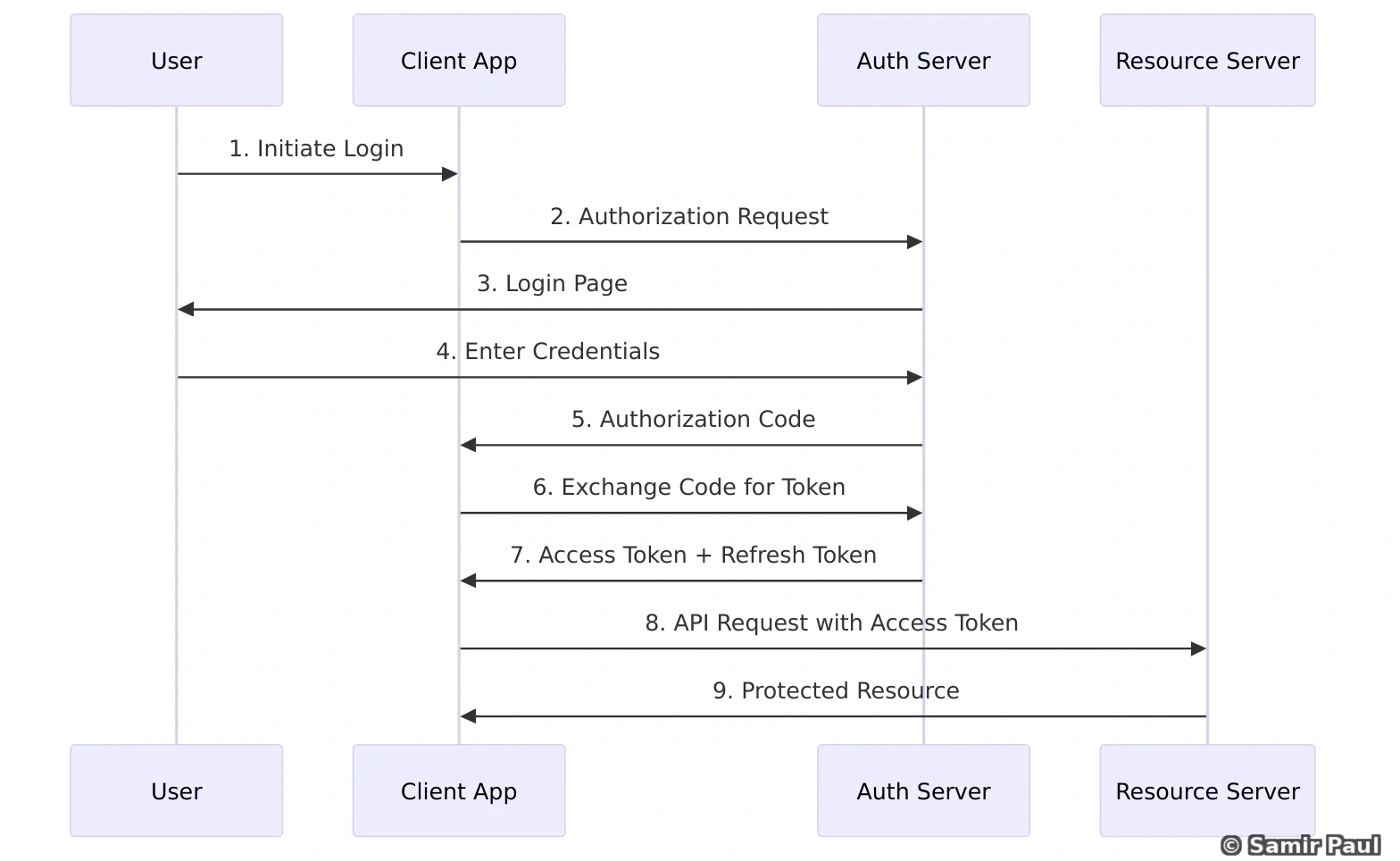

3. OAuth 2.0

Most common flows:

Authorization Code Flow (for web apps):

4. JWT (JSON Web Tokens)

Structure: header.payload.signature

// Header

{

"alg": "HS256",

"typ": "JWT"

}

// Payload

{

"sub": "user123",

"name": "John Doe",

"iat": 1516239022,

"exp": 1516242622

}

// Signature

HMACSHA256(

base64UrlEncode(header) + "." +

base64UrlEncode(payload),

secret

)Pros:

- Stateless (no server-side session storage)

- Portable across domains

- Contains user information

Cons:

- Can’t revoke before expiration

- Token size can be large

- Vulnerable if secret is exposed

6. Load Balancing

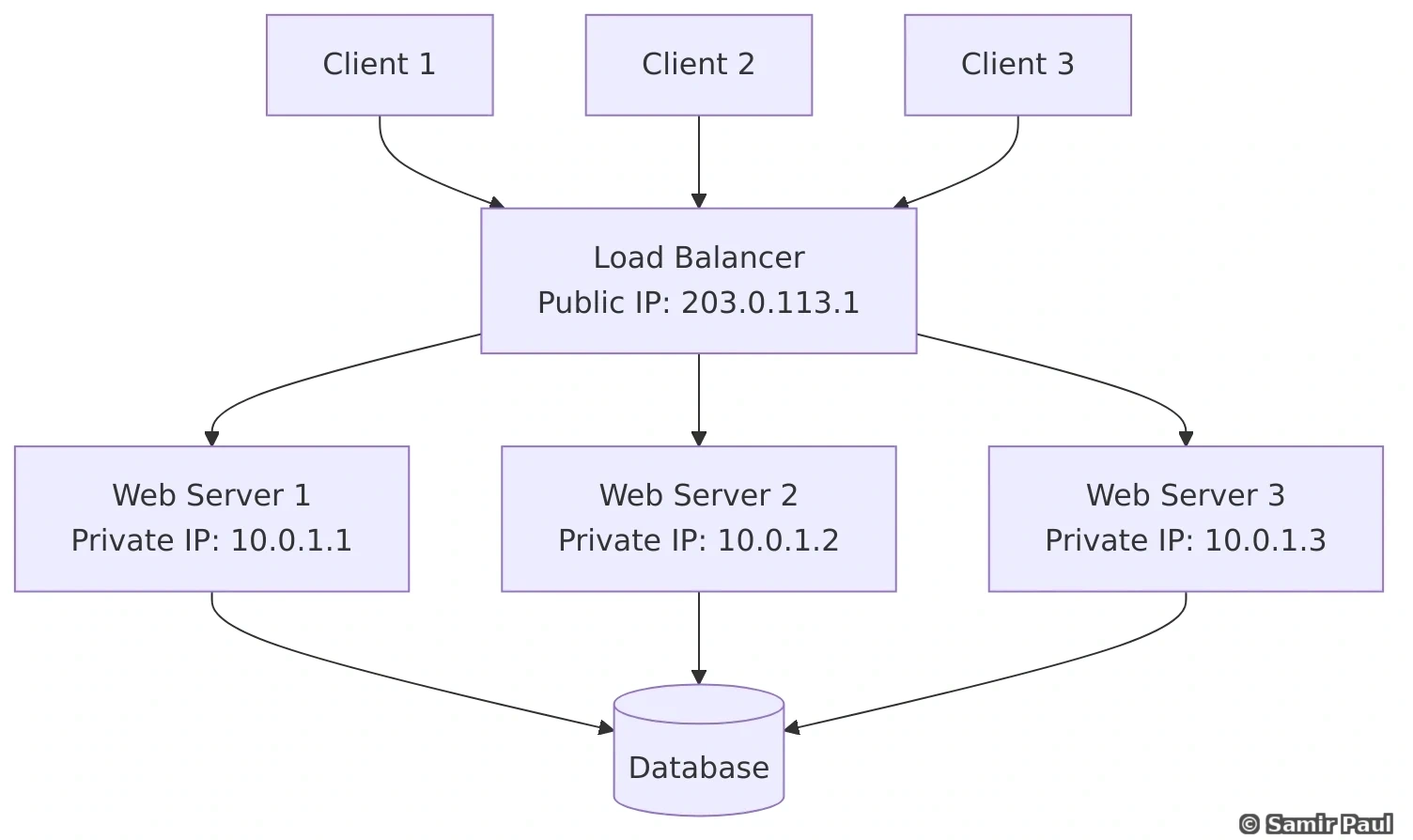

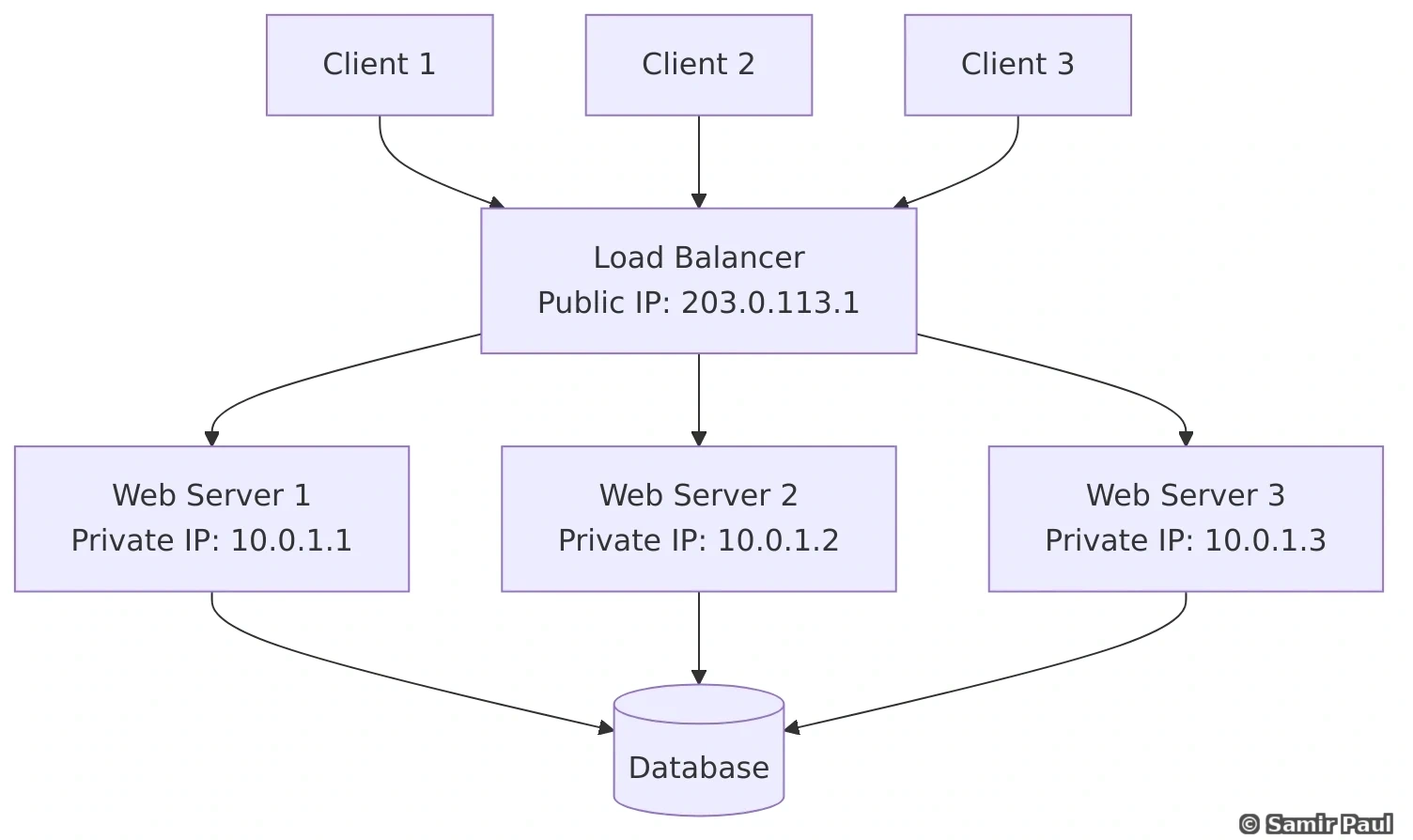

Load balancers distribute incoming traffic across multiple servers, improving availability and preventing any single server from becoming a bottleneck.

6.1 Why Load Balancers?

Benefits:

- High Availability: If one server fails, traffic routes to healthy servers

- Scalability: Add more servers to handle increased load

- Performance: Distribute load evenly

- Flexibility: Perform maintenance without downtime

6.2 Load Balancer Architecture

6.3 Load Balancing Algorithms

Choosing the Right Algorithm: The best load balancing algorithm depends on your specific use case. For stateless applications with similar request complexity, Round Robin works well. For long-lived connections (like WebSockets), use Least Connections. For session affinity, use IP Hash or Consistent Hashing.

1. Round Robin

- Distributes requests sequentially

- Simple and fair distribution

- Doesn’t account for server load or capacity

class RoundRobinLoadBalancer:

def __init__(self, servers):

self.servers = servers

self.current = 0

def get_server(self):

server = self.servers[self.current]

self.current = (self.current + 1) % len(self.servers)

return server2. Weighted Round Robin

- Assigns weight to each server based on capacity

- Servers with higher capacity get more requests

class WeightedRoundRobinLoadBalancer:

def __init__(self, servers_with_weights):

# servers_with_weights = [('server1', 5), ('server2', 3), ('server3', 2)]

self.servers = []

for server, weight in servers_with_weights:

self.servers.extend([server] * weight)

self.current = 0

def get_server(self):

server = self.servers[self.current]

self.current = (self.current + 1) % len(self.servers)

return server3. Least Connections

- Routes to server with fewest active connections

- Good for long-lived connections

class LeastConnectionsLoadBalancer:

def __init__(self, servers):

self.connections = {server: 0 for server in servers}

def get_server(self):

return min(self.connections, key=self.connections.get)

def on_connection_established(self, server):

self.connections[server] += 1

def on_connection_closed(self, server):

self.connections[server] -= 14. Least Response Time

- Routes to server with lowest average response time

- Dynamically adapts to server performance

5. IP Hash

- Uses client IP to determine server

- Ensures same client always routes to same server

- Good for session persistence

import hashlib

class IPHashLoadBalancer:

def __init__(self, servers):

self.servers = servers

def get_server(self, client_ip):

hash_value = int(hashlib.md5(client_ip.encode()).hexdigest(), 16)

server_index = hash_value % len(self.servers)

return self.servers[server_index]6. Random

- Randomly selects server

- Simple and works well with many servers

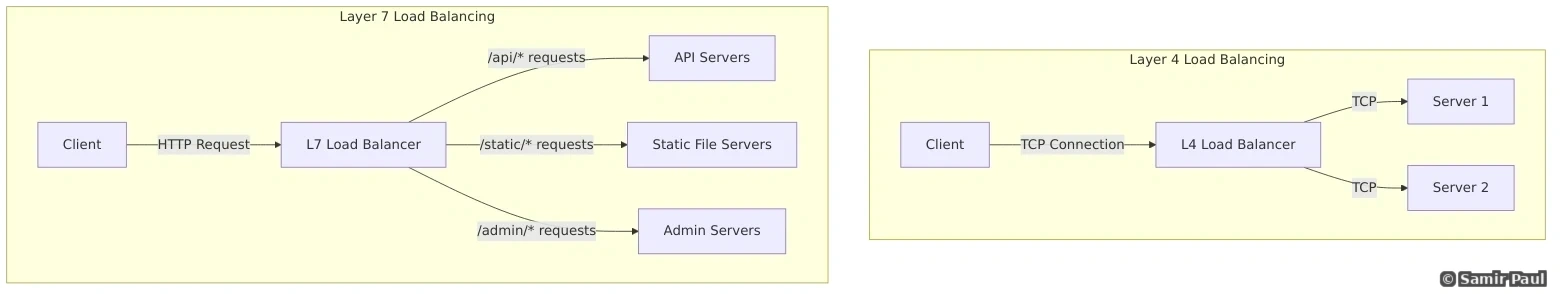

6.4 Load Balancer Layers

Layer 4 vs Layer 7 Trade-off: Layer 4 load balancing is faster (10-100 microseconds latency) but less intelligent. Layer 7 adds 1-10 milliseconds latency but enables content-based routing, URL rewriting, and application-aware decisions. Use Layer 4 for raw throughput, Layer 7 for microservices architectures.

Layer 4 (Transport Layer) Load Balancing

- Operates at TCP/UDP level

- Routes based on IP and port

- Fast (no packet inspection)

- Cannot make routing decisions based on content

Layer 7 (Application Layer) Load Balancing

- Operates at HTTP level

- Can route based on URL, headers, cookies

- More intelligent routing

- Slower (needs to inspect packets)

- Can do SSL termination

6.5 Health Checks

Load balancers need to know which servers are healthy:

import time

import requests

class HealthChecker:

def __init__(self, servers, check_interval=10):

self.servers = servers

self.healthy_servers = set(servers)

self.check_interval = check_interval

def check_health(self, server):

try:

response = requests.get(f"{server}/health", timeout=2)

return response.status_code == 200

except:

return False

def monitor(self):

while True:

for server in self.servers:

if self.check_health(server):

self.healthy_servers.add(server)

else:

self.healthy_servers.discard(server)

time.sleep(self.check_interval)

def get_healthy_servers(self):

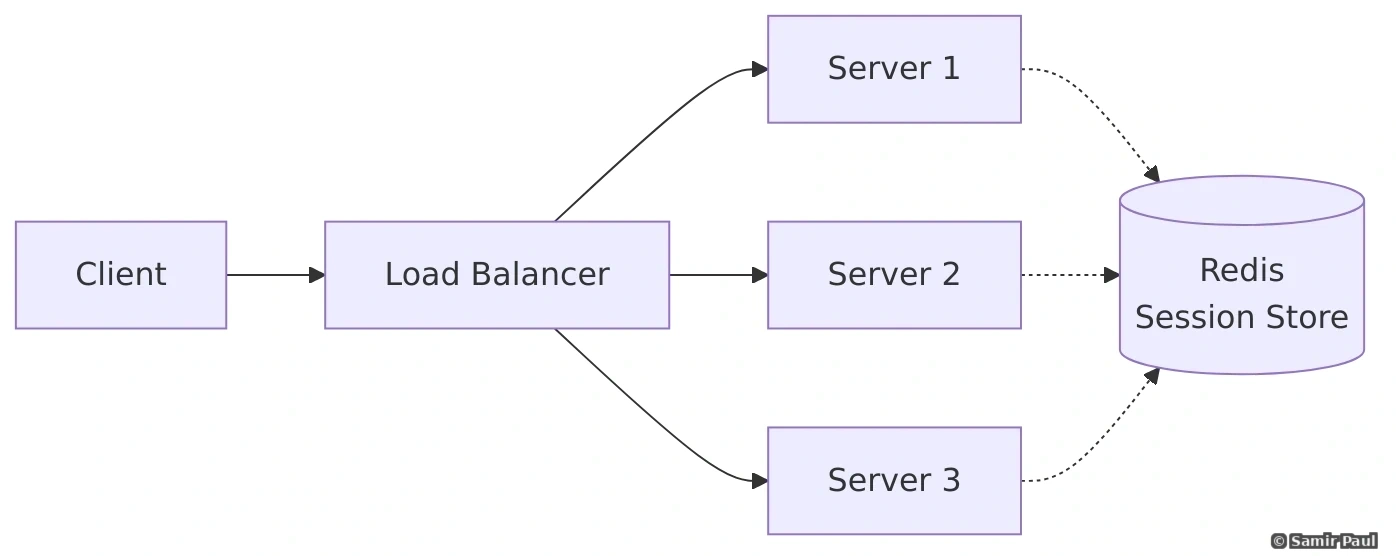

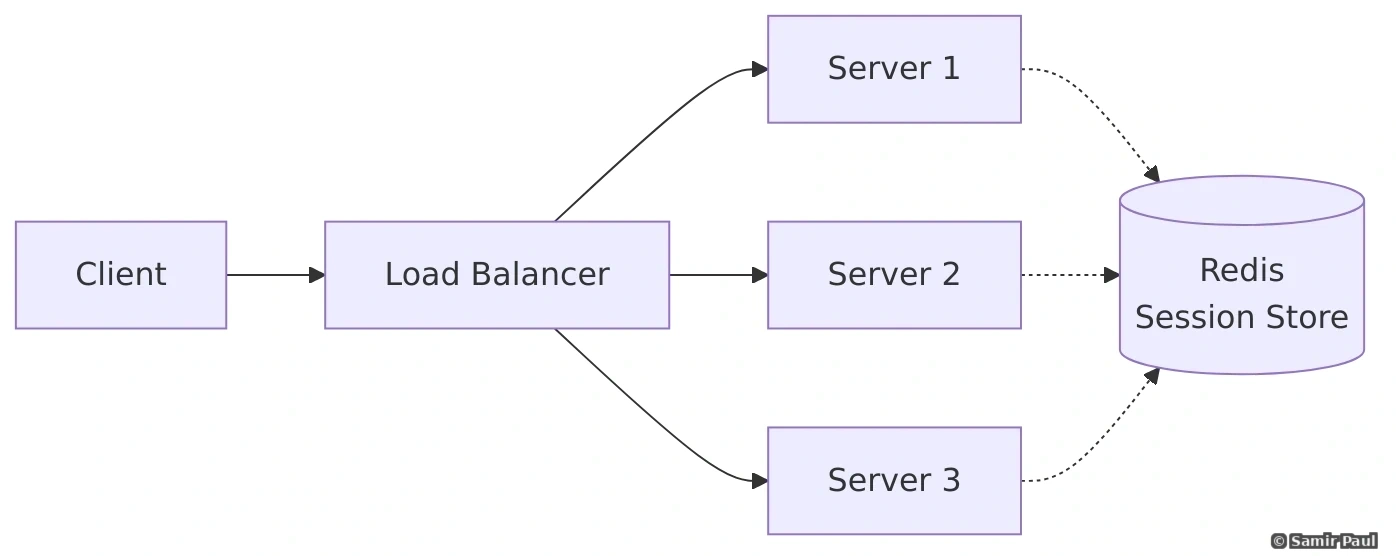

return list(self.healthy_servers)6.6 Session Persistence (Sticky Sessions)

Challenge: With load balancing, subsequent requests from the same user might go to different servers.

Solutions:

1. Sticky Sessions at Load Balancer

- Load balancer remembers which server served a client

- Routes all requests from that client to same server

- Problem: If server dies, session is lost

2. Shared Session Storage

- Store sessions in Redis/Memcached

- Any server can serve any request

- More scalable and fault-tolerant

3. Client-Side Sessions (JWT)

- Session data stored in token on client

- Stateless servers

- Token passed with each request

7. Caching Strategies

7.1 What to Cache?

Good candidates for caching:

- Read-heavy data: Data that is read frequently but updated infrequently

- Expensive computations: Results that take significant CPU time to calculate

- Database query results: Frequently-run queries with stable results

- API responses: Third-party API calls with consistent data

- Rendered HTML pages: Static or semi-static page content

- Session data: User authentication and session information

- Static assets: Images, CSS, JavaScript files

Avoid caching:

- Highly dynamic data that changes frequently

- User-specific sensitive data without proper isolation

- Data requiring strong consistency guarantees

- Data with low hit rates (rarely accessed)

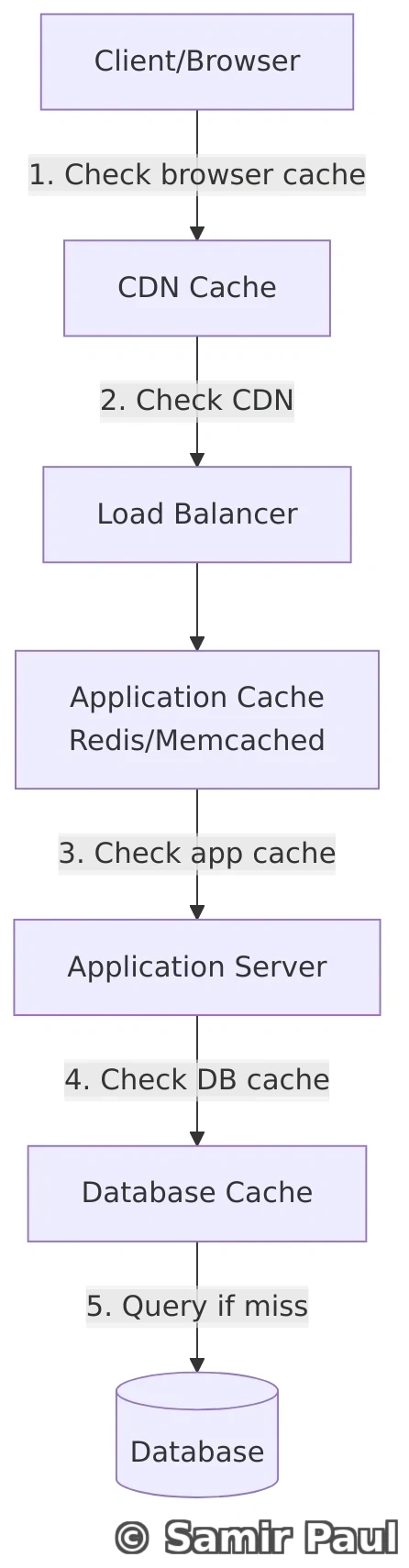

7.2 Cache Levels

1. Client-Side Caching

- Browser cache

- LocalStorage / SessionStorage

- Service Workers

2. CDN Caching

- Caches static assets close to users

- Reduces origin server load

3. Application-Level Caching

- In-memory caches (Redis, Memcached)

- Application server cache

4. Database Caching

- Query result cache

- Buffer pool cache

7.3 Caching Patterns

Caching Pattern Selection: Choose your caching pattern based on read/write ratios and consistency requirements:

- Cache-Aside: Best for read-heavy workloads with eventual consistency tolerance (90% of use cases)

- Write-Through: When strong consistency is required between cache and database

- Write-Behind: For write-heavy workloads where eventual consistency is acceptable

- Refresh-Ahead: For predictable access patterns with hot data

1. Cache-Aside (Lazy Loading)

Most common pattern:

def get_user(user_id):

# Try cache first

user = cache.get(f"user:{user_id}")

if user is None:

# Cache miss - load from database

user = database.query(f"SELECT * FROM users WHERE id = {user_id}")

# Store in cache for next time

cache.set(f"user:{user_id}", user, ttl=3600)

return userFlow:

- Application checks cache

- If data exists (cache hit), return it

- If data doesn’t exist (cache miss):

- Load from database

- Write to cache

- Return data

Pros:

- Only requested data is cached

- Cache failure doesn’t break application

Cons:

- Initial request is slow (cache miss)

- Stale data possible if cache doesn’t expire

2. Read-Through Cache

Cache sits between application and database:

class ReadThroughCache:

def __init__(self, cache, database):

self.cache = cache

self.database = database

def get(self, key):

# Cache handles database lookup automatically

return self.cache.get(key, loader=lambda: self.database.get(key))Flow:

- Application requests data from cache

- Cache checks if data exists

- If not, cache loads from database automatically

- Cache returns data

Pros:

- Cleaner application code

- Consistent caching logic

Cons:

- Cache becomes critical dependency

- Potential bottleneck

3. Write-Through Cache

Data written to cache and database simultaneously:

def update_user(user_id, data):

# Write to both cache and database

cache.set(f"user:{user_id}", data)

database.update(f"UPDATE users SET ... WHERE id = {user_id}")Pros:

- Cache always consistent with database

- No stale data

Cons:

- Slower writes (two operations)

- Cache may contain rarely-read data

4. Write-Behind (Write-Back) Cache

Data written to cache immediately, database updated asynchronously:

def update_user(user_id, data):

# Write to cache immediately

cache.set(f"user:{user_id}", data)

# Queue database write for later

write_queue.push({

'operation': 'update',

'table': 'users',

'id': user_id,

'data': data

})Pros:

- Very fast writes

- Can batch database writes

Cons:

- Risk of data loss if cache fails

- More complex to implement

- Eventual consistency

5. Refresh-Ahead

Proactively refresh cache before expiration:

import time

import threading

def refresh_ahead_cache(key, ttl, refresh_threshold=0.8):

data = cache.get(key)

if data is None:

# Cache miss - load and cache

data = load_from_database(key)

cache.set(key, data, ttl=ttl)

else:

# Check if approaching expiration

remaining_ttl = cache.ttl(key)

if remaining_ttl < (ttl * refresh_threshold):

# Asynchronously refresh

threading.Thread(

target=lambda: cache.set(key, load_from_database(key), ttl=ttl)

).start()

return data7.4 Cache Eviction Policies

When cache is full, which data should be removed?

1. LRU (Least Recently Used)

- Evicts least recently accessed items

- Most commonly used

- Good for general-purpose caching

2. LFU (Least Frequently Used)

- Evicts items accessed least often

- Good for data with varying access patterns

3. FIFO (First In First Out)

- Evicts oldest items first

- Simple but not always optimal

4. TTL (Time To Live)

- Items expire after fixed time

- Good for time-sensitive data

Implementation of LRU Cache:

from collections import OrderedDict

class LRUCache:

def __init__(self, capacity):

self.cache = OrderedDict()

self.capacity = capacity

def get(self, key):

if key not in self.cache:

return None

# Move to end (most recently used)

self.cache.move_to_end(key)

return self.cache[key]

def put(self, key, value):

if key in self.cache:

# Update and move to end

self.cache.move_to_end(key)

self.cache[key] = value

if len(self.cache) > self.capacity:

# Remove least recently used (first item)

self.cache.popitem(last=False)

# Usage

cache = LRUCache(capacity=3)

cache.put("user:1", {"name": "Alice"})

cache.put("user:2", {"name": "Bob"})

cache.put("user:3", {"name": "Charlie"})

cache.put("user:4", {"name": "David"}) # Evicts user:17.5 Cache Considerations

1. Cache Expiration (TTL)

TTL Strategy Pitfalls: Setting TTL too short causes cache misses and unnecessary database hits. Setting it too long risks serving stale data. Monitor cache hit ratios and adjust TTL dynamically based on actual data update frequency. For critical data, implement cache invalidation events rather than relying solely on TTL.

Set appropriate TTL based on data characteristics:

# Fast-changing data

cache.set("stock_price:AAPL", price, ttl=60) # 1 minute

# Slow-changing data

cache.set("user_profile:123", profile, ttl=3600) # 1 hour

# Rarely changing data

cache.set("product_category", categories, ttl=86400) # 24 hours2. Cache Stampede Prevention

Cache Thundering Herd: When a popular cached item expires, hundreds or thousands of requests can simultaneously hit the database, causing a spike in load. This can bring down the database. Implement one of: (1) Locking (only first request loads), (2) Probabilistic early refresh (reload before expiration), or (3) Refresh-Ahead pattern.

Problem: When cached item expires, multiple requests simultaneously hit database.

Solution: Use locks or probabilistic early expiration:

import threading

import random

lock_dict = {}

def get_with_stampede_protection(key, ttl, load_function):

data = cache.get(key)

if data is None:

# Get or create lock for this key

if key not in lock_dict:

lock_dict[key] = threading.Lock()

lock = lock_dict[key]

# Only one thread loads data

with lock:

# Double-check cache (another thread may have loaded it)

data = cache.get(key)

if data is None:

data = load_function()

cache.set(key, data, ttl=ttl)

# Probabilistic early refresh

remaining_ttl = cache.ttl(key)

if remaining_ttl < ttl * 0.2: # Last 20% of TTL

if random.random() < 0.1: # 10% chance

threading.Thread(

target=lambda: cache.set(key, load_function(), ttl=ttl)

).start()

return data3. Cache Warming

Pre-populate cache with frequently accessed data:

def warm_cache():

"""Run during application startup"""

# Load popular users

popular_users = database.query("SELECT * FROM users ORDER BY popularity DESC LIMIT 1000")

for user in popular_users:

cache.set(f"user:{user.id}", user, ttl=3600)

# Load popular products

popular_products = database.query("SELECT * FROM products ORDER BY sales DESC LIMIT 5000")

for product in popular_products:

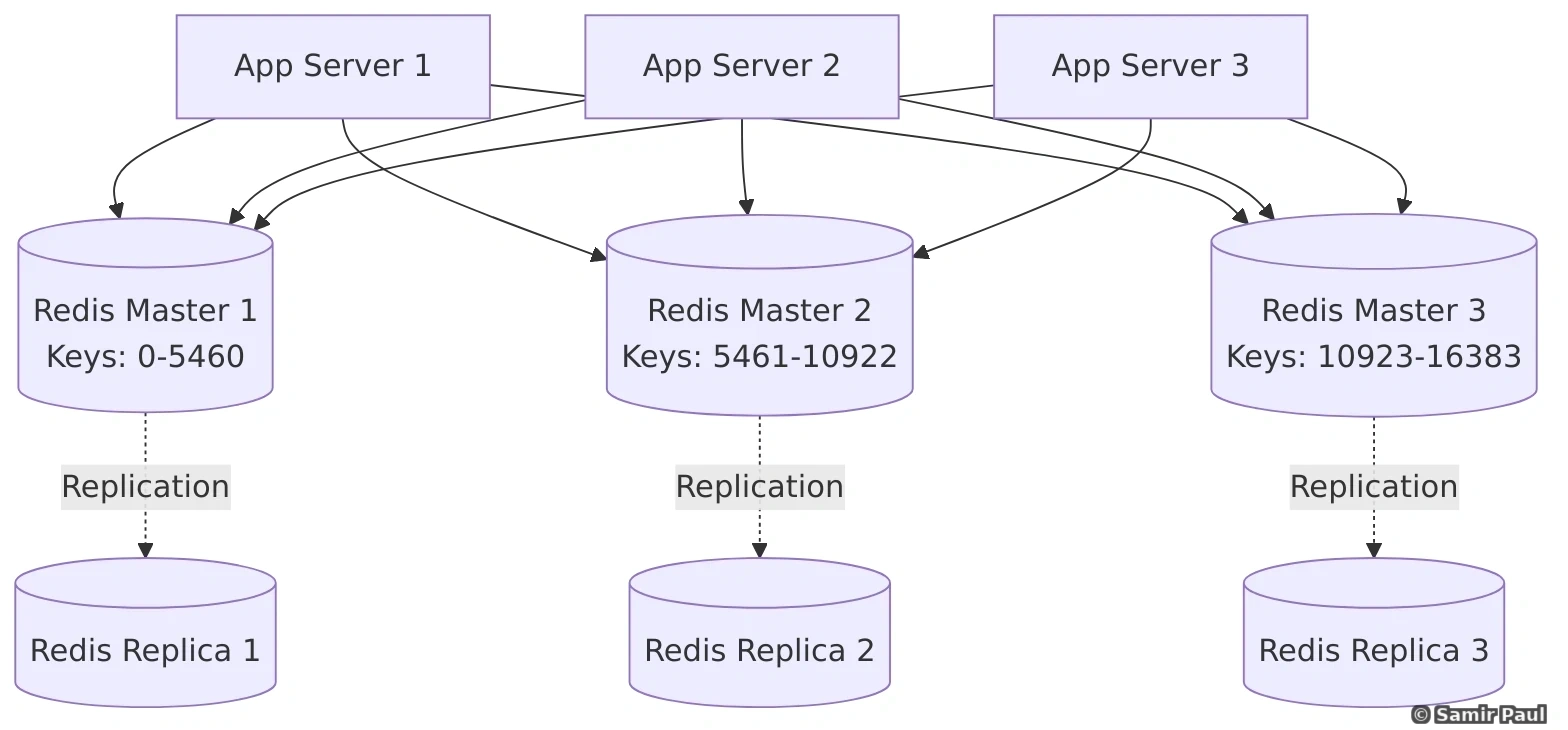

cache.set(f"product:{product.id}", product, ttl=7200)4. Distributed Caching

For high availability and scalability:

7.6 Redis vs Memcached

| Feature | Redis | Memcached |

|---|---|---|

| Data Structures | Strings, Lists, Sets, Sorted Sets, Hashes, Bitmaps | Only strings |

| Persistence | Yes (RDB, AOF) | No |

| Replication | Yes | No (requires external tools) |

| Transactions | Yes | No |

| Pub/Sub | Yes | No |

| Lua Scripting | Yes | No |

| Multi-threading | Single-threaded (6.0+ has I/O threads) | Multi-threaded |

| Memory Efficiency | Good | Slightly better |

| Use Case | Complex caching, session store, queues | Simple key-value caching |

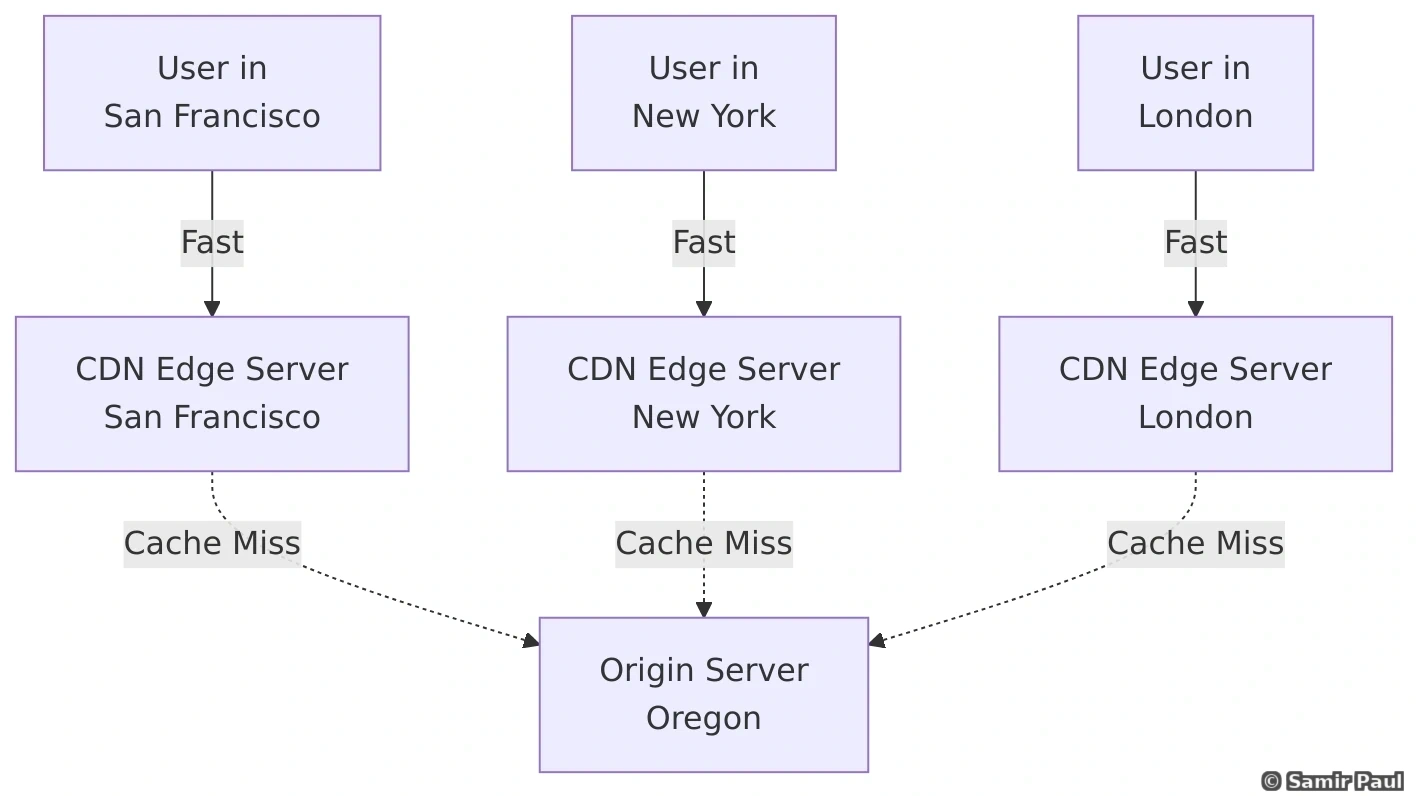

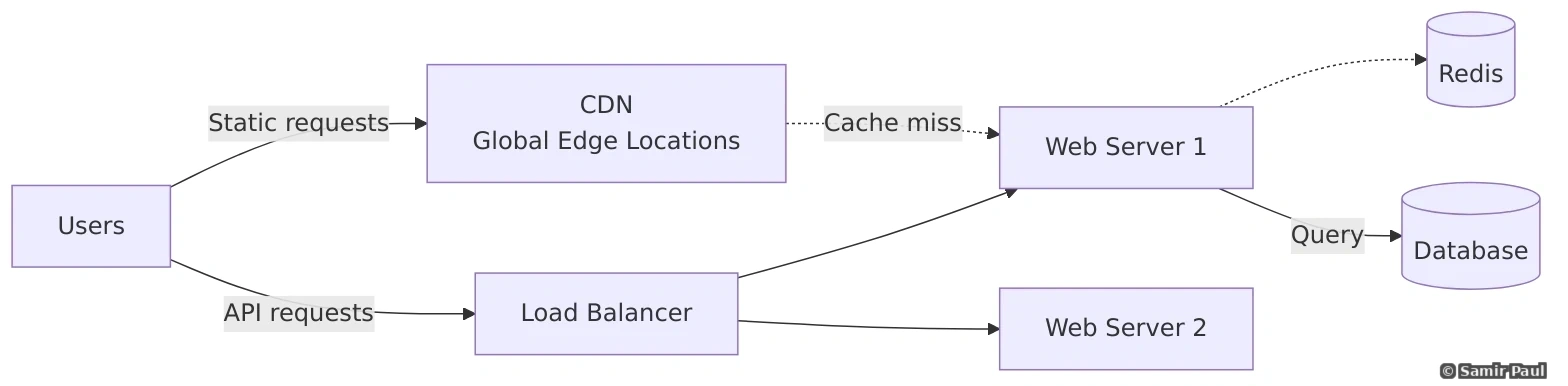

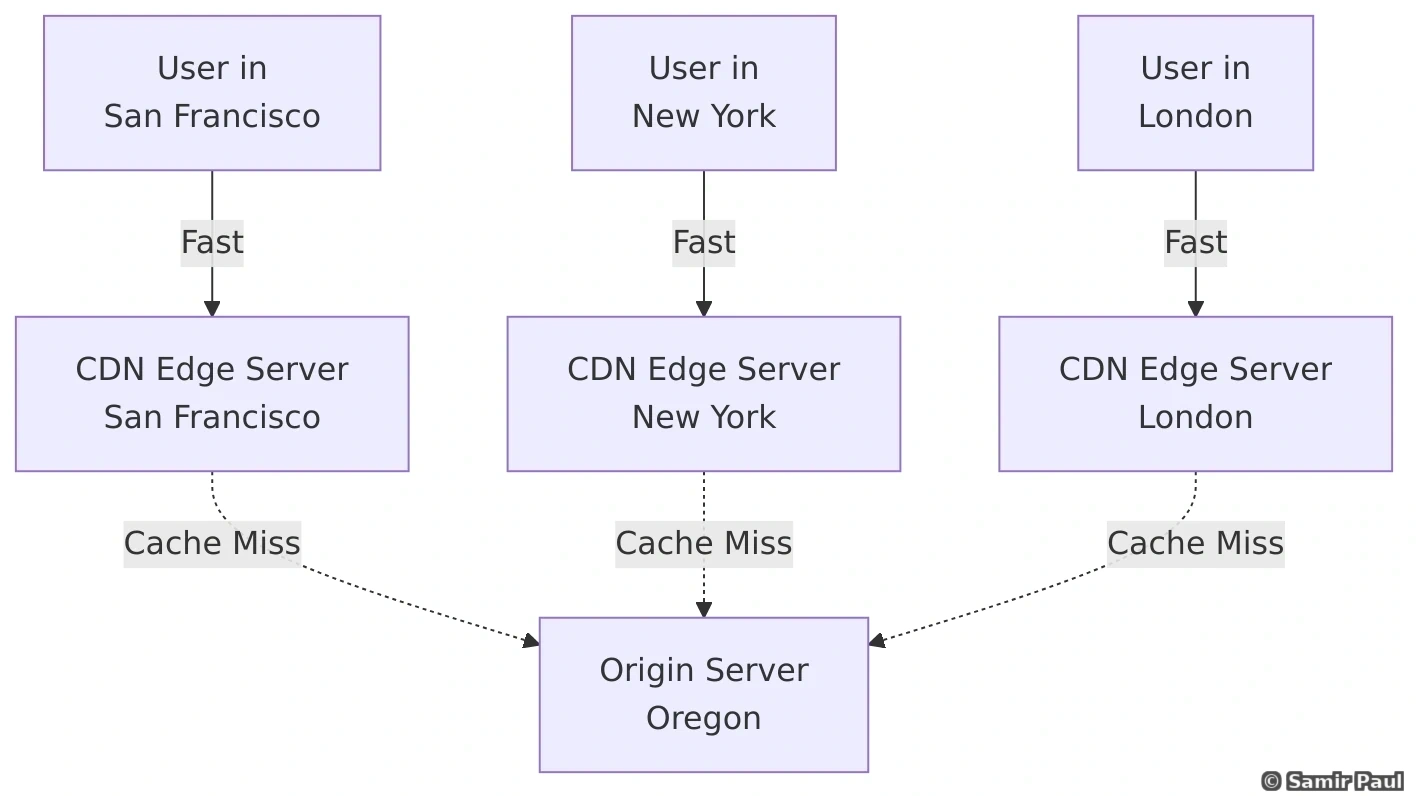

8. Content Delivery Networks (CDN)

CDNs are geographically distributed networks of servers that deliver content to users based on their location.

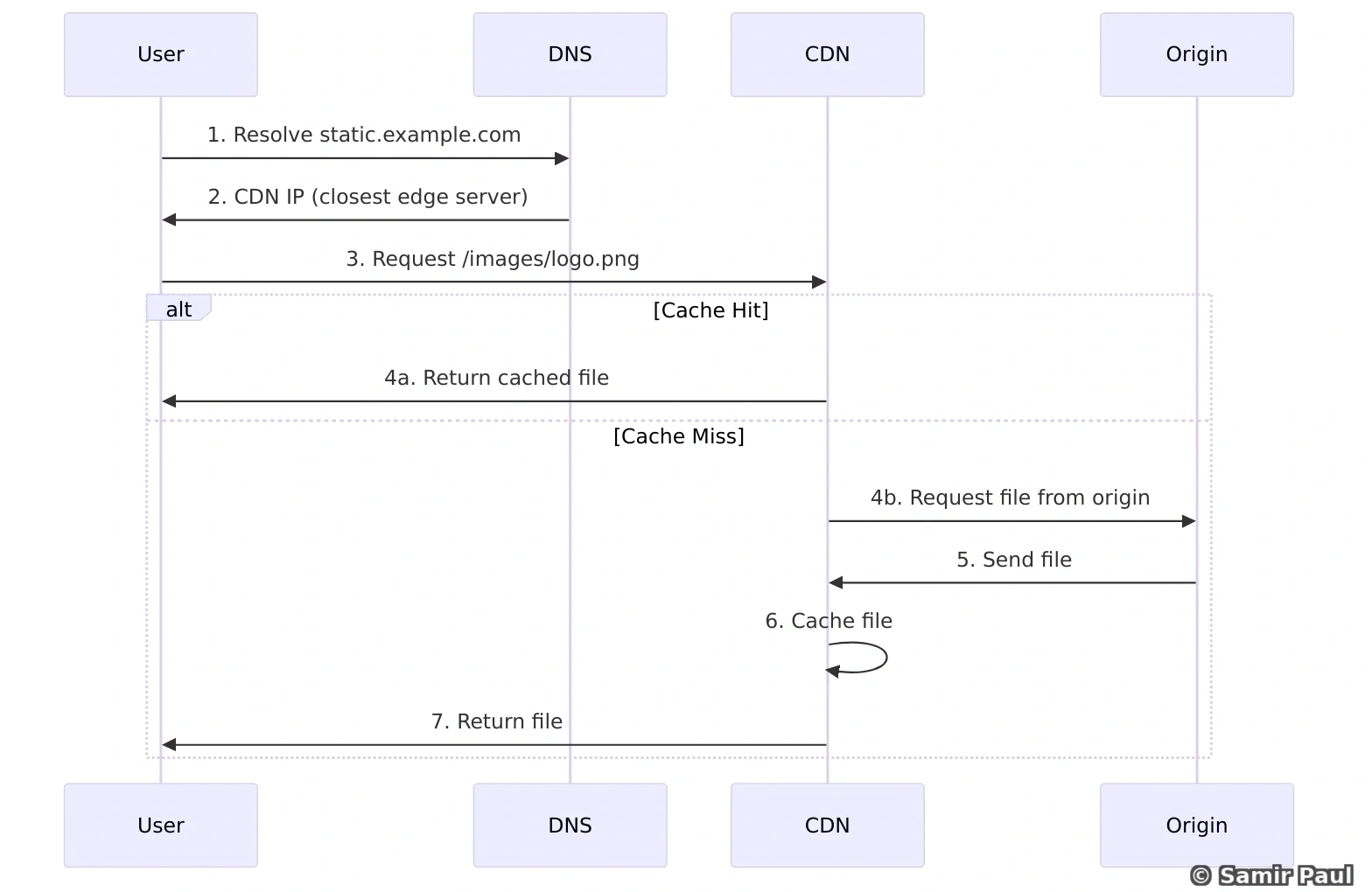

8.1 How CDN Works

CDN Request Flow:

8.2 Benefits of CDN

Reduced Latency

- Content served from nearest location

- Fewer network hops

Reduced Origin Load

- CDN handles most traffic

- Origin serves only cache misses

Better Availability

- Multiple edge servers provide redundancy

- Can serve stale content if origin is down

DDoS Protection

- Distributed architecture absorbs attacks

- Traffic filtered at edge

Cost Savings

- Reduced bandwidth from origin

- Lower infrastructure costs

8.3 What to Put on CDN?

Static Assets (Best for CDN):

- Images

- CSS files

- JavaScript files

- Fonts

- Videos

- PDFs and downloadable files

Dynamic Content (Possible with advanced CDNs):

- API responses with caching headers

- Personalized content (with edge computing)

- HTML pages

8.4 CDN Considerations

1. Cache Invalidation

Challenge: How to update cached content?

Solutions:

a. Time-Based (TTL)

Cache-Control: max-age=86400 # Cache for 24 hoursb. Versioned URLs

/static/app.js?v=1.2.3

/static/app.1.2.3.js

/static/app.abc123hash.jsc. Cache Purge API

import requests

def purge_cdn_cache(url):

# Cloudflare example

response = requests.post(

'https://api.cloudflare.com/client/v4/zones/{zone_id}/purge_cache',

headers={'Authorization': 'Bearer {api_token}'},

json={'files': [url]}

)

return response.json()2. Cache-Control Headers

# Don't cache

Cache-Control: no-store

# Cache but revalidate

Cache-Control: no-cache

# Cache for 1 hour

Cache-Control: max-age=3600

# Cache for 1 hour, revalidate after expiry

Cache-Control: max-age=3600, must-revalidate

# Private (browser only, not CDN)

Cache-Control: private, max-age=3600

# Public (can be cached by CDN)

Cache-Control: public, max-age=86400

# Immutable (never changes)

Cache-Control: public, max-age=31536000, immutable3. CDN Push vs Pull

Pull CDN (Most Common):

- CDN fetches content from origin on first request

- Origin remains source of truth

- Lazy loading

Push CDN:

- You upload content directly to CDN

- No origin server needed

- Manual or automated uploads

9. Database Systems

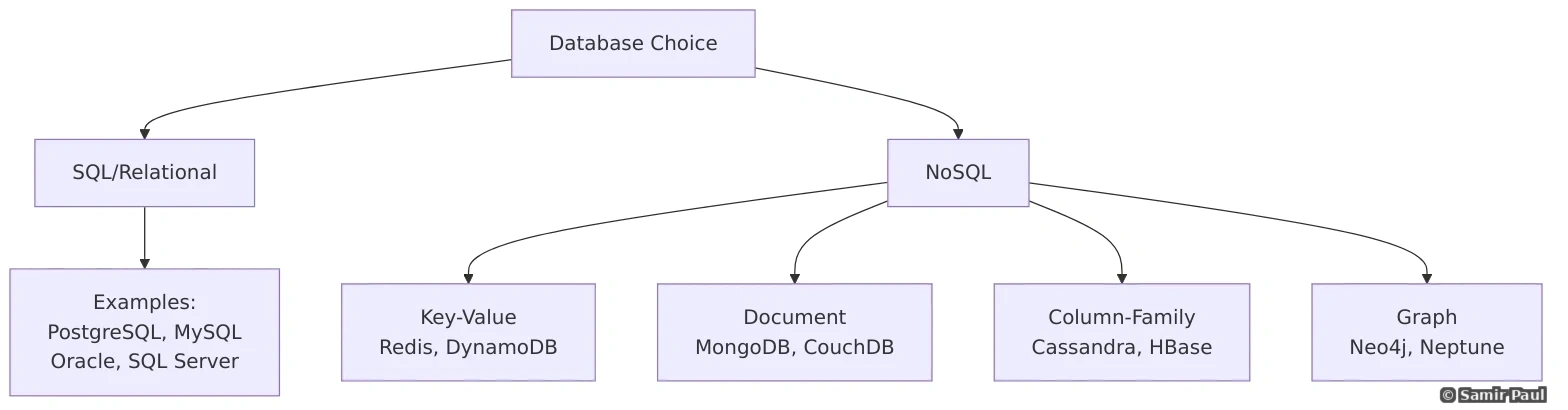

9.1 SQL vs NoSQL

9.2 When to Use SQL?

ACID Guarantees: SQL databases provide ACID properties (Atomicity, Consistency, Isolation, Durability) which are critical for financial transactions, inventory management, and any scenario where data integrity cannot be compromised. If a transaction fails midway, all changes are rolled back - ensuring your data never ends up in an inconsistent state.

Use SQL (Relational Databases) When:

Data is structured and relationships are important

- E-commerce (orders, customers, products)

- Financial systems (accounts, transactions)

- CRM systems

ACID compliance is required

- Banking and financial applications

- Inventory management

- Any system where data consistency is critical

Complex queries needed

- Reporting and analytics

- Joins across multiple tables

- Aggregations and complex filtering

Data integrity constraints

- Foreign keys

- Unique constraints

- Check constraints

Example Schema (E-commerce):

CREATE TABLE users (

id BIGINT PRIMARY KEY AUTO_INCREMENT,

email VARCHAR(255) UNIQUE NOT NULL,

name VARCHAR(255) NOT NULL,

created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP

);

CREATE TABLE products (

id BIGINT PRIMARY KEY AUTO_INCREMENT,

name VARCHAR(255) NOT NULL,

price DECIMAL(10, 2) NOT NULL,

stock INT NOT NULL,

category_id BIGINT,

created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP,

FOREIGN KEY (category_id) REFERENCES categories(id)

);

CREATE TABLE orders (

id BIGINT PRIMARY KEY AUTO_INCREMENT,

user_id BIGINT NOT NULL,

total_amount DECIMAL(10, 2) NOT NULL,

status ENUM('pending', 'paid', 'shipped', 'delivered') DEFAULT 'pending',

created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP,

FOREIGN KEY (user_id) REFERENCES users(id)

);

CREATE TABLE order_items (

id BIGINT PRIMARY KEY AUTO_INCREMENT,

order_id BIGINT NOT NULL,

product_id BIGINT NOT NULL,

quantity INT NOT NULL,

price DECIMAL(10, 2) NOT NULL,

FOREIGN KEY (order_id) REFERENCES orders(id),

FOREIGN KEY (product_id) REFERENCES products(id)

);9.3 When to Use NoSQL?

Use NoSQL When:

Massive scale required

- Billions of rows

- Petabytes of data

- Millions of operations per second

Schema flexibility needed

- Rapidly evolving data models

- Each record has different fields

- Semi-structured or unstructured data

High availability over consistency

- Social media feeds

- Real-time analytics

- IoT data

Denormalized data is acceptable

- Data duplication is okay

- Joins are rare

NoSQL Types:

1. Key-Value Stores (Redis, DynamoDB)

Simplest NoSQL model:

# Redis example

cache.set("user:123", json.dumps({

"name": "John Doe",

"email": "john@example.com"

}))

user = json.loads(cache.get("user:123"))Use cases:

- Session storage

- Caching

- Real-time analytics

- Shopping carts

2. Document Databases (MongoDB, CouchDB)

Store JSON-like documents:

// MongoDB example

{

"_id": ObjectId("507f1f77bcf86cd799439011"),

"name": "John Doe",

"email": "john@example.com",

"addresses": [

{

"type": "home",

"street": "123 Main St",

"city": "San Francisco",

"zip": "94102"

},

{

"type": "work",

"street": "456 Market St",

"city": "San Francisco",

"zip": "94103"

}

],

"orders": [

{"order_id": "ORD001", "total": 99.99},

{"order_id": "ORD002", "total": 149.99}

]

}Use cases:

- Content management

- User profiles

- Product catalogs

- Real-time analytics

3. Column-Family Stores (Cassandra, HBase)

Optimized for write-heavy workloads:

Row Key: user_123

Column Family: profile

name: "John Doe"

email: "john@example.com"

Column Family: activity

2024-01-15:10:30:00: "login"

2024-01-15:10:31:22: "viewed_product_456"

2024-01-15:10:35:45: "added_to_cart_456"Use cases:

- Time-series data

- Event logging

- IoT sensor data

- Message systems

4. Graph Databases (Neo4j, Amazon Neptune)

Optimized for relationships:

// Neo4j example

(John:Person {name: "John Doe"})

-[:FRIENDS_WITH]->(Jane:Person {name: "Jane Smith"})

-[:WORKS_AT]->(Company:Company {name: "Acme Corp"})

<-[:WORKS_AT]-(Bob:Person {name: "Bob Johnson"})

-[:LIKES]->(Product:Product {name: "Widget"})Use cases:

- Social networks

- Recommendation engines

- Fraud detection

- Knowledge graphs

9.4 Database Performance Optimization

Performance Optimization Hierarchy: Follow this order for maximum impact:

- Add indexes on frequently queried columns (10-100x speedup)

- Optimize slow queries using EXPLAIN (5-50x speedup)

- Add caching layer for hot data (10-100x speedup)

- Scale reads with replicas when CPU-bound (linear scaling)

- Shard database only when vertical scaling exhausted (adds complexity)

1. Indexing

Index Trade-offs: Every index speeds up reads but slows down writes. An insert/update/delete must update all indexes on that table. A table with 10 indexes will have 10x slower writes. Only index columns that are frequently used in WHERE, JOIN, or ORDER BY clauses. Monitor index usage with EXPLAIN and remove unused indexes.

Indexes speed up queries but slow down writes:

-- Create index

CREATE INDEX idx_users_email ON users(email);

CREATE INDEX idx_products_category ON products(category_id);

-- Composite index

CREATE INDEX idx_orders_user_status ON orders(user_id, status);

-- Full-text index

CREATE FULLTEXT INDEX idx_products_search ON products(name, description);Types of Indexes:

- B-Tree Index (default): Good for equality and range queries

- Hash Index: Only for equality comparisons

- Full-Text Index: For text search

- Spatial Index: For geographic data

Index Best Practices:

- Index columns used in WHERE, JOIN, ORDER BY

- Don’t over-index (slows writes)

- Use composite indexes for multi-column queries

- Monitor index usage and remove unused indexes

2. Query Optimization

-- Bad: SELECT *

SELECT * FROM users WHERE email = 'john@example.com';

-- Good: Select only needed columns

SELECT id, name, email FROM users WHERE email = 'john@example.com';

-- Use EXPLAIN to analyze queries

EXPLAIN SELECT * FROM orders

WHERE user_id = 123 AND status = 'pending';

-- Avoid N+1 queries

-- Bad: Query in loop

for user_id in user_ids:

orders = db.query(f"SELECT * FROM orders WHERE user_id = {user_id}")

-- Good: Single query

orders = db.query(f"SELECT * FROM orders WHERE user_id IN ({','.join(user_ids)})")3. Connection Pooling

import psycopg2

from psycopg2 import pool

# Create connection pool

connection_pool = pool.SimpleConnectionPool(

minconn=5,

maxconn=20,

host="localhost",

database="mydb",

user="user",

password="password"

)

def execute_query(query):

conn = connection_pool.getconn()

try:

cursor = conn.cursor()

cursor.execute(query)

result = cursor.fetchall()

return result

finally:

connection_pool.putconn(conn)10. Database Sharding and Partitioning

Sharding: Last Resort Scaling: Sharding adds significant complexity - cross-shard joins become application-level operations, distributed transactions are complex, and rebalancing data is challenging. Exhaust these options first: (1) Optimize queries and add indexes, (2) Add read replicas, (3) Vertical scaling (bigger machine), (4) Caching layer. Only shard when you absolutely must handle massive scale (billions of rows, millions of QPS).

When a single database can’t handle the load, we need to split data across multiple databases.

10.1 Vertical vs Horizontal Partitioning

Vertical Partitioning (Splitting by columns)

Original Table:

users: id, name, email, address, bio, profile_pic

Split into:

users_basic: id, name, email

users_extended: id, address, bio, profile_picUse when:

- Different columns accessed with different frequencies

- Some columns are rarely used

- Reduce I/O for common queries

Horizontal Partitioning/Sharding (Splitting by rows)

users table split by region:

users_us: All US users

users_eu: All EU users

users_asia: All ASIA users10.2 Sharding Strategies

Choosing a Sharding Strategy:

- Range-Based: Use for time-series data or when range queries are common (e.g., “get all orders from last month”)

- Hash-Based: Best for even distribution and single-key lookups (e.g., user profiles, sessions)

- Geographic: Essential for data residency compliance (GDPR, data sovereignty laws)

- Directory-Based: Most flexible but adds latency and single point of failure (shard directory)

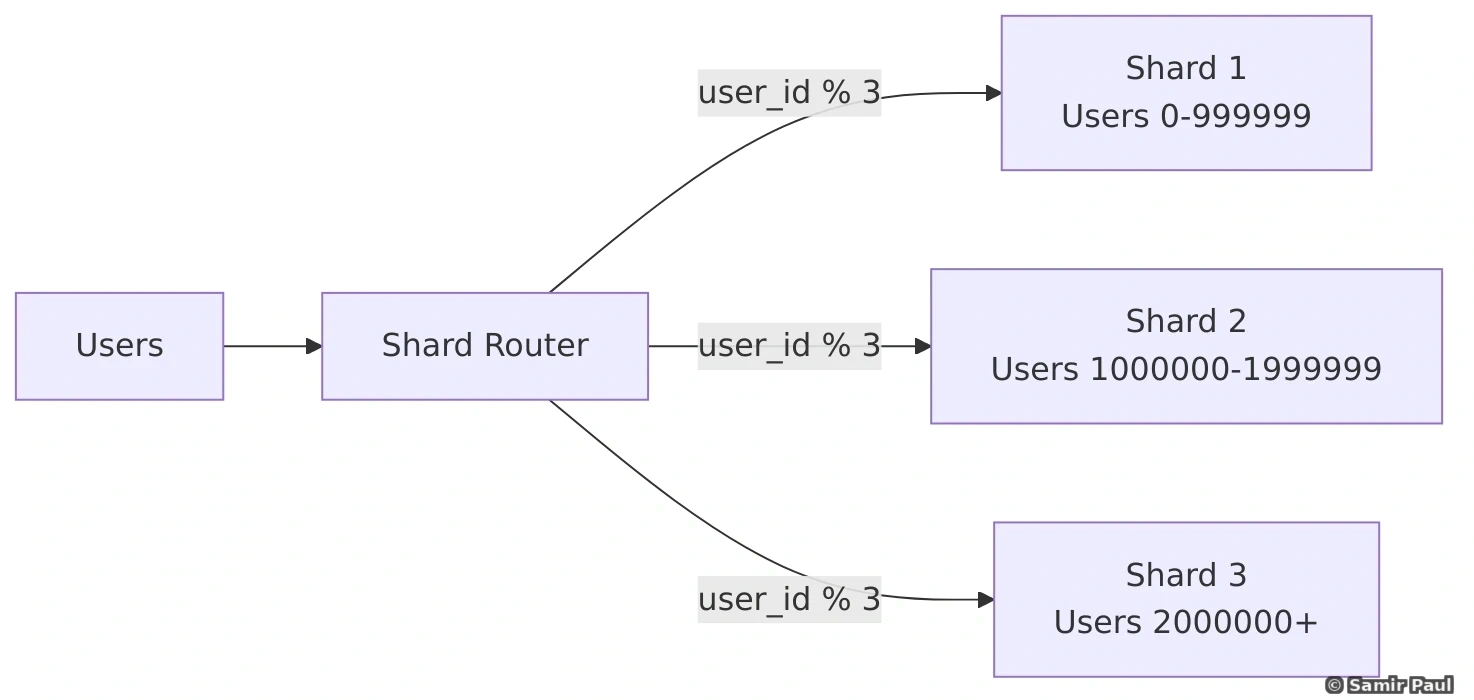

1. Range-Based Sharding

Split data based on ranges:

def get_shard_by_range(user_id):

if user_id < 1000000:

return "shard_1"

elif user_id < 2000000:

return "shard_2"

elif user_id < 3000000:

return "shard_3"

else:

return "shard_4"Pros:

- Simple to implement

- Easy to add new ranges

Cons:

- Uneven distribution (hot shards)

- Difficult to rebalance

2. Hash-Based Sharding

Use hash function to determine shard:

import hashlib

def get_shard_by_hash(user_id, num_shards):

hash_value = int(hashlib.md5(str(user_id).encode()).hexdigest(), 16)

shard_id = hash_value % num_shards

return f"shard_{shard_id}"Pros:

- Even distribution

- Automatic balancing

Cons:

- Adding shards requires rehashing

- Range queries difficult

3. Geographic Sharding

Split by location:

def get_shard_by_geo(user_location):

if user_location in ['US', 'CA', 'MX']:

return "shard_americas"

elif user_location in ['GB', 'FR', 'DE']:

return "shard_europe"

elif user_location in ['CN', 'JP', 'IN']:

return "shard_asia"

else:

return "shard_global"Pros:

- Low latency (data close to users)

- Regulatory compliance (data residency)

Cons:

- Uneven distribution

- Cross-shard queries complex

4. Directory-Based Sharding

Maintain lookup table:

# Shard directory

shard_directory = {

"user:1": "shard_1",

"user:2": "shard_1",

"user:3": "shard_2",

"user:4": "shard_3",

}

def get_shard_by_directory(user_id):

return shard_directory.get(f"user:{user_id}", "shard_default")Pros:

- Maximum flexibility

- Easy to migrate data between shards

Cons:

- Directory is single point of failure

- Additional lookup required

10.3 Challenges with Sharding

Sharding Operational Complexity: Sharding introduces these hard problems:

- Cross-shard joins: Must fetch from multiple shards and join in application (slow)

- Distributed transactions: 2PC is slow and error-prone (avoid if possible)

- Data rebalancing: Adding shards requires massive data migration (hours/days downtime)

- Hotspots: Uneven sharding key distribution causes some shards to be overloaded

- Query routing: Routing layer becomes complex and critical

1. Cross-Shard Joins

Denormalization is Necessary: With sharding, you cannot do efficient cross-shard joins. Denormalize data by storing redundant copies across shards. Accept the write overhead and potential staleness. Example: Store user_name and user_email in orders table even though they’re in users table.

Problem: Can’t join tables across different databases.

Solution: Denormalize data or handle joins at application level:

def get_user_with_orders(user_id):

# Get shard for user

user_shard = get_shard(user_id)

user = user_shard.query(f"SELECT * FROM users WHERE id = {user_id}")

# Orders might be on different shard

order_shard = get_shard_for_orders(user_id)

orders = order_shard.query(f"SELECT * FROM orders WHERE user_id = {user_id}")

# Combine in application

user['orders'] = orders

return user2. Distributed Transactions

2PC Performance Penalty: Two-Phase Commit (2PC) requires locking all shards and coordinating across them. In MySQL, 2PC adds 20-50% latency overhead and can deadlock if timing is wrong. Better approach: Use Saga pattern - break transaction into compensating transactions, or ensure shard key is included in all transactions so data stays on one shard.

Problem: Transaction spanning multiple shards.

Solution: Two-Phase Commit (2PC) or avoid distributed transactions:

def transfer_money(from_user_id, to_user_id, amount):

from_shard = get_shard(from_user_id)

to_shard = get_shard(to_user_id)

if from_shard == to_shard:

# Same shard - normal transaction

with from_shard.transaction():

from_shard.execute(f"UPDATE accounts SET balance = balance - {amount} WHERE user_id = {from_user_id}")

to_shard.execute(f"UPDATE accounts SET balance = balance + {amount} WHERE user_id = {to_user_id}")

else:

# Different shards - use 2PC or saga pattern

# (More complex - see distributed transactions section)

pass3. Rebalancing

Problem: Adding/removing shards requires data migration.

Solution: Consistent hashing or careful planning:

def rebalance_shards(old_shards, new_shards):

# Calculate which data needs to move

for key in get_all_keys():

old_shard = hash(key) % old_shards

new_shard = hash(key) % new_shards

if old_shard != new_shard:

# Migrate data

data = get_data_from_shard(old_shard, key)

write_data_to_shard(new_shard, key, data)

delete_data_from_shard(old_shard, key)10.4 Sharding Architecture

This is Part 1 of the comprehensive guide. The document will continue with remaining sections covering Database Replication, Distributed Systems, Design Patterns, Real-World System Designs (Twitter, Google Maps, Key-Value Store, etc.), Case Studies, and extensive references.

Would you like me to continue with the next sections?

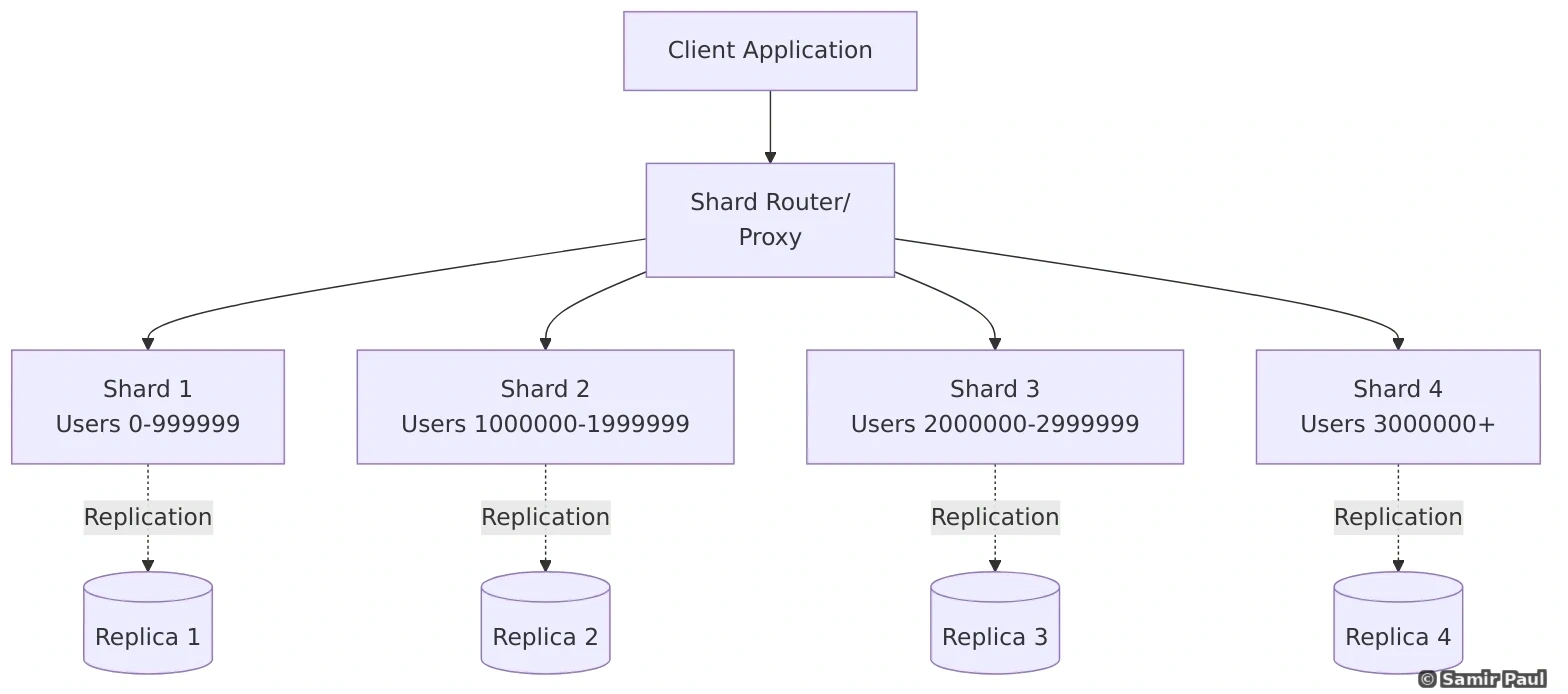

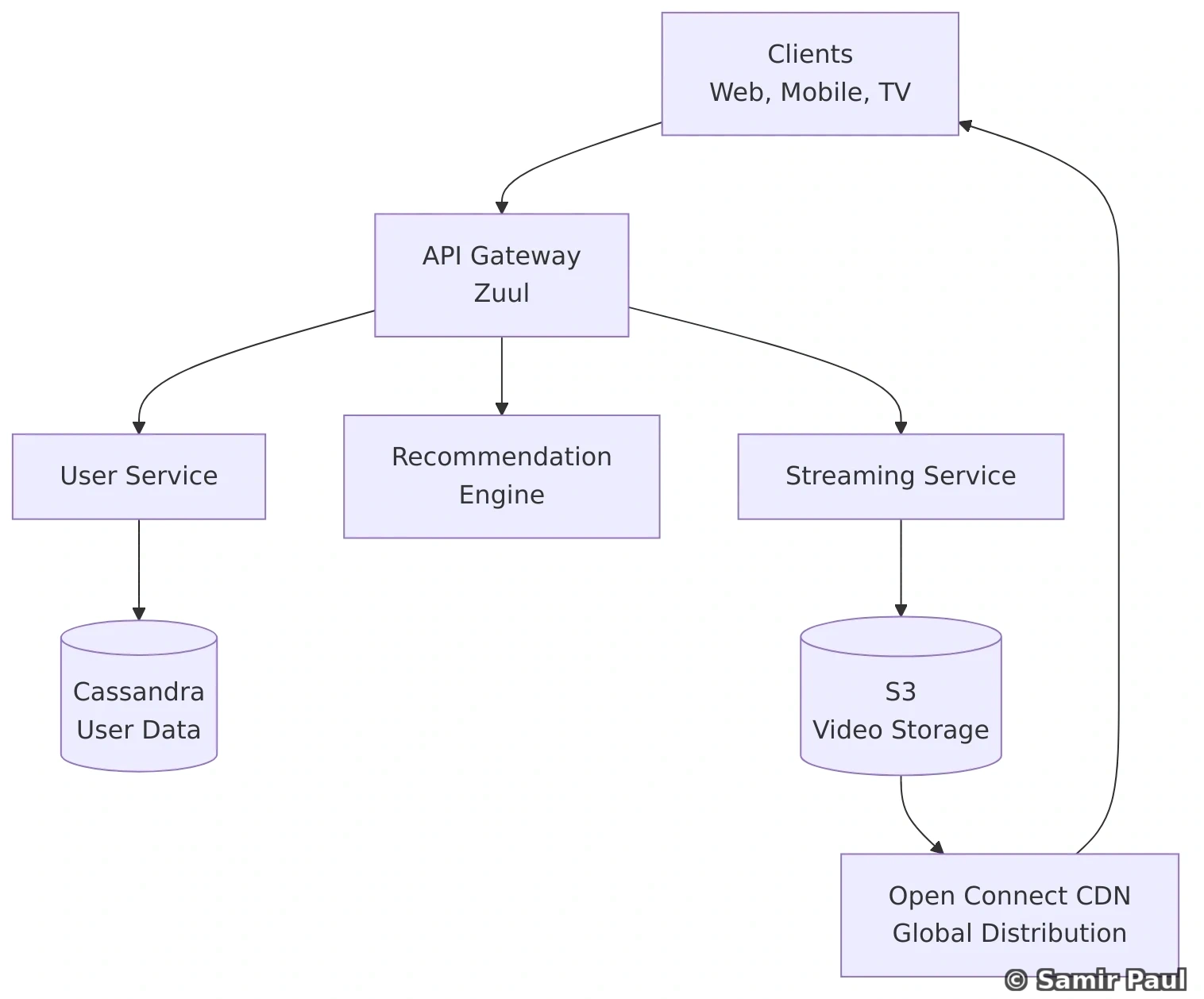

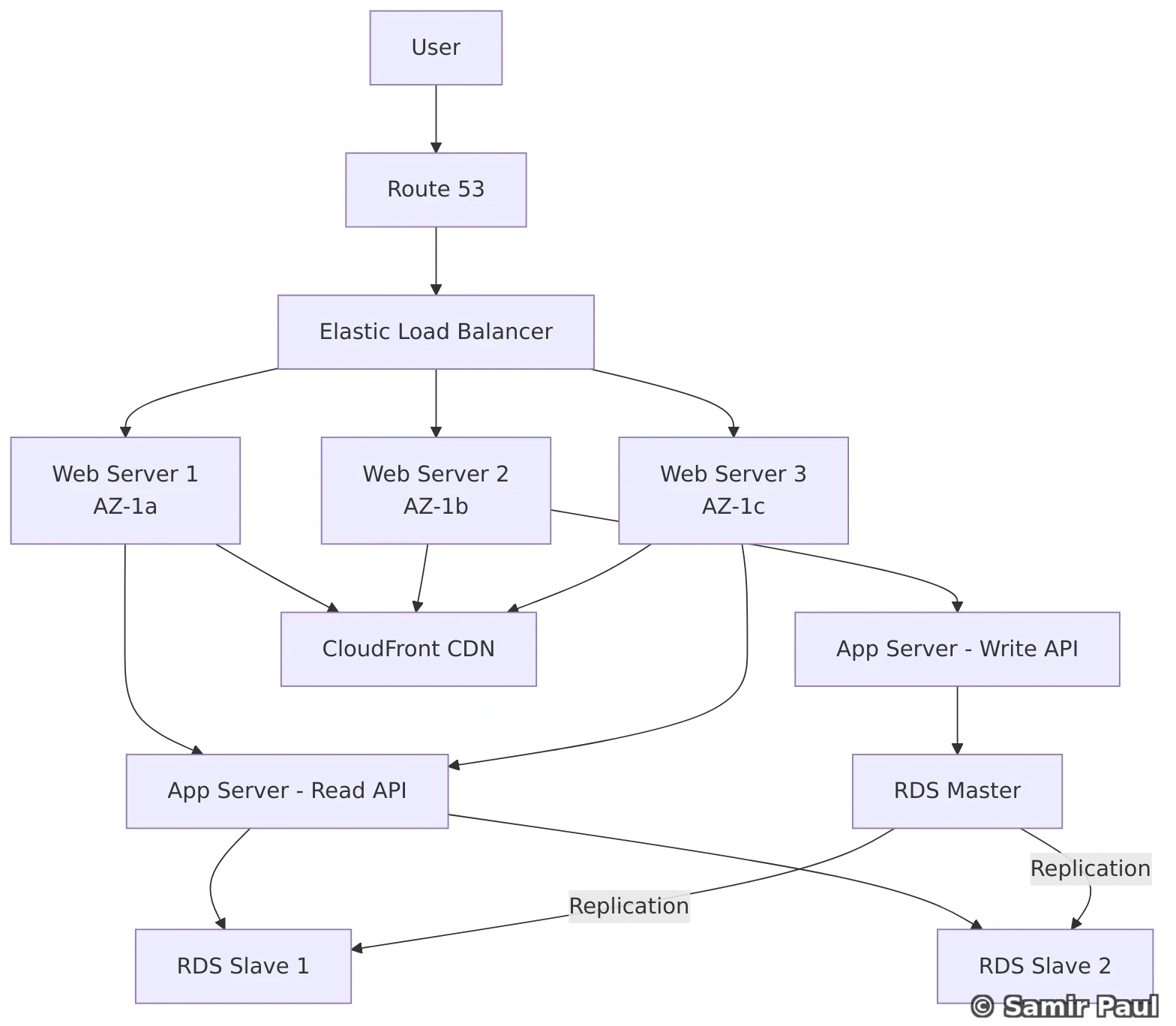

11. Database Replication

Replication Benefits: Database replication is the easiest way to scale read traffic. With 3 read replicas, you can handle 3x the read load. Replication also provides data redundancy (if master fails, promote a replica) and enables running analytics/backups on replicas without impacting production.

Database replication is the process of copying data from one database to another to ensure redundancy, improve availability, and enhance read performance.

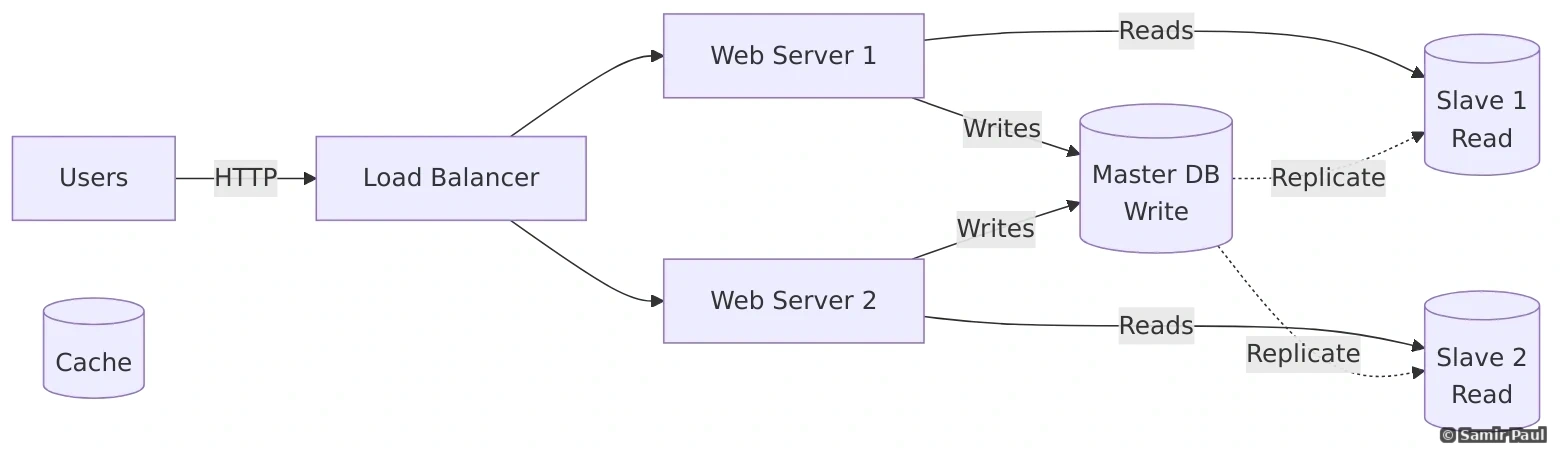

11.1 Master-Slave Replication

The most common replication pattern:

How it works:

- All write operations go to the master database

- Master propagates changes to slave databases

- Read operations are distributed across slave databases

- Improves read performance through load distribution

Advantages:

- Better read performance (distribute reads across multiple servers)

- Data backup (slaves serve as backups)

- Analytics without impacting production (run reports on slaves)

- High availability (promote slave if master fails)

Disadvantages:

- Replication lag (slaves might be slightly behind master)

- Single point of failure for writes (only one master)

- Complexity in failover scenarios

Replication Lag Problem: Replication is usually asynchronous - slaves can be seconds or even minutes behind the master. If a user writes data and immediately reads from a replica, they might not see their own write! Solutions: (1) Read-after-write consistency (read from master for short time after write), (2) Sticky sessions (always read from same replica), or (3) Use timestamps/version numbers to detect stale reads.

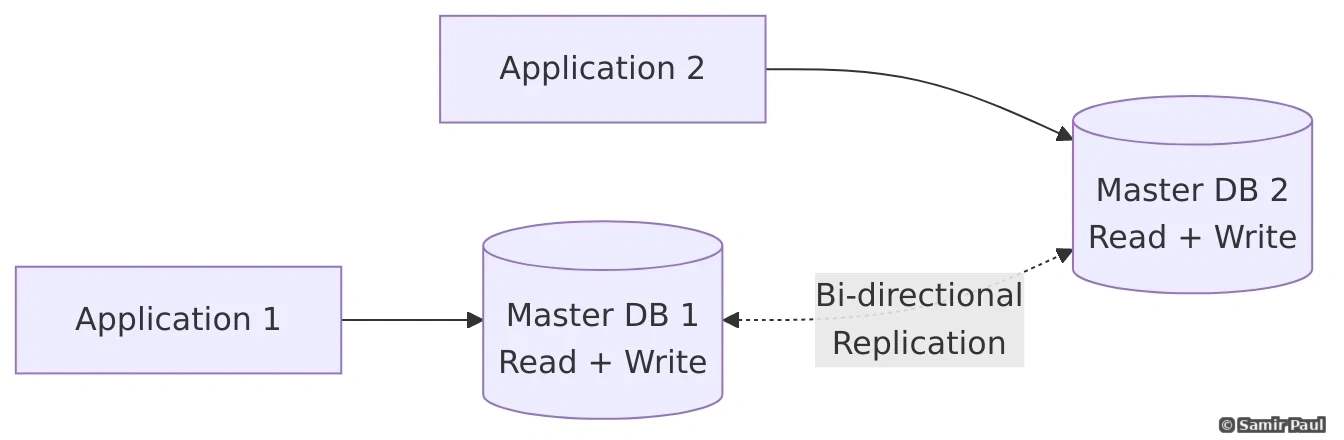

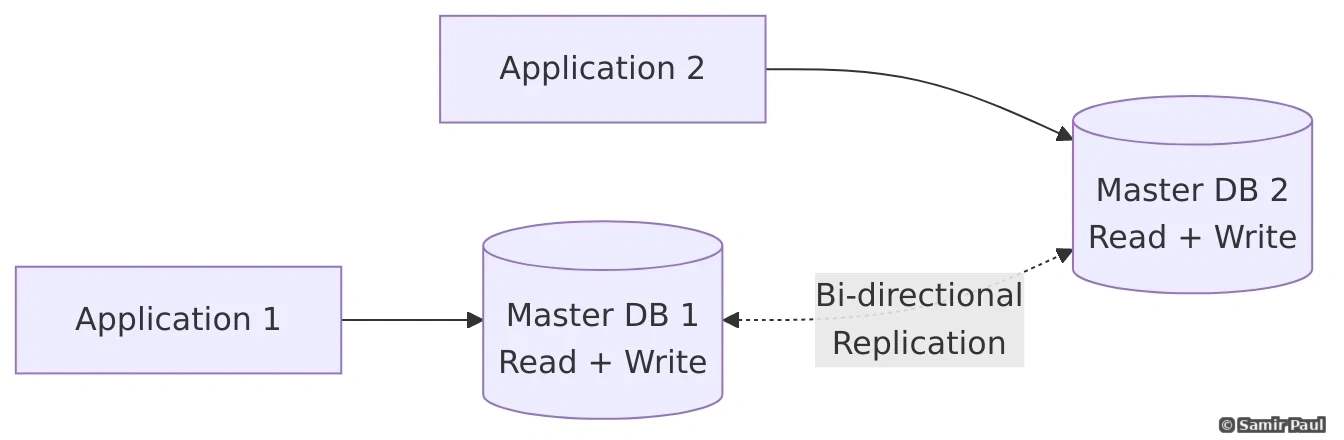

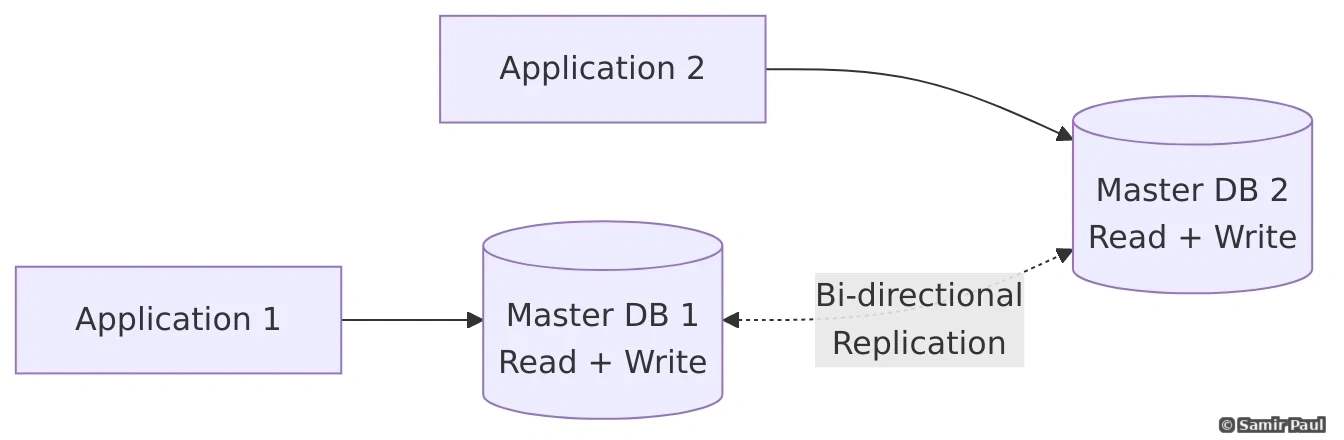

11.2 Master-Master Replication

Both databases accept writes and sync with each other:

Advantages:

- No single point of failure for writes

- Better write performance (distribute writes)

- Lower latency for geographically distributed systems

Disadvantages:

- Complex conflict resolution

- Potential for inconsistency

- More difficult to manage

Multi-Master Conflicts: With master-master replication, two masters can accept conflicting writes. Example: User A updates email to “a@example.com” on Master 1, User B updates same user’s email to “b@example.com” on Master 2. Which wins? Last-Write-Wins (LWW) is simple but can lose data. Version vectors detect conflicts but require application-level resolution. Avoid multi-master if possible.

Conflict Resolution Strategies:

# Last Write Wins (LWW)

def resolve_conflict_lww(value1, value2):

"""

Choose the value with the latest timestamp

"""

if value1.timestamp > value2.timestamp:

return value1

return value2

# Version Vector

class VersionVector:

def __init__(self):

self.vector = {}

def increment(self, node_id):

self.vector[node_id] = self.vector.get(node_id, 0) + 1

def merge(self, other):

"""Merge two version vectors"""

for node_id, version in other.vector.items():

self.vector[node_id] = max(

self.vector.get(node_id, 0),

version

)

def compare(self, other):

"""

Returns:

- 'before' if self < other

- 'after' if self > other

- 'concurrent' if conflicting

"""

self_greater = False

other_greater = False

all_nodes = set(self.vector.keys()) | set(other.vector.keys())

for node in all_nodes:

self_ver = self.vector.get(node, 0)

other_ver = other.vector.get(node, 0)

if self_ver > other_ver:

self_greater = True

elif other_ver > self_ver:

other_greater = True

if self_greater and not other_greater:

return 'after'

elif other_greater and not self_greater:

return 'before'

else:

return 'concurrent'11.3 Replication Methods

Synchronous Replication:

- Master waits for slave acknowledgment before confirming write

- Strong consistency

- Higher latency

- Used when data consistency is critical

def synchronous_write(data):

# Write to master

master.write(data)

# Wait for all slaves to acknowledge

for slave in slaves:

slave.write(data)

slave.acknowledge()

return "Write successful"Asynchronous Replication:

- Master confirms write immediately

- Slaves updated eventually

- Lower latency

- Risk of data loss if master fails

def asynchronous_write(data):

# Write to master

master.write(data)

# Queue replication (don't wait)

for slave in slaves:

replication_queue.enqueue({

'slave': slave,

'data': data

})

return "Write successful"Semi-Synchronous Replication:

- Master waits for at least one slave

- Balance between consistency and performance

12. Distributed Systems Concepts

12.1 What is a Distributed System?

Characteristics:

- Multiple autonomous computers: Independent nodes with their own CPU, memory, and storage

- Connected through a network: Communication via TCP/IP, message queues, or RPC

- Coordinate actions through message passing: No shared memory between nodes

- No shared memory: Each node has isolated memory space

- Failures are partial: Some components can fail while others continue working

Benefits:

- Scalability: Handle more load by adding machines (horizontal scaling)

- Reliability: System continues working even if components fail

- Performance: Process data closer to users (reduced latency)

- Availability: No single point of failure with proper design

Challenges:

- Network is unreliable: Messages can be lost or delayed

- Partial failures: Some components fail while others work

- Synchronization: Coordinating actions is difficult without shared memory

- Consistency: Keeping data consistent across nodes is complex

12.2 Fallacies of Distributed Computing

Eight assumptions developers wrongly make about distributed systems:

The network is reliable

- Reality: Networks fail, packets are lost, connections drop

Latency is zero

- Reality: Network calls are slow (milliseconds to seconds)

Bandwidth is infinite

- Reality: Network capacity is limited and shared

The network is secure

- Reality: Networks can be compromised, data can be intercepted

Topology doesn’t change

- Reality: Network topology changes frequently (servers added/removed, DNS changes)

There is one administrator

- Reality: Multiple teams manage different parts of the system

Transport cost is zero

- Reality: Serialization and network transfer have CPU and bandwidth costs

The network is homogeneous

- Reality: Different protocols, formats, and vendors across the network

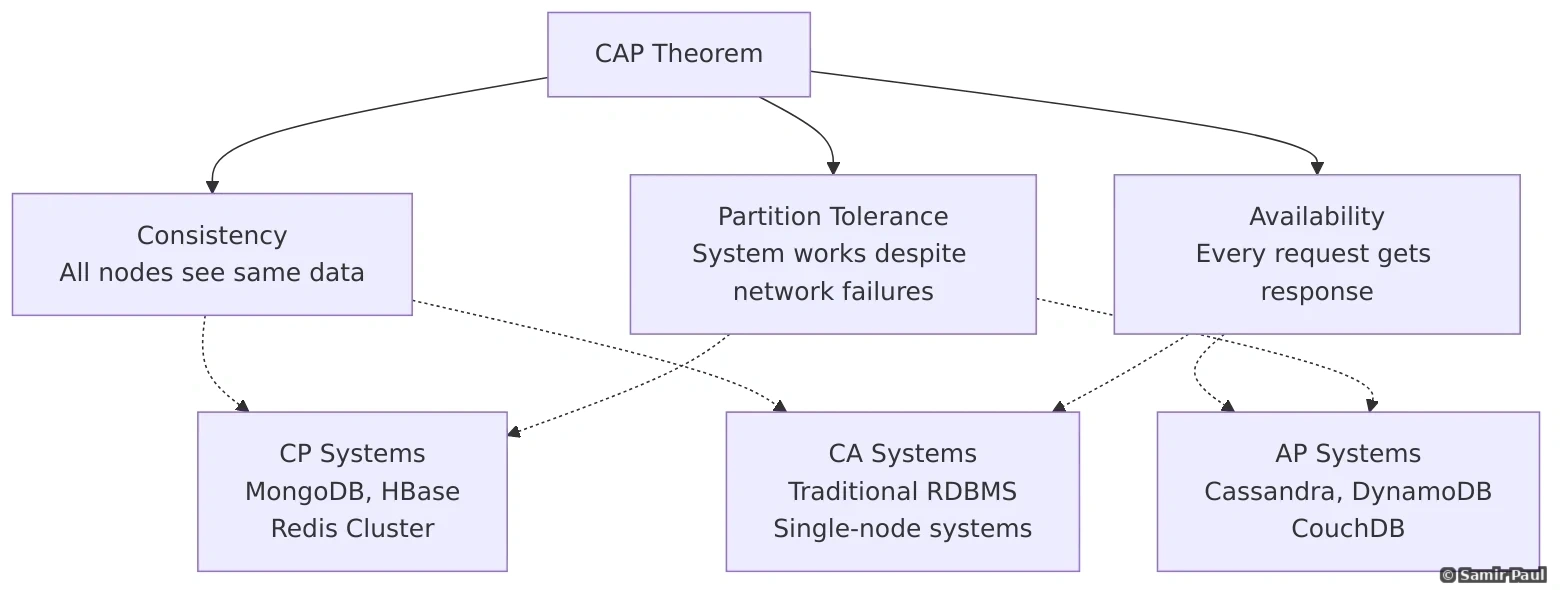

13. CAP Theorem and Consistency Models

13.1 CAP Theorem

The CAP theorem states that a distributed system can provide at most TWO of the following three guarantees simultaneously:

- C (Consistency): All nodes see the same data at the same time

- A (Availability): Every request receives a response (success or failure)

- P (Partition Tolerance): System continues operating despite network partitions

In Reality:

- Network partitions will happen (P is mandatory)

- Must choose between C and A during partition

- When partition heals, system needs reconciliation strategy

Partition Tolerance is Mandatory: In the real world, network partitions WILL happen - switches fail, network cables get cut, data centers lose connectivity. You cannot choose “CA” because you cannot avoid network partitions. The real choice is: During a partition, do you want Consistency (CP) or Availability (AP)?

CP Systems (Consistency + Partition Tolerance):

- Wait for partition to heal

- Return errors during partition

- Example: Banking systems, MongoDB (with majority write concern)

CP System Trade-off: CP systems (like banking systems) choose to be unavailable during network partitions rather than show inconsistent data. If you can’t reach a quorum of nodes, writes fail. This prevents showing incorrect account balances but means some operations fail during network issues.

AP Systems (Availability + Partition Tolerance):

- Always accept reads/writes

- Resolve conflicts later

- Example: Social media feeds, DNS, Cassandra

AP System Trade-off: AP systems (like social media feeds) stay available during network partitions but accept eventual consistency. You might temporarily see old data or conflicting updates, but the system never returns errors. Facebook chooses AP - it’s better to show a slightly stale news feed than to be completely unavailable.

13.2 Consistency Models

Consistency Model Selection: Choose based on your use case:

- Strong Consistency: Financial transactions, inventory systems (can’t sell same item twice)

- Eventual Consistency: Social media feeds, analytics, caching (temporary staleness is acceptable)

- Read-Your-Writes: User profiles, settings (users must see their own changes immediately)

- Causal Consistency: Comment threads, chat (replies must appear after original message)

Strong Consistency:

- After a write, all reads see that value

- Highest consistency guarantee

- Higher latency

def strong_consistency_read():

# Read from master or wait for replication

return master.read()Eventual Consistency:

- If no new updates, eventually all reads return same value

- Lower latency

- Used in AP systems

def eventual_consistency_read():

# Read from any replica

# Might return stale data

return random.choice(replicas).read()Read-Your-Writes Consistency:

- Users see their own writes immediately

- Others might see stale data

session_writes = {}

def write(user_id, data):

master.write(data)

session_writes[user_id] = time.now()

def read(user_id):

if user_id in session_writes:

# Read from master for this user

return master.read()

else:

# Can read from replica for others

return replica.read()Monotonic Reads:

- If user reads value A, subsequent reads won’t return older values

user_last_read_time = {}

def monotonic_read(user_id):

last_time = user_last_read_time.get(user_id, 0)

# Only read from replicas caught up to last_time

valid_replicas = [

r for r in replicas

if r.last_sync_time >= last_time

]

result = random.choice(valid_replicas).read()

user_last_read_time[user_id] = time.now()

return resultCausal Consistency:

- Related operations are seen in correct order

- Independent operations can be seen in any order

13.3 BASE Properties

Alternative to ACID for distributed systems:

- Basically Available: System appears to work most of the time

- Soft state: State may change without input (due to eventual consistency)

- Eventual consistency: System becomes consistent over time

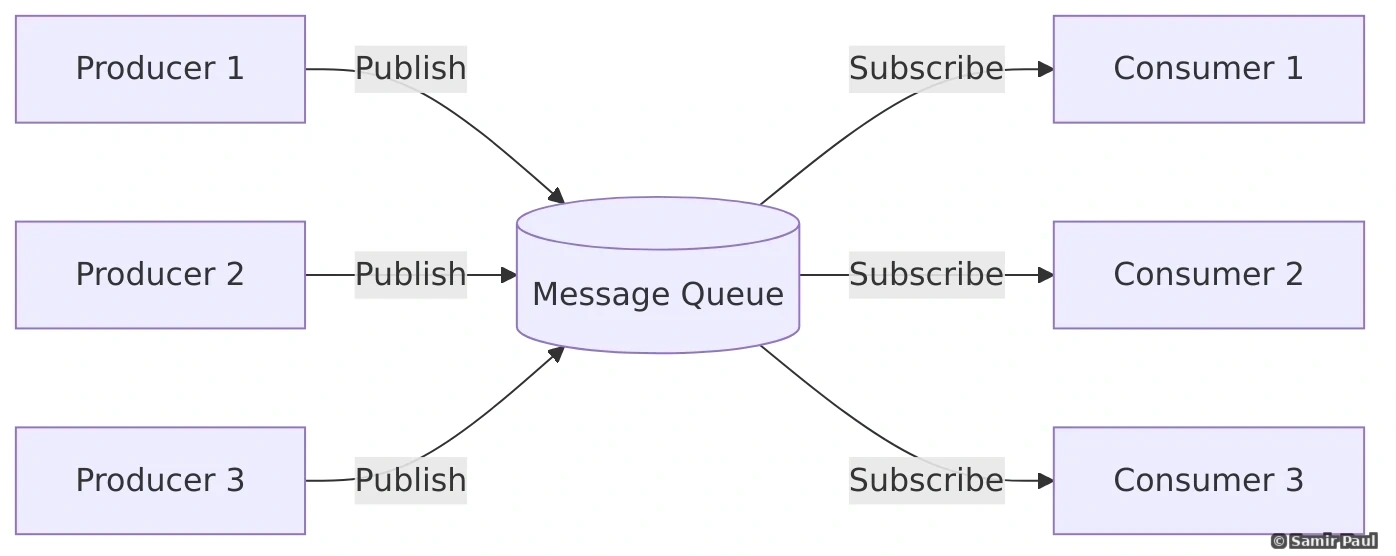

14. Message Queues and Event-Driven Architecture

14.1 Why Message Queues?

Message queues enable asynchronous communication between services with these benefits:

1. Decoupling: Services don’t need to know about each other directly

- Producers and consumers are independent

- Can evolve separately without coordination

2. Reliability: Messages persisted until successfully processed

- Messages won’t be lost if consumer is down

- Retry mechanisms for failed processing

3. Scalability: Add more consumers to handle increased load

- Horizontal scaling of message processing

- Load distribution across multiple consumers

4. Buffering: Handle traffic spikes gracefully

- Queue absorbs burst traffic

- Consumers process at sustainable rate

14.2 Message Queue Patterns

1. Point-to-Point (Queue)

Each message consumed by exactly one consumer:

class MessageQueue:

def __init__(self):

self.queue = []

self.lock = threading.Lock()

def send(self, message):

with self.lock:

self.queue.append(message)

def receive(self):

with self.lock:

if self.queue:

return self.queue.pop(0)

return None

# Usage

queue = MessageQueue()

# Producer

queue.send({"order_id": 123, "action": "process"})

# Consumer

message = queue.receive()

if message:

process_order(message)2. Publish-Subscribe (Topic)

Each message consumed by all subscribers:

class PubSubTopic:

def __init__(self):

self.subscribers = []

self.messages = []

def subscribe(self, subscriber):

self.subscribers.append(subscriber)

def publish(self, message):

for subscriber in self.subscribers:

subscriber.receive(message)

# Usage

topic = PubSubTopic()

# Subscribers

email_service = EmailService()

sms_service = SMSService()

push_service = PushService()

topic.subscribe(email_service)

topic.subscribe(sms_service)

topic.subscribe(push_service)

# Publisher

topic.publish({"event": "order_placed", "user_id": 123})

# All three services receive the message14.3 Popular Message Queue Systems

RabbitMQ:

- Feature-rich

- Complex routing

- Good for traditional message queueing

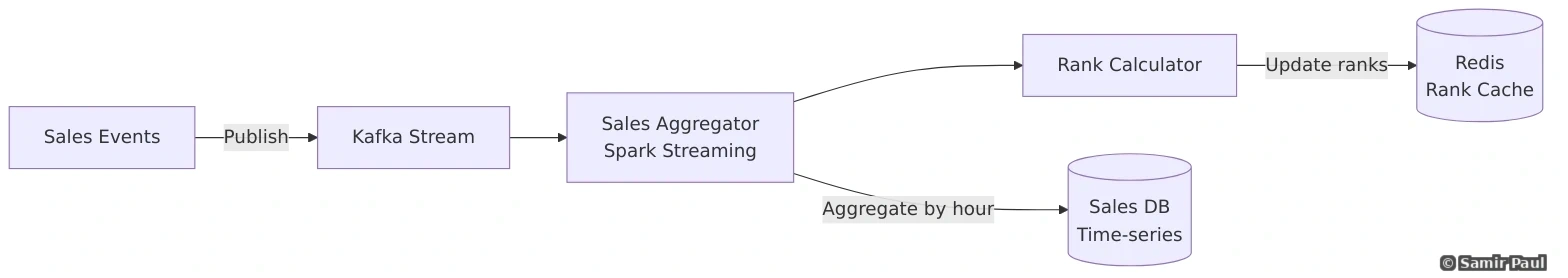

Apache Kafka:

- High throughput

- Log-based

- Great for event streaming

- Stores messages for replay

Amazon SQS:

- Fully managed

- Simple to use

- Good for AWS ecosystem

Redis Pub/Sub:

- Very fast (in-memory)

- Simple pub/sub

- No message persistence

14.4 Event-Driven Architecture Example

# Event Bus

class EventBus:

def __init__(self):

self.handlers = {}

def subscribe(self, event_type, handler):

if event_type not in self.handlers:

self.handlers[event_type] = []

self.handlers[event_type].append(handler)

def publish(self, event):

event_type = event.get('type')

if event_type in self.handlers:

for handler in self.handlers[event_type]:

try:

handler(event)

except Exception as e:

print(f"Handler error: {e}")

# Services

def send_confirmation_email(event):

user_id = event['user_id']

order_id = event['order_id']

print(f"Sending email to user {user_id} for order {order_id}")

def update_inventory(event):

items = event['items']

print(f"Updating inventory for {items}")

def send_notification(event):

user_id = event['user_id']

print(f"Sending push notification to user {user_id}")

# Setup

event_bus = EventBus()

event_bus.subscribe('order_placed', send_confirmation_email)

event_bus.subscribe('order_placed', update_inventory)

event_bus.subscribe('order_placed', send_notification)

# Trigger event

event_bus.publish({

'type': 'order_placed',

'order_id': 12345,

'user_id': 789,

'items': ['item1', 'item2']

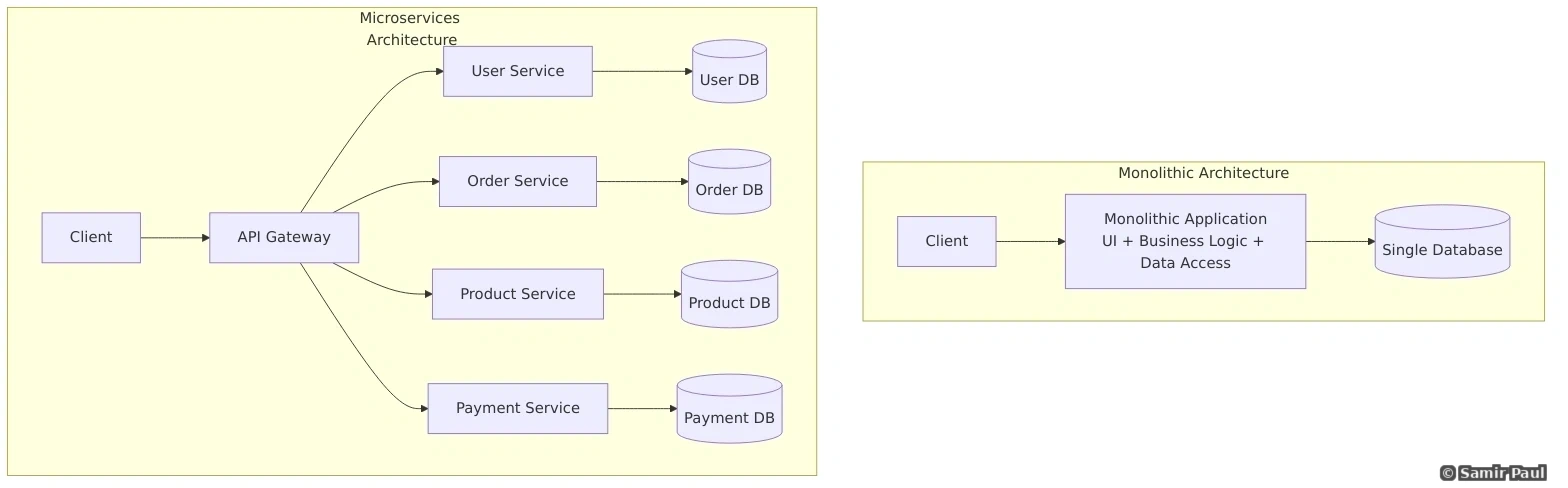

})15. Microservices Architecture

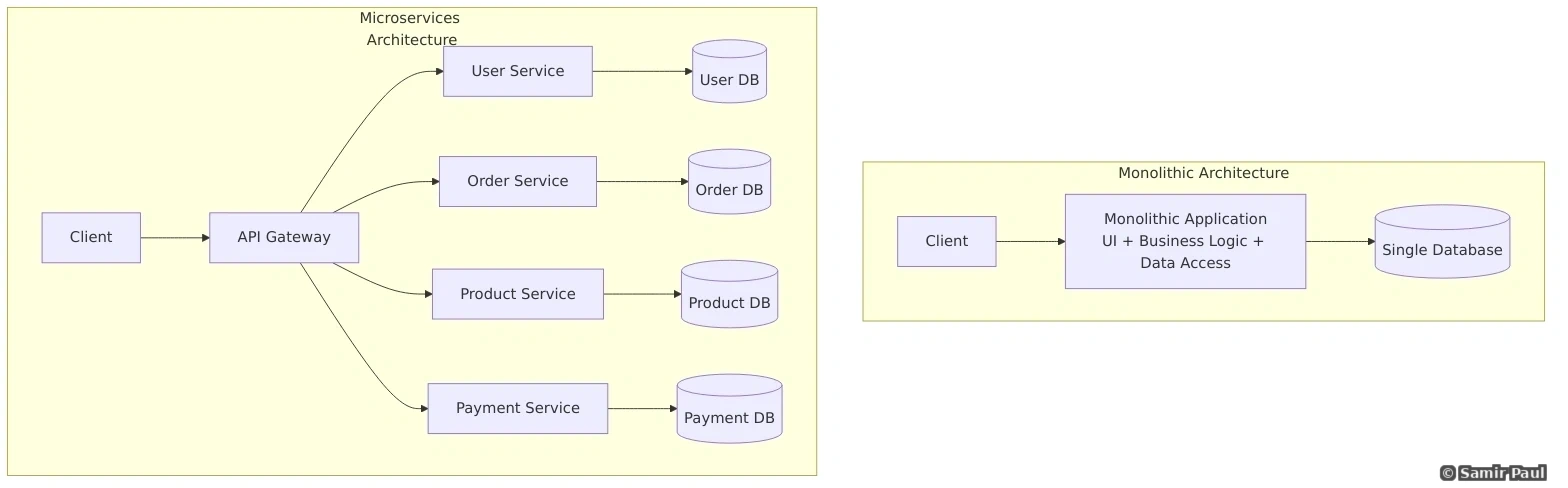

15.1 Monolith vs Microservices

Monolith Advantages:

- Simple to develop and test

- Easy deployment

- Good for small teams

- Lower latency (no network calls)

Monolith Disadvantages:

- Difficult to scale

- Long deployment times

- One bug can bring down entire system

- Hard to adopt new technologies

Microservices Advantages:

- Independent scaling

- Technology diversity

- Fault isolation

- Faster deployment

- Team autonomy

Microservices Disadvantages:

- Increased complexity

- Network latency

- Data consistency challenges

- Testing is harder

- Operational overhead

15.2 Microservices Best Practices

1. Single Responsibility

Each service should do one thing well:

Good:

- UserService: Manages users

- OrderService: Manages orders

- EmailService: Sends emails

Bad:

- UserOrderEmailService: Does everything2. Database per Service

Each service has its own database:

# User Service

class UserService:

def __init__(self):

self.db = UserDatabase()

def create_user(self, data):

return self.db.insert(data)

def get_user(self, user_id):

return self.db.query(user_id)

# Order Service

class OrderService:

def __init__(self):

self.db = OrderDatabase() # Different database

self.user_service = UserServiceClient()

def create_order(self, user_id, items):

# Get user info via API (not direct DB access)

user = self.user_service.get_user(user_id)

order = {

'user_id': user_id,

'items': items,

'total': self.calculate_total(items)

}

return self.db.insert(order)3. API Gateway Pattern

Single entry point for all clients:

class APIGateway:

def __init__(self):

self.user_service = UserServiceClient()

self.order_service = OrderServiceClient()

self.product_service = ProductServiceClient()

def get_user_dashboard(self, user_id):

# Aggregate data from multiple services

user = self.user_service.get_user(user_id)

orders = self.order_service.get_user_orders(user_id)

recommendations = self.product_service.get_recommendations(user_id)

return {

'user': user,

'recent_orders': orders[:5],

'recommended_products': recommendations

}4. Circuit Breaker Pattern

Prevent cascading failures:

from enum import Enum

import time

class CircuitState(Enum):

CLOSED = "closed" # Normal operation

OPEN = "open" # Failing, reject requests

HALF_OPEN = "half_open" # Testing if recovered

class CircuitBreaker:

def __init__(self, failure_threshold=5, timeout=60):

self.failure_count = 0

self.failure_threshold = failure_threshold

self.timeout = timeout

self.state = CircuitState.CLOSED

self.last_failure_time = None

def call(self, func, *args, **kwargs):

if self.state == CircuitState.OPEN:

if time.time() - self.last_failure_time > self.timeout:

self.state = CircuitState.HALF_OPEN

else:

raise Exception("Circuit breaker is OPEN")

try:

result = func(*args, **kwargs)

self.on_success()

return result

except Exception as e:

self.on_failure()

raise e

def on_success(self):

self.failure_count = 0

self.state = CircuitState.CLOSED

def on_failure(self):

self.failure_count += 1

self.last_failure_time = time.time()

if self.failure_count >= self.failure_threshold:

self.state = CircuitState.OPEN

# Usage

payment_circuit = CircuitBreaker(failure_threshold=5, timeout=60)

def process_payment(amount):

return payment_circuit.call(payment_service.charge, amount)This completes Part II. The document continues with Part III covering Design Patterns, Real-World System Designs, and Case Studies.

25. Scaling from Zero to Million Users

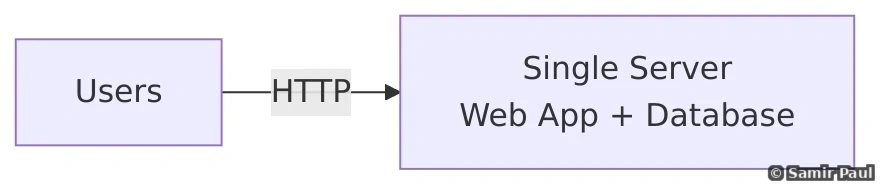

25.1 Stage 1: Single Server

Architecture:

- All code in one place

- Single database

- Simple deployment

- No external services

Configuration:

- 1 web server

- 1 database

- ~1,000-5,000 concurrent users

Issues emerge when:

- Single point of failure

- Can’t scale beyond server capacity

- Database bottleneck

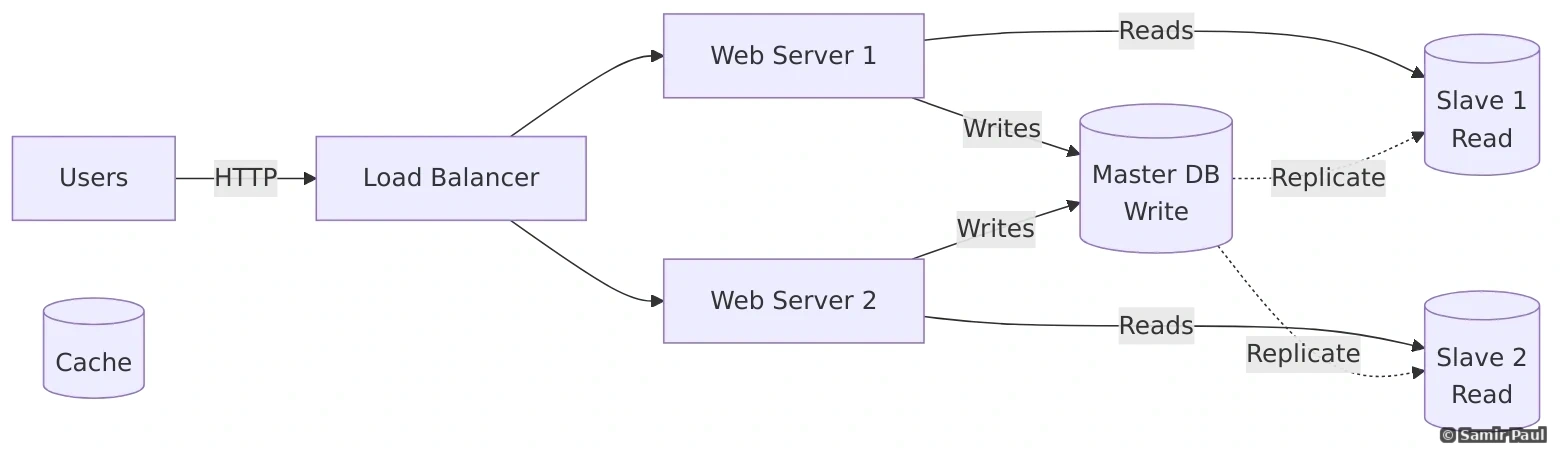

25.2 Stage 2: Separate Web and Database Tiers

Changes:

- Add load balancer (distribute traffic)

- Multiple web servers (can scale horizontally)

- Separate database (can upgrade independently)

Capacity:

- ~100,000 concurrent users

- Load balancer: 1-2 Gbps throughput

- Web servers: 5-10 servers

- Database: Single instance (might be beefy)

Implementation Example:

# Load Balancer (Round Robin)

class LoadBalancer:

def __init__(self, servers):

self.servers = servers

self.current = 0

def route_request(self, request):

server = self.servers[self.current]

self.current = (self.current + 1) % len(self.servers)

return server.handle(request)

# Web Servers (Stateless)

class WebServer:

def __init__(self, db_connection):

self.db = db_connection

def handle(self, request):

if request.path == '/users/123':

user = self.db.query(f"SELECT * FROM users WHERE id = 123")

return json.dumps(user)

# Setup

servers = [WebServer(db_connection), WebServer(db_connection)]

lb = LoadBalancer(servers)25.3 Stage 3: Add Caching Layer

Benefits:

- Reduce database load

- Faster response times

- Better user experience

Implementation:

from functools import wraps

import time

class CachingWebServer:

def __init__(self, db, cache):

self.db = db

self.cache = cache

def get_user(self, user_id):

# Check cache first

cache_key = f"user:{user_id}"

cached_user = self.cache.get(cache_key)

if cached_user:

return cached_user

# Cache miss - query database

user = self.db.query(f"SELECT * FROM users WHERE id = {user_id}")

# Store in cache for 1 hour

self.cache.set(cache_key, user, ttl=3600)

return user25.4 Stage 4: Add CDN for Static Content

CDN Benefits:

- Serve content from location closest to user

- Reduce origin server load

- Faster page loads globally

- Lower bandwidth costs

25.5 Stage 5: Database Replication

Benefits:

- Master for writes

- Slaves for reads

- Better read performance

- High availability

25.6 Stage 6: Database Sharding

When to shard:

- Database size > 1TB

- Single master at capacity

- Need to scale beyond vertical limits

Sharding key considerations:

- Even distribution

- Immutable after shard creation

- Avoid hot keys

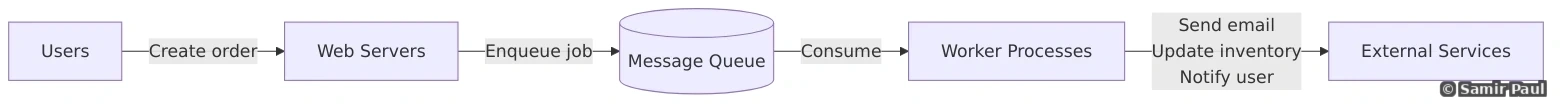

25.7 Stage 7: Message Queue for Async Processing

Benefits:

- Non-blocking operations

- Decouple producers and consumers

- Scale workers independently

- Handle traffic spikes

19. Unique ID Generation in Distributed Systems

Generating globally unique IDs is critical for distributed systems.

19.1 Requirements

Unique IDs must be:

- Unique across all servers

- Sortable (roughly ordered by time)

- Decentralized (no single point of failure)

- Fast generation

- Fit in 64 bits (for efficiency)

19.2 Snowflake ID Algorithm

Twitter’s Snowflake algorithm is industry standard:

| 1 | 41 | 10 | 12 |

|Unused |Timestamp |Machine ID |Sequence|

|1 bit |ms since |Data center|Counter |

| |epoch |+ Worker | |Structure (64 bits):

- Bit 0: Unused (ensures positive numbers)

- Bits 1-41: Timestamp (42 bits, milliseconds since epoch)

- Bits 42-51: Machine ID (10 bits, 1024 machines)

- Bits 52-63: Sequence number (12 bits, 4096 IDs per ms per machine)

Capacity:

- 2^41 timestamps ≈ 69 years

- 2^10 machines = 1,024 machines

- 2^12 sequences = 4,096 IDs per millisecond per machine

- Total: ~4 million IDs per second per machine

Implementation:

import time

import threading

class SnowflakeIDGenerator:

EPOCH = 1288834974657 # Twitter Epoch

WORKER_ID_BITS = 10

DATACENTER_ID_BITS = 5

SEQUENCE_BITS = 12

MAX_WORKER_ID = (1 << WORKER_ID_BITS) - 1

MAX_DATACENTER_ID = (1 << DATACENTER_ID_BITS) - 1

MAX_SEQUENCE = (1 << SEQUENCE_BITS) - 1

WORKER_ID_SHIFT = SEQUENCE_BITS

DATACENTER_ID_SHIFT = SEQUENCE_BITS + WORKER_ID_BITS

TIMESTAMP_SHIFT = SEQUENCE_BITS + WORKER_ID_BITS + DATACENTER_ID_BITS

def __init__(self, worker_id, datacenter_id):

if worker_id > self.MAX_WORKER_ID or worker_id < 0:

raise ValueError(f"Worker ID must be <= {self.MAX_WORKER_ID}")

if datacenter_id > self.MAX_DATACENTER_ID or datacenter_id < 0:

raise ValueError(f"Datacenter ID must be <= {self.MAX_DATACENTER_ID}")

self.worker_id = worker_id

self.datacenter_id = datacenter_id

self.sequence = 0

self.last_timestamp = -1

self.lock = threading.Lock()

def _current_timestamp(self):

return int(time.time() * 1000)

def generate_id(self):

with self.lock:

timestamp = self._current_timestamp()

if timestamp < self.last_timestamp:

raise Exception("Clock moved backwards")

if timestamp == self.last_timestamp:

self.sequence += 1

if self.sequence > self.MAX_SEQUENCE:

# Wait for next millisecond

timestamp = self._current_timestamp()

self.sequence = 0

else:

self.sequence = 0

self.last_timestamp = timestamp

id = (

(timestamp - self.EPOCH) << self.TIMESTAMP_SHIFT |

self.datacenter_id << self.DATACENTER_ID_SHIFT |

self.worker_id << self.WORKER_ID_SHIFT |

self.sequence

)

return id

# Usage

id_gen = SnowflakeIDGenerator(worker_id=1, datacenter_id=1)

for _ in range(5):

print(id_gen.generate_id())19.3 Other ID Generation Methods

UUID:

- 128 bits

- Guaranteed unique

- Not sortable

- Larger ID size

Database Auto-Increment:

- Simple

- Requires network call

- Single point of failure potential

- Exposes data volume

Timestamp + Random:

- Simple

- Sortable

- Risk of collision with multiple generators

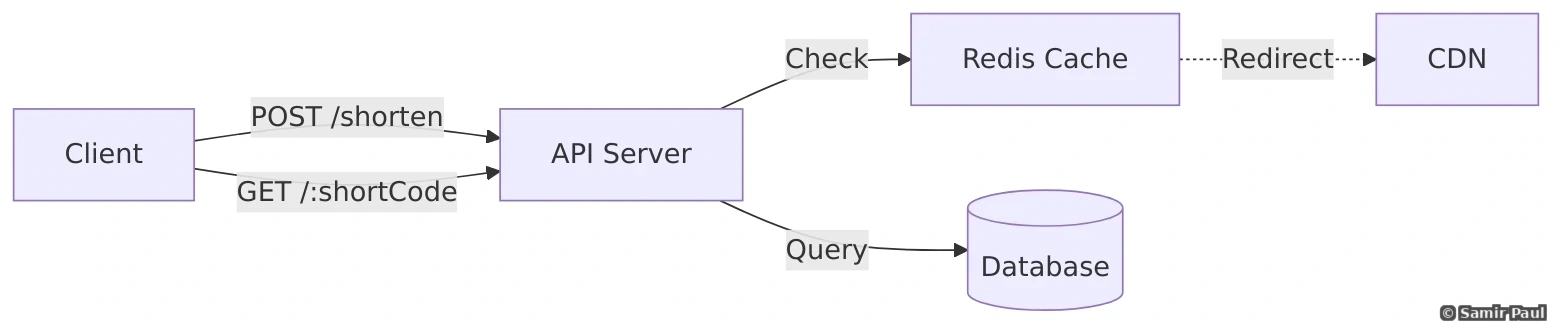

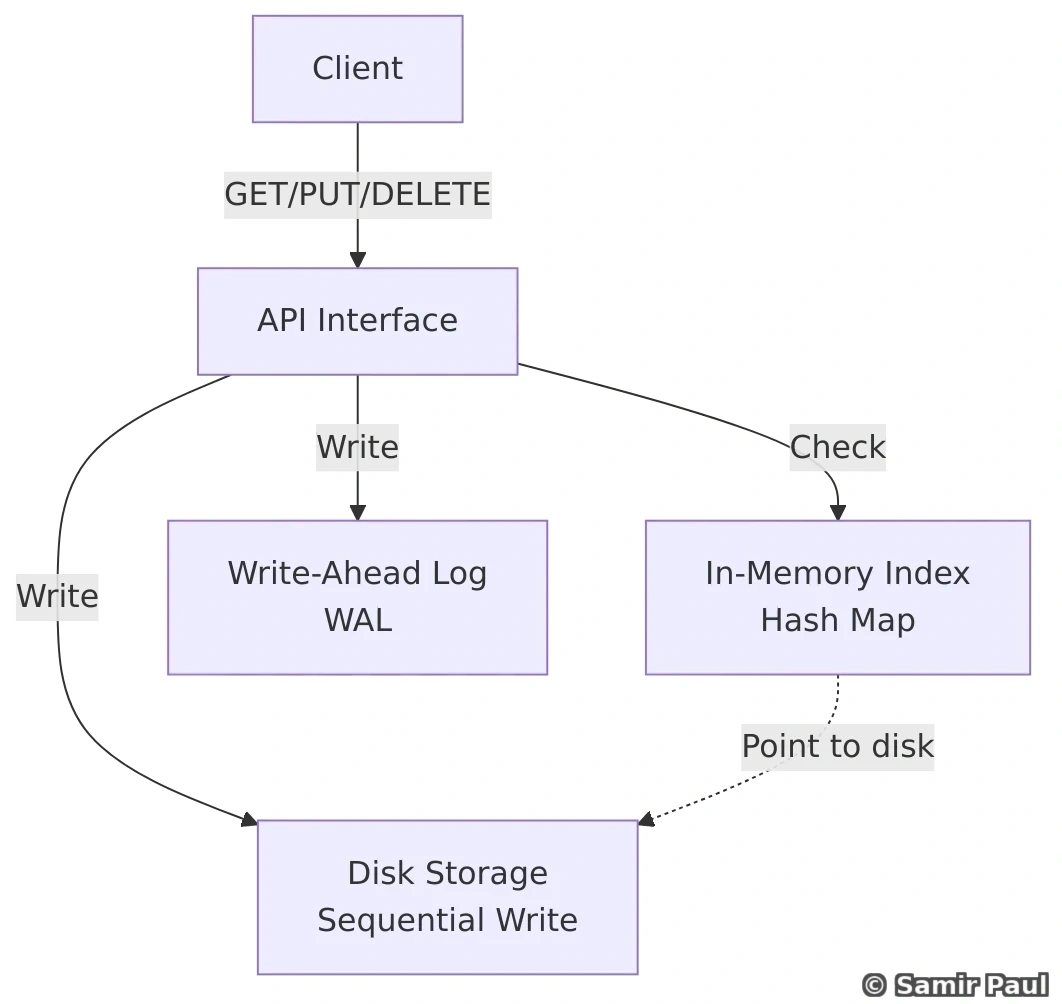

26. Design a Key-Value Store

26.1 Requirements

Functional:

- GET(key): retrieve value

- PUT(key, value): store value

- DELETE(key): remove value

Non-Functional:

- Single node (vertical scalability only)

- O(1) time complexity for all operations

- Persistent storage

- Efficient memory usage

- Hardware-aware optimization

26.2 Architecture

26.3 Implementation

import os

import json

import time

from threading import Lock

class SimpleKVStore:

def __init__(self, data_dir="./data"):

self.data_dir = data_dir

self.memory_index = {} # In-memory hash map

self.wal_file = os.path.join(data_dir, "wal.log") # Write-ahead log

self.data_file = os.path.join(data_dir, "data.db") # Actual data

self.lock = Lock()

os.makedirs(data_dir, exist_ok=True)

self._load_from_disk()

def _load_from_disk(self):

"""Load index from disk on startup"""

if os.path.exists(self.wal_file):

with open(self.wal_file, 'r') as f:

for line in f:

entry = json.loads(line.strip())

if entry['op'] == 'PUT':

self.memory_index[entry['key']] = entry['value']

elif entry['op'] == 'DELETE':

self.memory_index.pop(entry['key'], None)

def get(self, key):

with self.lock:

if key not in self.memory_index:

return None

return self.memory_index[key]

def put(self, key, value):

with self.lock:

# Write-ahead log (WAL)

entry = {'op': 'PUT', 'key': key, 'value': value, 'timestamp': time.time()}

with open(self.wal_file, 'a') as f:

f.write(json.dumps(entry) + '\n')

# Update in-memory index

self.memory_index[key] = value

# Periodic flush to disk (simplified)

if len(self.memory_index) % 1000 == 0:

self._flush_to_disk()

def delete(self, key):

with self.lock:

if key not in self.memory_index:

return False

# Write deletion to WAL

entry = {'op': 'DELETE', 'key': key, 'timestamp': time.time()}

with open(self.wal_file, 'a') as f:

f.write(json.dumps(entry) + '\n')

# Remove from memory

del self.memory_index[key]

return True

def _flush_to_disk(self):

"""Periodically flush in-memory index to disk"""

with open(self.data_file, 'w') as f:

for key, value in self.memory_index.items():

f.write(f"{key}={json.dumps(value)}\n")

# Usage

store = SimpleKVStore()

store.put("user:1", {"name": "Alice", "age": 30})

store.put("user:2", {"name": "Bob", "age": 25})

print(store.get("user:1")) # {"name": "Alice", "age": 30}

store.delete("user:2")

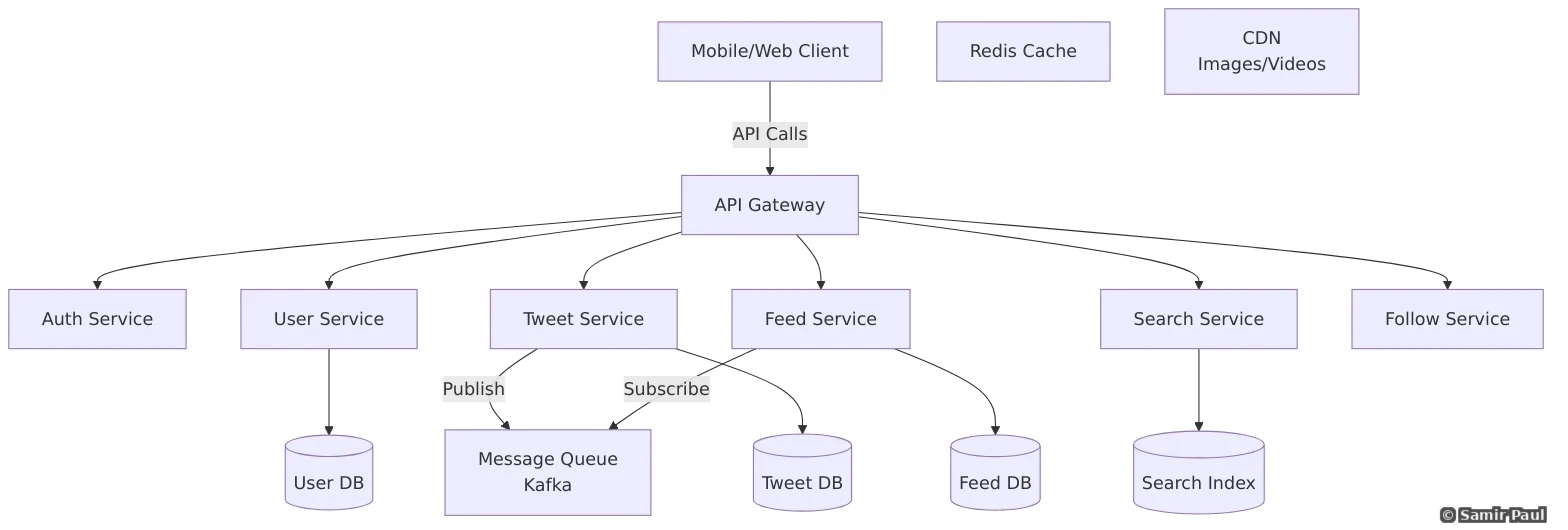

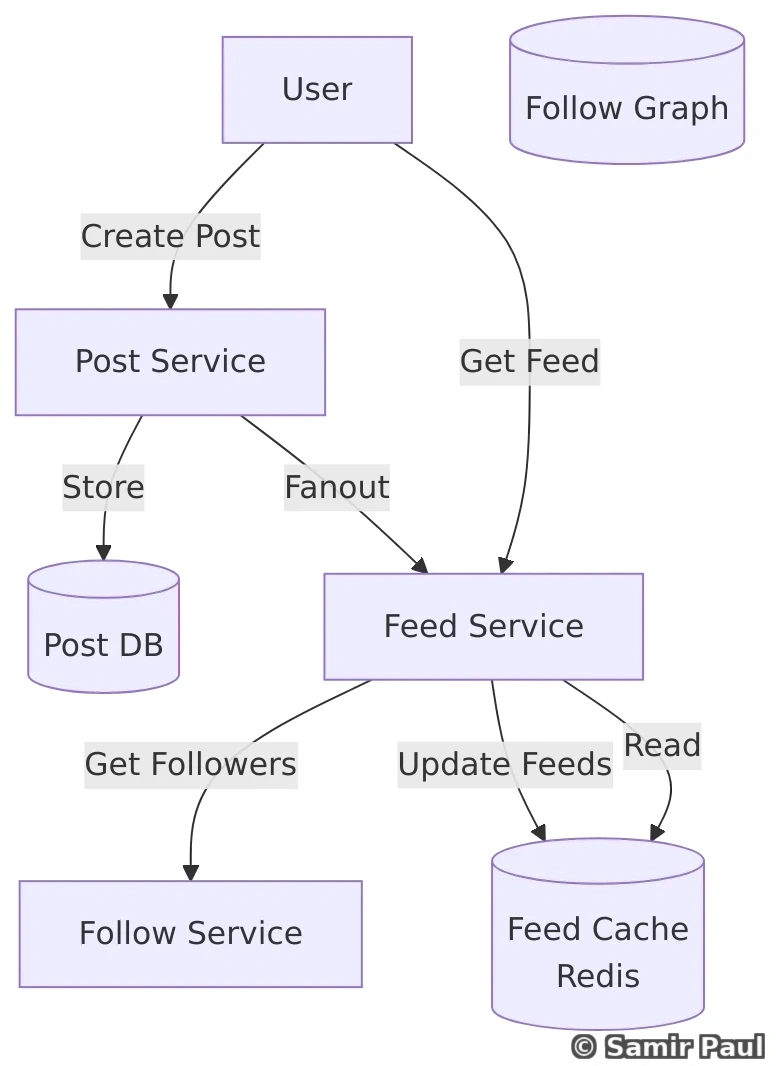

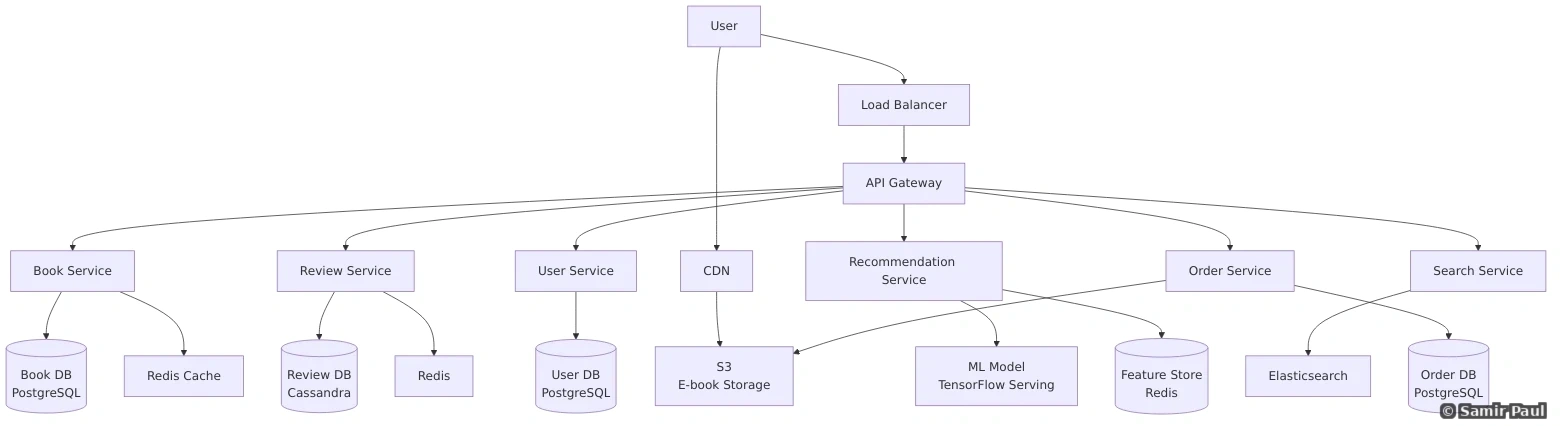

print(store.get("user:2")) # None28. Design Twitter with Microservices

28.1 High-Level Architecture

28.2 Core Services Explained

Tweet Service:

- Create, read, update, delete tweets

- Validate tweet content

- Handle media uploads

- Publish tweet events

Feed Service:

- Generate personalized feed

- Combine tweets from followed accounts

- Sort chronologically

- Cache frequently accessed feeds

Search Service:

- Full-text search on tweets

- Handle hashtags

- Trending topics

- Use Elasticsearch or similar

Follow Service:

- Manage follower/following relationships

- Social graph

- Notification for new followers

28.3 Handling the “Thundering Herd”

Challenge: When celebrity tweets, millions access simultaneously.

Solution: Fan-Out Cache

# When a celebrity tweets

def create_celebrity_tweet(user_id, content):

tweet = TweetService.create(user_id, content)

# Get all followers

followers = FollowService.get_followers(user_id)

# Pre-populate feeds for active followers

for follower_id in followers[:100000]: # Top 100K followers

feed_key = f"feed:{follower_id}"

cache.prepend(feed_key, tweet) # Add to front of feed

# Enqueue notification

notification_queue.enqueue({

'user_id': follower_id,

'event': 'new_tweet',

'from_user': user_id

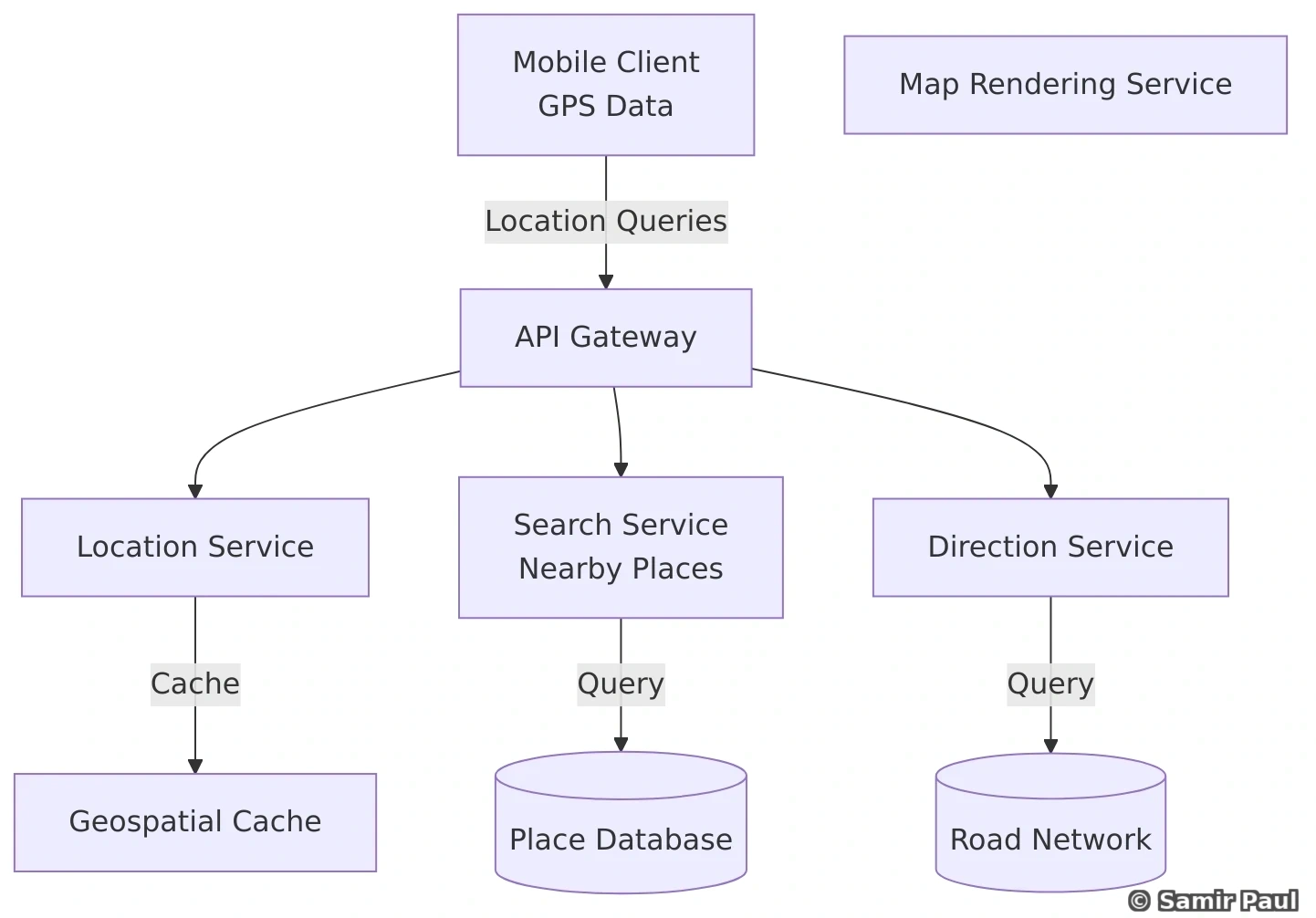

})29. Design Google Maps / Location-Based Services

29.1 Architecture Overview

29.2 Geospatial Indexing

Storing location data efficiently:

# Using Geohash for spatial indexing

def geohash_encode(latitude, longitude, precision=5):

"""

Geohash encodes lat/long into a short string

Nearby locations have similar geohashes

"""

# Simplified geohash (actual implementation is more complex)

lat_bits = bin(int((latitude + 90) / 180 * (2 ** 20)))[2:].zfill(20)

lon_bits = bin(int((longitude + 180) / 360 * (2 ** 20)))[2:].zfill(20)

# Interleave bits

interleaved = ''.join(lon_bits[i] + lat_bits[i] for i in range(20))

# Convert to base32

base32 = "0123456789bcdefghjkmnpqrstuvwxyz"

geohash = ''

for i in range(0, len(interleaved), 5):

chunk = interleaved[i:i+5]

geohash += base32[int(chunk, 2)]

return geohash[:precision]

# Nearby search using geohash

def find_nearby_places(latitude, longitude, radius_km=1):

"""Find all places within radius"""

center_geohash = geohash_encode(latitude, longitude, precision=7)

# Get geohashes for surrounding cells

nearby_geohashes = get_neighbors(center_geohash)

places = []

for geohash in nearby_geohashes:

# Query database for places with this geohash prefix

db_places = PlaceDB.query(f"geohash LIKE '{geohash}%'")

# Filter by distance

for place in db_places:

dist = haversine_distance(latitude, longitude, place.lat, place.lon)

if dist <= radius_km:

places.append(place)

return places

def haversine_distance(lat1, lon1, lat2, lon2):

"""Calculate distance between two points in km"""

import math

R = 6371 # Earth radius in km

dlat = math.radians(lat2 - lat1)

dlon = math.radians(lon2 - lon1)

a = math.sin(dlat/2)**2 + math.cos(math.radians(lat1)) * math.cos(math.radians(lat2)) * math.sin(dlon/2)**2

c = 2 * math.atan2(math.sqrt(a), math.sqrt(1-a))

return R * c29.3 Direction Finding (Shortest Path)

# Simplified Dijkstra's algorithm for shortest path

import heapq

class DirectionFinder:

def __init__(self, road_network):